About B-splines. Twenty answers to one question: What is the cubic B-spline for the knots -2,-1,0,1,2?

February 9, 2016.

\(^\ast \)October 22, 2004. SINTEF Applied Mathematics, Oslo, Norway.

This article by the late Ewald Quak is published with the consent of his wife Riina and upon the initiative of a member of the editorial board of this journal. It is followed by some comments of Carl de Boor on early Bulgarian and Romanian contributions to B-splines.

In this composition an attempt is made to answer one simple question only: What is the cubic B-spline for the knots -2,-1,0,1,2? The note will take you on a most interesting trip through various fields of Mathematics and finally convince you on how little we know.

MSC. 41-XX, 65-XX, 60-XX

Keywords. cubic B-spline, curves and surfaces, CAD-CAM, Approximation Theory, Numerical Analysis, Probability.

1 Introduction

Polynomial splines are real-valued functions which consist of polynomial pieces that are glued together at given breakpoints with prescribed smoothness. To name just a few applications of these powerful tools within applied and computational mathematics: splines are a de-facto standard in the mathematical description of curves and surfaces for industrial computer-aided design and manufacture (CAD-CAM). Spline surfaces and their offspring subdivision surfaces are at the heart of professional animation systems for the production of movies like Toy Story or Monsters, Inc. Splines are also used to solve ordinary differential equations and in the multivariate form of finite elements they form the cornerstone for the numerical treatment of partial differential equations. The approximation of scattered data, be it in geometric modelling or statistics, can be carried out with splines. Wavelet theory and multiresolution analysis owe a lot to spline functions as well.

![\includegraphics[scale=0.5]{bspline.png}](https://ictp.acad.ro/jnaat/journal/article/download/1099/version/1021/1378/3078/img-0001.png)

So both faculty and students are bound to encounter splines in very different settings and basic spline material is to some extent incorporated in undergraduate numerical analysis text books. In this paper I would like to give some examples how a certain B-spline basis function can be characterized. Some of these examples are well-known and at the heart of important theory or applications, other disguises are rather far-fetched, and could be considered as dragged from mathematical oblivion. I do not claim that this list is in any way complete, and I invite all readers to supply me with other approaches they have encountered.

In this text, I have deliberately chosen to focus on cubic splines and consequently not formulated any statements in the highest possible generality. In this way I hope to generate more questions rather than give complete answers, stimulating the reader’s interest to take a closer look –for example at other spline degrees or different choices of breakpoints. Some remarks in this direction are given in the final section of the paper. I tried to hold the proofs as elementary as possible, but still giving a flavor of what is happening in more general situations, avoiding - if possible - straightforward calculations just valid for the cubic case. As for the sources that inspired my twenty answers, I tried to quote early original papers, but are not in a position to claim absolute historical accuracy concerning who did what first. Instead the reader is invited to consult on his or her own more detailed spline monographs, or study books dealing with a specific application area using splines. Be aware, however, that it was of course necessary to change original notations and also proofs to generate a consistent presentation.

The twenty choices are listed in Section 2, including the references that inspired them. The first answer is discussed in Section 3 and allows to say a bit where the name spline originated. Using yet another definition we are able to address in Section 4 some basic properties in items 2, 3 and 4, including the basis property that motivated the term B-spline as in basis spline. In the subsequent Section 5, divided differences of truncated power functions allow us to come up with a closed formula expression, point out connections to Green’s functions and Peano kernels, and give a geometric interpretation in items 5-8. Fourier transforms are employed in Section 6 for items 9-11. Subdivision concepts, important in wavelet theory and geometric modeling, are then showcased in Section 7 for items 12 and 13. Section 8 is devoted to probability interpretations in items 14-17, including the historic reference from 1904, where the graph of a cubic B-spline (probably) appears for the first time, years before the term B-spline was coined. Also the last items 18-20 covered in Section 9 stem from roots in probability, but are singled out because they originated in the author’s country of residence. The final Section 10 tries to give some information how the general spline theory can be developed from the cubic approach in this elementary paper and where to find the relevant information. Enjoy!

2 Twenty choices

[ 44 , 27 ] The function \(f\) which minimizes the integral

\[ \int _{-2}^{2}\left( g^{\prime \prime }\left( x\right) \right) ^{2}dx, \]when considering all functions \(g\), whose second derivatives are square integrable over the interval \([-2;2]\) and which interpolate the following data:

\[ g\left( -2\right) =g^{\prime }\left( -2\right) =g\left( 2\right) =g^{\prime }\left( 2\right) =0,\ g\left( -1\right) =g\left( 1\right) =\tfrac {1}{6},\ g\left( 0\right) =\tfrac {2}{3}? \][ 38 ] The function \(f\) defined by the pieces

\[ f\left( x\right)= \begin{cases} \tfrac {1}{6}\left( x+2\right) ^{3},& \text{if }x\in \left[ -2,-1\right] ,\\ \tfrac {1}{6}\left( \left( x+2\right) ^{3}-4\left( x+1\right) ^{3}\right),& \text{if }x\in \left[ -1,0\right] ,\\ \tfrac {1}{6}\left( \left( 2-x\right) ^{3}-4\left( 1-x\right) ^{3}\right),& \text{if }x\in \left[ 0,1\right] ,\\ \tfrac {1}{6}\left( 2-x\right) ^{3},& \text{if }x\in \left[ 1,2\right] ,\\ 0,& \text{otherwise.}\end{cases} ? \][ 13 ] The function\(f\) of smallest support such that \(f\) is a cubic polynomial on each interval \([k;k+1]\) for each \(k\in \mathbb {Z}\), twice continuously differentiable everywhere, even and for which \(f(0)=\frac{2}{3}?\)

[ 2 , 12 ] The function \(f\) built from characteristic functions as

\begin{align*} f\left( x\right) & =\tfrac {x+2}{3}\left( \tfrac {x+2}{2}\left( \left( x+2\right) \mathcal{X}_{|[-2,-1)}\left( x\right) +\left( -x\right) \mathcal{X}_{|[-1,0)}\left( x\right) \right) \right. \\ & \quad \left. +\tfrac {1-x}{2}\left( \left( x+1\right) \mathcal{X}_{|[-1,0)}\left( x\right) +\left( 1-x\right) \mathcal{X}_{|[0,1)}\left( x\right) \right) \right) \\ & \quad +\tfrac {2-x}{2}\left( \tfrac {x+1}{2}\left( \left( x+1\right) \mathcal{X}_{|[0,1)}\left( x\right) +\left( 1-x\right) \mathcal{X}_{|[0,1)}(x)\right) \right. \\ & \quad \left. +\tfrac {2-x}{2}\left( \left( x\right) \mathcal{X}_{|[0,1)}\left( x\right) +\left( 2-x\right) \mathcal{X}_{|[1,2)}\left( x\right) \right) \right) . \end{align*}where for \(k=-2,-1,0,1\)

\[ \mathcal{X}_{|[k,k+1)}= \begin{cases} 1& \ \text{if\ }x\in \lbrack k,k+1),\\ 0& \ \text{if\ }x\notin \lbrack k,k+1) \end{cases} ? \][ 38 ] The function \(f\) given by

\[ f\left( x\right) =\tfrac {1}{6}\left( \left( -2-x\right) _{+}^{3}-4\left( -1-x\right) _{+}^{3}+6\left( -x\right) _{+}^{3}-4\left( 1-x\right) _{+}^{3}+\left( 2-x\right) _{+}^{3}\right) , \]where the truncated power functions are defined as \(x_{+}=\max \left( x,0\right) \) and \(x_{+}^{3}=\left( x_{+}\right) ^{3}?\)

[ 5 ] The function \(f\) obtained by taking the divided difference with respect to t in the points \(-2,\ -1,0,1,2\) of Green’s function associated with the differential operator\(\ D^{4}\) and multiplying the result by 24? This means

\[ f\left( x\right) =24\left[ -2,-1,0,1,2\right] _{t}G_{4}\left( t,x\right) , \]where for any given function \(h\) which is integrable on \(\left[ -2,2\right] \) and any real numbers \(g_{0},\ g_{1},\ g_{2},\) \(g_{3}\) the function

\[ g\left( t\right) =\sum _{j=0}^{3}g_{j}\tfrac {\left( t+2\right) ^{j}}{j!}+\int _{-2}^{2}G_{4}\left( t,x\right) h\left( x\right) dx \]solves the initial value problem

\begin{align*} g^{\left( 4\right) }\left( t\right) & =h\left( t\right), \text{\ \ \ \ \ almost everywhere in }\left[ -2,2\right] ,\\ g^{\left( \ell \right) }\left( -2\right) & =g_{\ell },\ \ell =0,1,2,3. \end{align*}[ 13 ] The function \(f\) for which

\[ \int _{-\infty }^{+\infty }f\left( x\right) h^{\left( 4\right) }\left( x\right) dx=\bigtriangleup ^{4}h\left( 0\right) , \]for all functions \(h\), which are four times continuously differentiable on \(\left[ -2,2\right] \), and where \(\bigtriangleup ^{4}h\left( x\right) \) is the fourth order central difference defined as

\[ \bigtriangleup ^{4}h\left( x\right) =\bigtriangleup \left( \bigtriangleup \left( \bigtriangleup \left( \bigtriangleup h\left( x\right) \right) \right) \right) ,\quad \text{\ with }\bigtriangleup h\left( x\right) =h\left( x+\tfrac {1}{2}\right) -h\left( x-\tfrac {1}{2}\right) ? \][ 13 ] The function \(f\) so that \(f\ (x)\) represents the 3-dimensional volume of the intersection of a hyperplane in \(\mathbb {R}^{4}\) that is orthogonal to the \(x\)-axis and goes through the point \((x,0,0,0)\) with a 4-simplex of volume 1, which is placed in \(\mathbb {R}^{4}\), so that its five vertices project orthogonally onto the points on the \(x\)-axis \((-2,0,0,0),(-1,0,0,0),(0,0,0,0),(1,0,0,0),\) \((2,0,0,0)\)?

[ 38 ] The function \(f\) whose Fourier transform is the \(4th\) power of a dilated sinc function, i.e., using the inverse Fourier transform

\[ f\left( x\right) =\tfrac {1}{2\pi }\int _{-\infty }^{+\infty }\left( \tfrac {\sin \left( \frac{u}{2}\right) }{\frac{u}{2}}\right) ^{4}e^{ixu}du? \][ 38 ] The function \(f\) which results when taking the convolution of the hat function

\[ N\left( x\right) = \begin{cases} x+1,& \ \text{if\ }x\in \left[ -1,0\right], \\ 1-x,& \ \text{if\ }x\in \left[ 0,1\right], \\ 0,& \ \text{otherwise,}\end{cases} \]with itself, i.e.,

\[ f\left( x\right) =\left( N\ast N\right) \left( x\right) =\int _{-\infty }^{+\infty }N\left( x-y\right) N\left( y\right) dy,\quad x\in \mathbb {R}? \][ 36 , 28 ] The function \(f\) generated in the following way?

In \(\mathbb {R}^{2}\) a unit square is placed with its lower edge on the \(x\)-axis and then moved along this axis. Assuming the square’s center at time \(x\) to be the point \(\left( x,\frac{1}{2}\right) \), we obtain a function \(A\) such that \(A(x)\) is the area of the region cut out of the square by the graph of the hat function \(N(x)\) (see item 10) and the \(x\)-axis. Repeating this process one more time, we obtain \(f(x)\) as the area of the region cut out of the square by the graph of the function \(A(x)\) and the \(x\)-axis.

[ 8 ] The continuous function \(f\) which has the values \(f(0)=\frac{2}{3},\) \(f\left( 1\right) =f\left( -1\right) =\frac{1}{6},\) and \(0\) in all other integers and satisfies the functional equation

\[ \qquad f\left( x\right) \! =\! \tfrac {1}{8}\left( f\left( 2x\! +\! 2\right) \! +\! 4f\left( 2x\! +\! 1\right) \! +\! 6f\left( 2x\right) \! +\! 4f\left( 2x\! -\! 1\right) \! +\! f\left( 2x\! -\! 2\right) \right) ? \][ 34 , 9 ] The continuous function \(f\) which results from the following limit process?

We start with the polygon in \(\mathbb {R}^{2}\) that connects the initial points \(P_{k}^{0}=(k,\delta _{k,0})\). Then we generate new polygons successively by setting for \(r\in N\cup \{ 0\} \) and any \(k\in \mathbb {Z}\)

\begin{align*} P_{2k}^{r+1} & =\tfrac {1}{8}\left( P_{k-1}^{r}+6P_{k}^{r}+P_{k+1}^{r}\right) ,\\ P_{2k+1}^{r+1} & =\tfrac {1}{2}\left( P_{k}^{r}+P_{k+1}^{r}\right) . \end{align*}The resulting polygons converge to \(f\) in the sense that for \(r\in \mathbb {N}\cup \{ 0\} \) and any \(k\in \mathbb {Z}\)

\[ \left\Vert \left( \tfrac {k}{2^{r}},f\left( \tfrac {k}{2^{r}}\right) \right) -P_{k}^{r}\right\Vert _{\infty }\leq \tfrac {1}{3}\tfrac {1}{2^{r}}. \][ 25 ] The function \(f\) describing the discrete probability distribution for the following urn model?

Consider an urn initially containing w white balls and b black balls. One ball at a time is drawn at random from the urn and its color is inspected. Then it is returned to the urn and \(w+b\) balls of the opposite color are added to the urn. A total of three such draws are carried out. With \(t=w/(w+b)\) as the probability of selecting a white ball on the first trial, the probability of selecting exactly 3 white balls in the three trials is \(f\ (t-2)\), of selecting exactly 2 white balls in the three trials is \(f\) \((t-1)\), of selecting exactly 1 white ball in the three trials is \(f(t)\) and of selecting exactly \(0\) white balls in the three trials is \(f(t+1)\).

[ 38 , 43 ] The function \(f\) that is the density distribution of the error committed in the sum \(X_{1}+X_{2}+X_{3}+X_{4}\) of 4 independent real random variables \(X_{k}\), if each variable is replaced by its nearest integer value?

[ 43 ] The function \(f\), such that \(f(x)\) is the 3-dimensional volume of the section of the 4-dimensional unit cube \(\left[ -\frac{1}{2},\frac{1}{2}\right] ^{4}\) cut out by the hyperplane \(x_{1}+x_{2}+x_{3}+x_{4}=x\), which lies normal to the cube’s main diagonal (connecting \(\left( -\frac{1}{2},-\frac{1}{2},-\frac{1}{2},-\frac{1}{2}\right) \) to \(\left( \frac{1}{2},\frac{1}{2},\frac{1}{2},\frac{1}{2}\right) \))?

[ 43 ] The function \(f\) whose graph appears in the contribution of A. Sommerfeld to the Festschrift Ludwig Boltzmann gewidmet zum 60. Geburtstage, the monograph dedicated to the 60th birthday of Ludwig Boltzmann, February 20th 1904?

[ 6 ] The function \(f\) so that

\[ \qquad f\left( x\right) =\tfrac {1}{\pi }\int _{x-\frac{1}{2}}^{x+\frac{1}{2}}\int _{t_{3}-\frac{1}{2}}^{t_{3}+\frac{1}{2}}\int _{t_{2}-\tfrac {1}{2}}^{t_{2}+\frac{1}{2}}\left( Q\left( t_{1}+\tfrac {1}{2}\right) -Q\left( t_{1}-\tfrac {1}{2}\right) \right) dt_{1}dt_{2}dt_{3}, \]where

\[ Q\left( t\right) =\lim _{\lambda \rightarrow 0^{+}}\arctan \left( \tfrac {t}{\lambda }\right) ? \][ 23 ] The function \(f\) given by the real part of a complex integral in the following way:

\[ f\left( x\right) =\tfrac {8}{\pi }\operatorname {Re}\int _{0}^{+\infty }\tfrac {u^{3}}{\left( 4^{2}+\left( u-2ix\right) ^{2}\right) \left( 2^{2}+\left( u-2ix\right) ^{2}\right) \left( u-2ix\right) }du? \][ 23 ] The function \(f\) represented as

\[ f\left( x\right) =\tfrac {1}{12}\sum _{k=0}^{4}\left( -1\right) ^{k}\tbinom {4}{k}\left\vert x+2-k\right\vert ^{3}{\rm sign}\left( x+2-k\right) ^{4}? \]

3 Prelude: why is a spline called a spline?

One of the most important and straightforward things one wishes to do in numerical analysis is to interpolate given values using some simple basic functions, for example algebraic or trigonometric polynomials. Concerning interpolation by algebraic polynomials, however, it has been known for over a hundred years [ 35 ] that an interpolant can display enormous oscillations, even if the data is taken from a smooth well-behaved function. One of the ideas used to counter this effect is to consider functions that consist of various simple pieces which are glued together with a given smoothness.

3.1 The complete cubic spline interpolant

Here we will try cubic polynomial pieces connected in such a way that they form a function that is twice continuously differentiable everywhere. The places where different pieces meet are typically called breakpoints or knots. In this sense let us consider the following example.

(Interpolation) Given the five abscissae \(t_{0}{\lt}t_{1}{\lt}t_{2}{\lt}t_{3}{\lt}t_{4},\) we wish to find an interpolating cubic spline function \(s\), meaning that

for prescribed real values \(g_{k}\).

Is this problem even well-posed in the sense that there exists a unique solution amongst all the piecewise cubic \(C^{2}\) functions on \([t_{0},t_{4}]\) with interior breakpoints in \(t_{1}{\lt}t_{2}\) \({\lt}t_{3}\)? The function \(s\) must be a cubic polynomial \(p\) on the interval \([t_{0},t_{1}]\), giving us 4 parameters. Since we have \(C^{2}\) continuity in the point \(t_{1}\), we must have \(s(x)=p(x)+a_{1}(x-t_{1})^{3}\) on the interval \([t_{1},t_{2}]\), and analogously \(s(x)=p(x)+a_{1}(x-t_{1})^{3}+a_{2}(x-t_{2})^{3}\) on \([t_{2},t_{3}]\) and \(s(x)=p(x)+a_{1}(x-t_{1})^{3}+a_{2}(x-t_{2})^{3}+a_{3}(x-t_{3})^{3}\) on \([t_{3},t_{4}]\). This means we have in total seven parameters and five interpolation conditions to satisfy, so if – as we just expect, but do not know precisely right now – the conditions are independent, we still have two parameters to fix by other conditions. There are many choices for the additional two conditions, typically involving the two endpoints of the interval. One such choice is \(s^{\prime \prime }(t_{0})=s^{\prime \prime }(t_{4})=0\) [ 27 ] , but that actually influences the approximation quality in the endpoints negatively ( [ 3 ] , p. 55). Another prominent choice, which we consider here, is to prescribe Hermite data in the endpoints [ 44 ] , i.e., demanding in addition

yielding what is called a complete cubic spline interpolant \(s\).

To show properly the existence and uniqueness of the complete spline interpolant, we can proceed as in the early days of splines and set directly

We get from the interpolation conditions (3.1) and the continuity in the break-points, with \(h_{k}=t_{k+1}-t_{k},\) for \(k=0,1,2,3\)

The continuity of the first derivatives then yields

and the continuity of the second derivative

Using as principal unknowns the \(C_{k}=\frac{1}{2}s^{\prime \prime }\left( t_{k}\right) \) for \(k=0,1,2,3\) as well as \(C_{4}\) as an additional variable, we get for \(k=0,1,2\) from (3.6) \(k=3\)

Then (3.4) yields

Finally, the equations (3.8) and (3.7) substituted into (3.5) yield the linear system

with the right hand side

The two additional Hermite conditions (3.2) in the endpoints mean that

resulting in the final tridiagonal \(5\times 5\) system

with

The coefficient matrix is diagonally dominant, and so there exists a unique solution of \(C_{k}^{\prime }s\), from which all the other parameters can be computed, yielding a unique complete cubic spline interpolant \(s\) for the prescribed interpolation conditions (3.1) and (3.2). Clearly, the whole deduction can be carried out for any number \(n\) of distinct interior breakpoints and Hermite endpoint conditions.

3.2 An extremal property

The complete cubic spline interpolant \(s\) has a very special extremal property. If we take any other function \(g\) that possesses a square-integrable second derivative on \(\left[ t_{0},t_{4}\right] \) and interpolates the given function values \(g_{k}\ \)in the abscissae \(t_{k}\), and in addition the derivative values \(g_{0}^{\prime }\) and \(g_{4}^{\prime }\) in the endpoints, we have the following integral relation between \(s\) and \(g\)

because integration by parts yields

as the remaining terms vanish due to the derivative interpolation (3.2) in the interval endpoints. Since \(s^{\prime \prime \prime }\) is piecewise constant, namely \(6D_{k}\) on the interval \(\left[ t_{k},t_{k+1}\right] \), we can split the integral to obtain

due to the interpolation conditions (3.1), establishing (3.10). This relation, however, implies for any such interpolatory \(g\) a Pythagorean integral equation

so that

with equality only if \(g=s\).

So \(s\) is the unique function among all the interpolants for the specific interpolation conditions (3.1) and (3.2) which possesses minimal 2-norm of the second derivative. Again, this holds for all sets of distinct breakpoints in an interval \(\left[ a,b\right] \).

Note that the integral relation (3.10) and thus the extremal property (3.11) also hold in the case, where the Hermite boundary interpolation conditions (3.2) are replaced by the conditions \(s^{\prime \prime }\left( t_{0}\right) =s^{\prime \prime }\left( t_{4}\right) =0\) [ 27 ] , and with suitable boundary conditions similar properties can be derived for other odd spline degrees [ 1 ] .

3.3 This why a spline is called a spline

Finally, we can address the title question of this section. From differential geometry we know that the curvature of a curve, which is given as the graph \(\left( x,g\left( x\right) \right) \) of a smooth function \(g\) over an interval \([a,b]\), can be described locally by the formula

Instead of minimizing the strain energy \(\int \) \(\kappa ^{2}\left( x\right) dx\) of such a curve that is to pass through given points, one can simplify by just minimizing \(\int \left( g^{\prime \prime }\left( x\right) \right) ^{2}dx\). From the previous subsection we know that the optimal function in this latter case is in fact the cubic spline interpolant. Keep in mind, though, that only if \(\left\vert g^{\prime }\right\vert \) is really small, then the curvature \(\kappa \) is actually close to \(g^{\prime \prime }\).

Certain instruments used in naval architecture for the construction of a smooth curve through given points were called splines, and it is for this reason the name spline was introduced in the pioneering work of I. J. Schoenberg [ 38 ] , p. 48: For \(k=4\) (polynomial order = degree \(+1\)) they (the curves) represent approximately the curves drawn by means of a spline (instrument) and for this reason we propose to call them spline curves of order \(k\).

Note that the use of polynomial order instead of degree has some advantages which we will come back to in later sections. Figure 2 shows the reconstruction of a mechanical spline instrument, produced by the Norwegian Maritime Museum for the University of Oslo.

3.4 A special case (Item 2.1)

Using the function values and first derivatives given in item 2.1, we obtain from the general case (3.9) the specific tridiagonal system of conditions

yielding the solution \(C_{0}=C_{4}=0,\ C_{1}=C_{3}=\frac{1}{2}\) and \(C_{2}=-1.\)

As the piecewise representation of the complete cubic spline interpolant \(s\) in this (very) particular case we thus obtain

Note that we actually get here \(s\in C^{2}\left( \mathbb {R}\right) \) , if we take \(s\) to be identically zero outside of the interval \(\left[ -2,2\right] \). For a graph of this function take a look back at the front page.

4 One possible definition and some properties

Somehow the introduction in the previous section is not exactly to the point. Although it shows clearly why cubic splines as a class of functions are distinguished for certain interpolation problems through the extremal property (3.11), it does not really single out one specific function amongst all splines. Why should the function values and endpoint derivatives in item 2.1 lead to any kind of special spline function? Shouldn’t we rather think of a spline that is equal to one in one breakpoint and zero in all others? We see that if we add more break-points and corresponding interpolation conditions, we increase the size of the system of equations (3.9), though it will still be tridiagonal and uniquely solvable. As a consequence, however, the complete cubic spline interpolant will generally consist of many pieces and be globally supported from endpoint to endpoint. Thus in total item 2.1 does not seem like such a good way to define spline basis functions. In fact, what happened is the following: I wanted to introduce the general extremal property of splines and thus explain the name in the beginning, but also had to get exactly the answer (3.16). So in a way I still owe you a proper definition of a cubic B-spline for the knots \(-2,-1,0,1,2\).

4.1 A definition of a cubic B-spline

At the end of the previous section, we have already seen that the function \(s\) in (3.16) is actually everywhere twice differentiable if we extend it by setting it to zero outside of the interval \(\left[ -2,2\right] \). That way \(s\) is in fact a cubic polynomial over each interval \(\left[ k,k+1\right] ,\ k\in \mathbb {Z}\), not just for \(k=-2,\) \(-1,0,1\). The function has just four nontrivial pieces, and for numerical purposes it would be very advantageous to operate only with small supports and a small number of pieces. So far, however, all these properties are satisfied also for a multiple \(c\cdot s\), for any nonzero constant \(c\). A prescribed function value in the center of the support, \(x=0\), is one way to fix this constant. Why exactly the normalization in the following definition was picked, will become clear a little later. Now let’s go.

(Cubic B-spline) We introduce the cubic B-spline B for the knots (or breakpoints) \(-2,-1,0,1,2\) by demanding that it satisfies the following properties:

\(B\) is a real-valued function defined for all \(x\in \mathbb {R}\).

\(B\) has only local support, namely the interval \(\left[ -2,2\right] \).

On each subinterval \(\left[ k,k+1\right] \) for \(k\in \mathbb {Z}\) the function \(B\) is a cubic polynomial: \(B_{| [k,k+1] }\in \Pi _{3}\).

The six polynomial pieces, i.e., including the two identically zero ones on \([-\infty ,-2)\) and \(\left( 2,\infty \right) ,\) are fitted together so that the function \(B\) is twice continuously differentiable everywhere: \(B\in C^{2}\left( \mathbb {R}\right) \).

Finally we impose that the function \(B\) attains a specific value in the center of its support, namely \(B\left( 0\right) =\frac{2}{3}\).

Ignoring the results of the previous section, we would just naively register that we have a total of sixteen free parameters, since we have the four cubic polynomial pieces over \(\left[ -2,2\right] \), each providing four parameters. On the other hand, we have fifteen conditions in the form of three continuity conditions for each of the five active breakpoints \(-2,-1,0,1,2,\), which heuristically explains why we need a final condition to obtain a unique solution.

4.2 An explicit formula (Item 2.2)

Starting from scratch, we determine the polynomial pieces of \(B\) explicitly this time. The \(C^{2}\) continuity in \(-2\) and \(2\) imposes, since \(B\) is identically zero outside \([-2,2]\), that

for some factors \(a_{-2}\) and \(a_{1}\), while the \(C^{2}\) continuity in \(-1\) and \(1\) leads to

for some \(a_{-1}\) and \(a_{0}\). The \(C^{2}\) continuity in \(0\) yields – not too surprisingly – the symmetry that \(a_{-2}=a_{1}\) and \(a_{-1}=a_{0}\) as well as \(a_{0}=-4a_{1}\).

Thus the cubic B-spline \(B\) is indeed given up to a constant factor by the continuity conditions imposed in the knots and the prescribed function value in \(0\) fixes this constant to achieve the representation of item 2.2, with the polynomial pieces of course just the same as those already stated in (3.16). Note that

Item 2.2 can be found in formula (4.2) on page 71 of [ 38 ] , but there it is derived in a completely different way, which we will come back to later in Section 6.

4.3 The basis property

Next we see that for \(k\in \mathbb {Z}\) the translated functions \(B\left( \cdot -k\right) \) are also in \(C^{2}\left( \mathbb {R}\right) \), have support \(\left[ k-2,k+2\right] \) and each consist of four cubic polynomial pieces within their supports. Thus it is possible to look at the whole set of functions \(\{ B\left( \cdot -k\right) \} _{k\in \mathbb {Z}}\) instead of only \(B\). Then any function represented as

is a real-valued piecewise cubic \(C^{2}\) function on all of \(\mathbb {R}\) with breakpoints in the integers \(\mathbb {Z}\). Note that due to the local supports, the sum for any \(x\in \mathbb {R}\) only contains at most four terms for which the corresponding function value of B-spline translates is nonzero, so we need not worry about the convergence of an infinite series here.

Do we cover all real-valued piecewise cubic \(C^{2}\) functions with breakpoints in the integers this way, meaning is it possible to write any such function \(g\) in the form given in (4.1)? The answer is yes, justifying the term B-spline – as for basis spline – introduced by Schoenberg in [ 39 ] , p. 256: It was shown in ( [ 13 ] ) that spline functions in an interval \(\left( a,b\right) \), finite or infinite, with prescribed knots, admit a unique representation in terms of so-called fundamental spline functions, which we now call B-splines. The master was of course referring to arbitrary knots and polynomial degrees, but we are content to investigate the specific situation at hand.

Let us start by looking at the interval \(\left[ 0,1\right] \). Only the four translates \(B\left( \cdot +1\right) ,B\left( \cdot \right) ,B\left( \cdot -1\right) \) and \(B(\cdot -2)\) do not vanish on this interval. Denoting \(p_{k}=B\left( \cdot -k\right) _{|\left[ 0,1\right] }\) for \(k=-1,0,1,2,\) we have for \(x\in \left[ 0,1\right] \) from item 2.2

An instructive way to check that the four polynomials \(p_{k}\) are linearly independent is to show that the monomial basis up to degree 3 can be represented by the \(p_{k}\) locally, i.e., for \(x\in \left[ 0,1\right] \):

This means that we can write the restriction of any polynomial over \(\left[ 0,1\right] \) in terms of \(B\left( \cdot -k\right) ,k=-1,0,1,2:\)

The \(C^{2}\) continuity in \(x=1\) means that terms of

As the support of \(B\left( \cdot -3\right) \) is the interval \(\left[ 1,5\right] \) and \(B\left( x-3\right) _{|\left[ 1,2\right] }=\frac{1}{6}\left( x-1\right) ^{3}\), we get

and we can proceed inductively interval by interval for the positive x-axis and repeat the procedure for the negative \(x\)-axis, looking first at \(\left[ -1,0\right] \) and so forth, thus establishing that any \(g\) has a representation of the form (4.1).

4.4 Local reproduction of polynomials

As a special case, since all cubic polynomials can of course be seen as piecewise polynomials, where the breakpoints are just not active, we know that we can represent them as a spline series of the form (4.1). In fact, the extension of the local representation of the monomials can be derived inductively for all \(x\in \mathbb {R}\) as

Note that the normalization condition \(B\left( 0\right) =\frac{3}{2}\) from the B-spline definition is just the right one to produce that the spline \(B\) and its integer translates form a partition of unity (4.3).

Recalling that \(B\left( \cdot -k\right) \) has support \(\left[ k-2,k+2\right] \), the knots in the interior of its support are \(k-1,k,k+1\). Why don’t you find out how the coefficients in the expansions of the monomials are related to the symmetric functions? These are given for any three real numbers \(s_{1},s_{2},s_{3}\) by

4.5 Minimal support (Item 2.3)

Since we have now established that each \(C^{2}\) function which is piecewise cubic over the integers can be written in the

series form (4.1), we can show that the function \(B\) has indeed smallest support, meaning that there is no such function \(g\neq 0\), whose support is properly contained in \(\left[ -2,2\right] \). If we assume that there is such a function, since it is piecewise polynomial over the integers, its support would be contained either in \(\left[ -2,1\right] \) or \(\left[ -1,2\right] \). We look at the first case, the other one is dealt with analogously.

According to the basis property, we know that the function \(g\) must have a representation of the form (4.1). Since the function g is identically zero on \(\left[ 1,2\right] \), arguing in a similar way as for (4.2), we establish that in the series representation \(c_{0}=c_{1}=c_{2}=c_{3}=0\), and use induction to show that \(c_{k}=0\) also for \(k\geq 4\). Proceeding similarly for the interval \(\left[ -3,-2\right] \), we get \(c_{-4}=c_{-3}=c_{-2}=c_{-1}=0\), and from there on \(c_{k}=0\) also for \(k\leq -5\), establishing that \(g\equiv 0\). The same argument holds of course to see that the piecewise cubic \(C^{2}\) functions of support \(\left[ k-2,k+2\right] \) must have the form \(c_{k}B\left( \cdot -k\right) ,c_{k}\neq 0\), for any \(k\in \mathbb {Z}\).

We are now able to address item 2.3. A function, which is in \(C^{2}\left( \mathbb {R}\right) \), a cubic polynomial on each interval \(\left[ k,k+1\right] \) for \(k\in \mathbb {Z}\), and has smallest support must have the form \(c_{k}B\left( \cdot -k\right) \). Since it attains the function value \(\frac{2}{3}\) in \(x=0\), it can only be \(4B\left( \cdot +1\right),\ B\left( \cdot \right) \) or \(4B\left( \cdot +1\right)\). Since the function is supposed to be even as well, it must be \(B\) itself.

As mentioned before, our argument for the basis property of spline functions is derived from [ 13 ] , where it is actually carried out for any degree and any kind of knot sequence, i.e., the breakpoints are no longer equally spaced and thus the basis functions no longer translates of just one function like our \(B\) here. For those who are striving (not always successfully) to publish their results promptly, here is a quote from the introduction of this paper of 1966: The present paper was written in 1945 and completed by 1947 (...) but for no good reason has so far not been published. Those were the days.

4.6 A recursion formula (Item 2.4)

Although I have stated in the introduction that we will restrict ourselves to polynomial degree 3, it is actually essential to see how minimally supported splines of given degree can be recursively put together using minimally supported splines of lower degree. So I am going to introduce a specific piecewise quadratic, linear and constant function now, but without much of an explanation. Find out for yourself about their properties such as the relation of the polynomial degree to the number of nontrivial pieces, positioning of breakpoints and the order of smoothness in these breakpoints. Once you have reached the last section and thus the general definition of a B-spline, you will be able to put things into perspective. Just to confuse you I will index these piecewise polynomial functions by the order of the polynomial pieces, and not by their degree.

We start by setting \(M_{4}=B\) as a piecewise cubic function. For piecewise quadratics we define \(M_{3}\) by

and the piecewise linear \(M_{2}\) (yes, this is actually the hat function \(N\) from item 2.10) as

Finally, for piecewise constants we take

Now the functions \(M_{1}\left( \cdot -\frac{1}{2}\right) =\chi _{|{[0,1)}},M_{2},M_{3}\left( \cdot -\frac{1}{2}\right) \) and \(M_{4}\) are all piecewise polynomials with breakpoints in the integers of increasing polynomial degree, but are they connected in some way? We set \(t_{k}=k,k\in \mathbb {Z}\), and define, following the notation in [ 13 ] ,

It is easily verified using item 2.1, (4.8), (4.12) and (4.15), that the following recursion holds for \(j=2,3,4\) and any \(k\in \mathbb {Z}\):

This recursion formula can be illustrated in the form of a triangle

For \(k=-2,\) substituting expressions for decreasing \(j\) and comparing with item 2.2 interval by interval, establishes item 2.4. Of course, just checking the formulae for the polynomial pieces is unsatisfactory, and will not work for arbitrary degree, let alone arbitrary breakpoints, so we are in need of a closed formula expression for the B-splines and we will attack that problem in the subsequent section. For the recursion in the general case, we defer to the final section.

Having a recursion available is very important, for instance, to handle B-splines in practical computations in a numerically stable way. The recursion, as found independently in [ 2 ] and [ 12 ] , is typically considered as the starting point for splines as a practical numerical tool. We will not dwell on this too much here, but give one example, namely the evaluation of the series (4.1).

For \(x\in \lbrack t_{\ell },t_{\ell +1})\), for any \(\ell \in \mathbb {Z}\), only four terms are relevant due to the compact support of \(B\):

where the \(c_{\ell }^{3}\left( x\right) \) are defined recursively for \(j=1,2,3\), and \(k=\ell -3+j,\ldots ,\ell \) as

with the initialization

Again, we can illustrate this using a triangular scheme:

For a thorough look at triangular schemes for polynomials and splines consult [ 26 ] .

5 Enter: truncated power functions

To derive a closed formula, especially one allowing also the use of nonuniform knots, we start with a different approach. The most straightforward piecewise polynomial functions are the following:

For all \(x\in \mathbb {R}\) the truncated function is defined as

and for fixed \(n\in \mathbb {N}\) the truncated power function of degree \(n\) by

These functions consist of just two polynomial pieces glued together at the breakpoint \(0\), so that the function values of the two pieces match in the knot and also all the corresponding onesided derivatives (the leftsided ones for the left piece which is identically zero and the rightsided ones for the right piece, the polynomial \(x^{n}\)) up to the order \(n-1\). The \(n\)-th derivative then displays a jump discontinuity in the breakpoint. Altogether \(x_{+}^{n}\) is a function that is \(n-1\) times continuously differentiable on all of \(\mathbb {R}\). Clearly the breakpoint can be shifted to any other point by a simple translation, i.e., \(\left( x-a\right) _{+}^{n}\) has its breakpoint in \(a\). A quick quote from [ 3 ] , p. 101: We refuse to worry about the meaning of the symbol \(\left( 0\right) _{+}^{0}\).

5.1 A closed formula for B-splines (Item 2.5)

It is possible that a linear combination of truncated power functions with different breakpoints, which are of infinite support, can be combined to generate a compactly supported function. In fact, using the definition and investigating the different cases for the respective intervals, we can show that representation 2.5 holds, i.e.,

At this point it is time to remember a fundamental tool in numerical analysis, typically introduced when studying the Newton form of interpolation polynomials, namely the divided differences.

For a function \(f\) the fourth order divided difference in the five distinct points \(t_{0},t_{1},t_{2},t_{3},t_{4}\) is defined as the leading coefficient of the polynomial of degree 4 that interpolates \(f\) in \(t_{0},t_{1},t_{2},t_{3},t_{4}\), so

where

As is well known, we can compute divided differences for points \(t_{k}{\lt}\ldots {\lt}t_{k+\ell }\) by a recursion:

and

Defining the function \(G\left( t,x\right) =\left( t-x\right) _{+}^{3}\), we can establish that in fact

so that the cubic B-spline \(B\) for the knots \(-2,-1,0,1,2\) is generated by applying the fourth order divided difference for these knots to the functions \(G\) with respect to the variable \(t\) and multiplying by the difference last knot minus first knot. The equation is translation invariant in the sense that

5.2 Green’s functions (Item 2.6)

Now we proceed to investigate other settings, where the truncated power functions and thus B-splines as their divided differences are of importance.

For the linear differential operator

and initial conditions

a bivariate function \(G_{\mathcal{L}}\left( x,t\right) \) defined on a bivariate interval \(\left[ a,b\right] ^{2}\) is called Green’s function for the differential operator (5.5) with given initial conditions (5.6), if the unique solution to the initial value problem (IVP) given by

for any right hand side function \(h\) that is integrable on the interval \(\left[ a,b\right] \) and arbitrary initial condition (5.6) can be expressed as

where \(y_{p}\ \)is the solution of the homogeneous \(IVP\ \mathcal{L}[y]=0\) under the given initial conditions, while the integral term solves the equation \(\mathcal{L}[y]=h\) under homogeneous initial conditions.

We can now compute directly that for the simple operator of fourth order differentiation \(\mathcal{L}=D^{4},\) i.e., \(\mathcal{L}\left[ y\right] =y^{\left( 4\right) }\) we obtain the fourth-order Taylor polynomial in \(a\) as \(y_{p}^{4}\)

Differentiating under the integral sign yields

and thus according to Definition 4

yielding as the solution of the IVP on \(\left[ a,b\right] \)

This holds true also for other spline degrees n, the differential operator \(D^{n+1}\), and the truncated power function \(\frac{1}{n!}\left( x-t\right) _{+}^{n}\) as the corresponding Green’s function, see [ 5 ] . Note that we have used \(\left( x-t\right) _{+}^{3}\) here, while we use \(\left( t-x\right) _{+}^{3}\) in the B-spline definition. We have, however, that

and since the fourth order divided difference annihilates polynomials up to degree 3

and thus item 2.6, if we choose specifically \(\left[ a,b\right] =\left[ -2,2\right] \).

5.3 Peano kernels (Item 2.7)

The annihilation property of divided differences comes again into play in a special case of a remainder theorem originally established by Peano (see [ 15 ] ). In my opinion this a really powerful theorem as it yields remainder terms for various types of interpolation, numerical integration and differencing. For cubic polynomials this allows – for example – to state remainder formulae for Lagrange interpolation in four points, for the cubic Taylor polynomial in one given point, or for Simpson’s rule for numerical quadrature (try and see what you obtain).

Let a linear functional on the function space \(C^{4}\left[ a,b\right] \) be given in the form

where the functions \(a_{j}\) are assumed to be piecewise continuous over \(\left[ a,b\right] \) the points \(t_{j,k}\) lie in \(\left[ a,b\right] \) while all \(j_{k}\) are in \(\mathbb {N}\) and all \(b_{j,k}\) are real numbers. If the functional \(L\) annihilates polynomials of degree 3, i.e.,

then we have for \(h\in C^{4}\left[ a,b\right] \) the representation

with

meaning that the functional \(L\) is applied to the truncated power function as a function of \(t\), treating \(x\) as a free parameter. The function \(K\) is called the Peano kernel of the functional \(L\).

This remainder theorem also covers our divided differences of fourth order, since they annihilate cubics, and we obtain for all \(h\in C^{4}\left[ a,b\right] \)

so the cubic B-spline \(B\) is – up to a factor – the Peano kernel for the divided difference functional, and this holds also for other degrees and nonuniform knots.

Note that we obtain from here the definite integral of the cubic B-spline By choosing \(h\left( x\right) =x^{4}\), so that

Finally, we point out that for equally spaced points, there is of course a direct correspondence between divided differences and central differences. Over the integers we define the central difference

and iteratively

We thus compute that

On the other hand according to the recursive computation of the divided differences (5.2), we have

and thus (recalling (5.8) we obtain item 2.7

5.4 A geometric interpretation (Item 2.8)

The Peano kernel property of the B-spline \(B\) and an integral representation of divided differences (again valid for any choice of simple knots) give rise to our first geometric interpretation of \(B\). Using again the recursion for divided differences (5.2), it is possible to establish the Hermite-Genocchi integral representation of a divided difference for a smooth function \(h\in C^{4}\left[ x_{0},x_{4}\right] \)

where the domain of integration is the simplex \(\Sigma \) in \(\mathbb {R}^{4}\) given as

A straightforward proof for general order divided differences is of course done by induction over the number of points \(x_{j}\).

We now position a unit-volume simplex \(\Sigma ^{\ast }\) in \(\mathbb {R}^{4}\), so that its five vertices under orthogonal projection to the \(x\)-axis are mapped onto the points \(\left( x_{j},0,0,0\right) ^{T}\). There are a lot of possibilities for this, a simple one being the vertices

Regardless of the actual choice of \(X_{j}=\left( x_{j},y_{j,}z_{j},u_{j}\right) ^{T}\), as long as \(4!{\rm vol}_{4}\left( \Sigma ^{\ast }\right) =\det \left( X_{1}-X_{0},X_{2}-X_{0},X_{3}-X_{0},X_{4}-X_{0}\right) =4!\), we make a change of variables

The determinant of the Jacobian \(\frac{\partial \left( x,y,z,u\right) }{\partial \left( s_{1},s_{2},s_{3},s_{4}\right) }\) needed for the corresponding integral transformation is \({\rm det}\left( X_{1}-X_{0},X_{2}-X_{0},X_{3}-X_{0},X_{4}-X_{0}\right) =4!\), so that we obtain by the Hermite-Genocchi formula (5.10)

The interior triple integral is now indeed the 3-dimensional volume of the intersection of the hyperplane given by \(x=x_{f}\), with \(x_{f}\) fixed between \(x_{0}\) and \(x_{4}\), and the simplex \(\Sigma ^{\ast }\). On the other hand, by (5.8), it must be the B-spline \(B\) as the Peano kernel of the divided difference, since the equations hold for all functions \(h\in C^{4}\left[ a,b\right] \), yielding

and thus item 2.8. Note how the volume is always the same, regardless of how the simplex is chosen, provided the first coordinate of each \(X_{j}\) is \(x_{j}\) and the volume of \(\Sigma ^{\ast }\) is 1, a nice illustration of Cavalieri’s principle.

6 Fourier transforms

We will now look at situations, where the function \(B\) arises specifically because its breakpoints lie not just anywhere, but in the integers. Schoenberg called this the cardinal case, see his monograph [ 40 ] . For background material on Fourier transforms, used here without proof, consult a textbook on Fourier analysis. I for myself used the formulations from Chapter 2 in [ 11 ] . Note that \(i\) everywhere in this text denotes the imaginary unit, it is never used as an index.

Recall first that the Fourier transform of a function \(g\in L_{1}\left( \mathbb {R}\right) \) is defined as

and the inverse Fourier transform of \(\hat{g}\in L_{1}\left( \mathbb {R}\right) \) as

Next recall that, provided both \(g\) and \(\hat{g}\in L_{1}\left( \mathbb {R}\right) \), at every point \(x\) where \(g\) is continuous

Let \(g,h\in L_{1}\left( \mathbb {R}\right) \), then the convolution \(g\ast h\) is also in \(L_{1}\left( \mathbb {R}\right) \), where

For the Fourier transform of a convolution we have

Recall now that the sinc function is defined as

It turns out that the sinc function is closely related to piecewise polynomials through the Fourier transform.

A fundamental result for practical applications is the Sampling Theorem. The theorem deals with functions that are bandlimited, i.e. whose Fourier transform is nonzero only on an interval of finite length, say \(\mathcal{F}g\left( \omega \right) =0\) for \(\left\vert \omega \right\vert {\gt}L\). In this case the whole function (signal in engineering terms) can be reconstructed from countably many discrete samples, namely the function values \(g\left( k\pi /L\right) \) for \(k\in \mathbb {Z}\), as

Compare (6.5), where a bandlimited function is represented using the sinc function and its translates, to (4.1), where a piecewise polynomial over the integers is represented by the B-spline \(B\) and its integer translates. While the convergence in (6.5) is very slow, evaluation is quickly done in (4.1) due to compact support and the recursion formula. The coefficients in (4.1), however, are no simple samples of the given spline function. In Schoenberg’s already mentioned first spline paper in 1946 [ 38 ] , the series (6.5) was the starting point for his studies of spline series like (4.1).

6.1 The Fourier transform of the B-spline \(B\) (Item 2.9)

Let us start by computing the Fourier transform of our cubic B-spline \(B\). Splitting up the complex-valued function \(h_{\omega }\left( x\right) =e^{-i\omega x}\) into its real and imaginary part, we find that we can actually use the Peano kernel property (5.8) and item 2.7 also for this function to obtain

We have

and thus

and

This way we obtain that the Fourier transform of \(B\) is a power of a dilated sinc function:

Since \(B\) is continuous, due to (6.1), taking the inverse Fourier transform yields item 2.9.

6.2 Convolutions (Item 2.10)

The occurrence of the 4-th power of the sinc-function for a cubic B-spline (order = 4) is of course no accident. Starting with piecewise constants, namely the characteristic function \(M_{1}\) of the halfopen interval \(\left[ -\frac{1}{2},\frac{1}{2}\right) \) already presented in (4.15), i.e.,

we define recursively for \(n\geq 2\), using convolution,

Check that this recursive definition is actually consistent with the piecewise definitions in (4.12) and (4.8).

Since the Fourier transform of \(M_{1}\) is

the Fourier transforms of \(M_{n}\ \)are due to convolution property (6.2)

While \(M_{1}\) still has two jumps, we see from (6.9) that the smoothness of \(M_{n}\) increases by one with each convolution, i.e. \(M_{n}\in C^{n-2}\left( \mathbb {R}\right) \). Thus taking the inverse transform establishes for \(n\geq 2\)

implying of course \(M_{4}=B\).

Since the sinc-function is even, we can remove the complex exponential in the integrand and write

Integrals of this form have been investigated for a long time by a lot of mathematicians independent of spline theory, see [ 7 ] for a historical review.

We have already seen in (4.12) that for the hat function \(N\) we have \(N=M_{2}\). This establishes, as the convolution is associative, that

producing item 2.10.

6.3 A moving unit impulse (Item 2.11)

In [ 28 ] it is described how B-splines can be generated recursively by intersecting the graph of a lower order B-spline with a unit impulse moving along the coordinate axis, as derived in [ 36 ] . In more explicit terms this would mean the following.

Moving a unit square along the\(\ x\)-axis with its center at the point \(\left( x,\frac{1}{2}\right) \) means that its four corners lie at \(\left( x-\frac{1}{2},0\right) \), \(\left( x+\frac{1}{2},0\right) \), \(\left( x+\frac{1}{2},1\right) \), \(\left( x-\frac{1}{2},1\right) \) for a given \(x\in \mathbb {R}\). The area \(A(x)\) of the region cut out of the square by the graph of the hat function \(N(x)\) is then nothing but the definite integral of the hat function between the limits of integration \(x-\frac{1}{2}\) and \(x+\frac{1}{2}\):

Thus a repetition of the process using \(A(x)\) instead of \(N(x)\) produces item 2.11:

7 Subdivision

7.1 The refinement equation (Item 2.12)

A simple dilation of the B-spline series (4.1) by a factor of 2 yields that any piecewise cubic polynomial function over the half integers \(\frac{1}{2}\mathbb {Z}\) that lies in \(C^{2}(\mathbb {R})\) can be represented by the sum

Clearly the B-spline \(B\) qualifies as such a function, the knots \(\frac{1}{2}+\mathbb {Z}\) are just not active as \(B\) is a piecewise function over the knots \(\mathbb {Z}\). So there must be coefficients \(p_{k}\), called two-scale coefficients, such that

The specific functional equation for the refinement of \(B\) – as given in item 2.12 – can now be established using the Fourier transform of \(B\), indicating also the corresponding ones for all the functions \(M_{n}\).

Note that in 2.12 we can compute the values of any solution function \(f\) in the points \(\frac{1}{2}+\mathbb {Z}\) from the prescribed values in \(\mathbb {Z}\) using the functional equation. Repeating this process, the values in \(\frac{1}{4}+\frac{1}{2}\mathbb {Z}\) can be computed from those in \(\frac{1}{2}+\mathbb {Z}\) and so forth, so that the values of \(f\) are established for all arguments of the form \(\frac{k}{2^{r}}\). The continuity of f takes care of the remaining rational numbers and all irrational ones, ensuring that there can be only one continuous solution function of the functional equation with the prescribed function values in the integers.

So we take the Fourier transforms in (7.1) to obtain

A dilation by the factor 2 in the function results in a factor of 1/2 in the Fourier transform, the translation by an integer \(k\) in an additional factor of \(e^{-ik\frac{\omega }{2}}\), yielding in total

Denoting \(z=e^{-i\frac{\omega }{2}}\), the function

is called the symbol of the refinable function and its investigation is one of the cornerstones in subdivision theory (see for example [ 8 ] and the tutorial [ 20 ] ) and wavelet analysis (see for example [ 14 ] , [ 11 ] ) to derive properties of the function obeying a refinement equation with given two-scale coefficients.

Our task here is much easier since we have already computed the Fourier transform of \(B\) before and can just derive \(P\) by division, i.e.,

Thus the two-scale coefficients for \(B\) are

Note that the famous Daubechies functions [ 14 ] are derived from splines by starting from the spline symbol \(P(z)\) and multiplying by another term \(S(z)\) chosen to achieve orthogonality of the translates of the refinable function.

7.2 Subdivision curves (Item 2.13)

How are the coefficients related when going from the piecewise cubics over the integers \(\mathbb {Z}\) to the half integers \(\frac{1}{2}\mathbb {Z}\)? In other words given a function

we know that \(g\) can also be written as

so the question is what the relationship is between the coefficients \(c_{k}^{0}\) and \(c_{k}^{1}\). Using the coefficients of the symbol \(P\), also called the mask coefficients, we find with item 2.12 that

Since the representations are unique, we obtain

Splitting up in even and odd indices and using the fact that only five coefficients \(p_k\) are nonzero results in

Clearly we can iterate this procedure and obtain

with

A straightforward way to approximate the graph of the function, i.e. the set of pairs \(\left( x,g\left( x\right) \right) \) for all \(x\in \mathbb {R}\), is to draw the polygon connecting the points \(P_{k}^{0}=\left( k,c_{k}^{0}\right) \) k for all \(k\in \mathbb {Z}\) instead. Note that the polygon does not interpolate, since we take the \(k\)-th coefficient of the B-spline expansion as the second component, not the function value of \(g\) at point \(k\).

A finer polygon and hopefully better approximation is then obtained by connecting the points \(P_{2k}^{1}=\left( k,c_{2k}^{1}\right) \) for all \(k\in \frac{1}{2}\mathbb {Z}\) and so forth for all \(r\in \mathbb {N}\): \(P_{2^{r}k}^{r}=\left( k,c_{2^{r}k}^{r}\right) \) for all \(k\in \frac{1}{2^{r}}\mathbb {Z}\). We contend that the polygons actually converge to the graph of the function. Indeed we have for all \(k\in \mathbb {Z}\)

Using the \(r\)-th level representation of \(g\) we find

and thus

Using the difference operator for biinfinite sequences \(c=\{ c_{\ell }\} _{\ell \in \mathbb {Z}}\) defined by \(\left( \Delta c\right) _{\ell }=c_{\ell +1}-c_{\ell }\), we thus get

It now suffices to investigate how the differences of consecutive levels are related. We have

and

obtaining

and thus by iteration

We have thus established that for a given fraction \(\frac{k}{2^{r_{1}}}\), the coefficients \(c_{k2^{r-r_{1}}}^{r}\) converge to \(g\left( \frac{k}{2^{r_{1}}}\right) \) for \(r\rightarrow \infty \), and the continuity of \(g\) takes care that the sequence of polygons converge to the graph of \(g\) as a whole. In item 2.13 we have specifically chosen the initial coefficients \(c_{k}^{0}=\delta _{k,0}\), and thus \(g=B\), so that the polygons converge to the graph of \(B\) itself.

The study of subdivision curves in Computer Aided Geometric Design started with Chaikin [ 9 ] , but the idea dates back to de Rham in 1947 [ 34 ] . In the original process, called corner cutting, the rules for the coefficients, and thus the corresponding rules for the control points \(P_{2\ell }^{r}\) and \(P_{2\ell +1}^{r}\), are not given by (7.4) and (7.5), but instead by

Compute for a given initial polygon a few steps of the algorithm and draw the resulting polygons to see why the procedure is called corner cutting.

While in our notation and terminology it is fairly easy to see that the coefficients involved are in fact the two-scale coefficients of the piecewise quadratic B-spline \(M_{3}\), and thus the corresponding polygons converge to the piecewise quadratic function \(\sum _{k}c_{k}^{0}M_{3}\left( x-k\right) \), this was not known when the algorithm was first described.

Subdivision procedures are also available for surfaces, and even more important there. I would like to refer those who are interested to find out more to the tutorials by Sabin [ 37 ] . At this point let me just mention that these surfaces are now the state of the art in computer animation movies [ 16 ] .

8 Probability

8.1 An urn model (Item 2.14)

In Subsection 7.1 we have encountered binomial coefficients in the two-scale coefficients of B-splines. Binomial coefficients can be derived from Pascal’s triangle, which is related to urn models in probability. So it should not come as a great surprise that we can link the B-spline to urn models as well.

We consider an urn model introduced in very general form already by Friedman [ 24 ] . Initially the urn contains \(w\) white balls and \(b\) black balls. One ball at a time is drawn at random from the urn and its color is inspected. It is then returned to the urn and a constant number \(c_{1}\) of balls of the same color and a constant number \(c_{2}\) of balls of the opposite color are added to the urn.

The aim is to study the probability of selecting exactly \(K\) white balls in the first \(N\) trials. It turns out (see Goldman [ 25 ] , where this material is taken from) that this probability can be described in dependence on three parameters: the probability of selecting a white ball on the first trial and the percentages of balls of the same and of the opposite color added to the urn after the first trial.

Using the notation

for the probability of selecting a white ball on the first trial, we use the term \(B_{K}^{N}\left( t\right) \) for the probability of selecting exactly \(K\) white balls in the first N trials.

For the simplest case of \(c_{1}=c_{2}=0\) we obtain the binomial distribution for sampling with replacement, i.e.

The case \(c_{1}\neq 0,\ c_{2}=0\) corresponds to classical Polya-Eggenberger models [ 21 ] .

The urn models for the cases \(c_{2}=\omega +b+c_{1}\) are called spline (!) models,and we will just look at the simplest case \(c_{1}=0,\ c_{2}=\omega +b\). This means that the number of balls after the first \(N\) trials is always \((N+1)(w+b)\), but the number of white and black balls is determined by the outcome of those trials.

We introduce two more probabilities (dependent on \(t\)):\(f_{K}^{N}\left( t\right) \) as the probability of selecting a black ball after selecting exactly \(K\) white balls in the first \(N\) trials and \(s_{K}^{N}\left( t\right) \) as the probability of selecting a white ball after selecting exactly K white balls in the first \(N\) trials.

We can now set up a recursion for the terms \(B_{K}^{N}\left( t\right) \). After the trial number \(N+1\), we can only have \(K\) white balls in two mutually exclusive cases:

if we already have drawn \(K\) white balls after trial \(N\) and we draw a black ball in the last trial,

if we have \(K-1\) white balls after trial \(N\) and draw a white ball in the last trial.

In probability terms this means

In case i) the number of black balls after the first \(N\) trials is \(b+K(w+b)\), while in case ii) the number of white balls after the first \(N\) trials is \(w+\left( N-K+1\right) (w+b)\). Thus we obtain

and the specific recursion for \(K=0,\ldots ,n\)

The initial conditions are

and we see that we get hat function as

By induction we can derive a closed formula for the functions \(B_{K}^{N}\) based on the recursion (8.1), namely

We see that the formula is correct for \(N=1\). By the recursion (8.1) and the induction hypothesis, we get

with

establishing the desired formula (8.2).

We now define a piecewise polynomial function \(U_{N}\) by setting for \(0\leq K\leq N\) and \(x\in \left[ K-\left( N+1\right) /2,K+1-\left( N+1\right) /2\right] \)

Invoking the truncated power function \(x_{+}\), we obtain, similar to (5.7), for all \(x\in \mathbb {R}\)

yielding \(U_{3}=B\) and item 2.14 for \(N=3\).

8.2 A probability density function (Item 2.15)

In subsection 4.2 and 5.3 we have observed the following two properties of the spline \(B\):

Thus the function \(B\) qualifies as a probability density function in the sense that we can investigate a real random variable \(T\) for which the probability \(P\) that an observed value of \(T\) lies between \(a\) and \(b\) is given by

Let us look a little bit more into this. Note first that \(M_{1}\) also satisfies

and thus can be interpreted as well as a probability density function of a real random variable \(X_{1}\).

The convolution property (6.2) yields that \(B=M_{4}\) is the probability density function of the sum \(Y=X_{1}+X_{2}+X_{3}+X_{4}\), where each independent real random variable \(X_{j}\) has the density function \(M_{1}\). So what kind of experiment does this density function \(M_{1}\) represent, producing

In fact, when rounding, let for any \(x\in \mathbb {R}\) denote \(r\left( x\right) \in \mathbb {Z}\) the integer which closest to \(x\), i.e., using the largest integer function \([[ \cdot ]]\):

This implies of course that

is the error when replacing the value \(x\) by its closest integer \(r(x)\), resolving item 2.15. This interpretation was given by Schoenberg in 1946 [ 38 ] , but it turns out (see [ 7 ] for a historical account) that such probability distributions were studied by a number of people, resulting in approaches quite different from the ones we have taken so far and which we will examine in the following.

8.3 Sommerfeld’s approach in 1904 (Items 2.16 and 2.17)

Starting from the sum \(\sum _{j=1}^{n}X_{j}\) , where each real random variable \(X_{j}\) represents the error when replacing a real number by its closest integer, Sommerfeld in 1904 [ 43 ] wanted to investigate the limit behaviour if the number \(n\) of terms goes to infinity. We will have a look how he set out to compute the probability density functions explicitly for the first few values of n. We will refer to the corresponding probability density functions as \(M_{n}\) and follow Sommerfeld’s approach, thus ignoring the closed formulae we are able to derive by convolution.

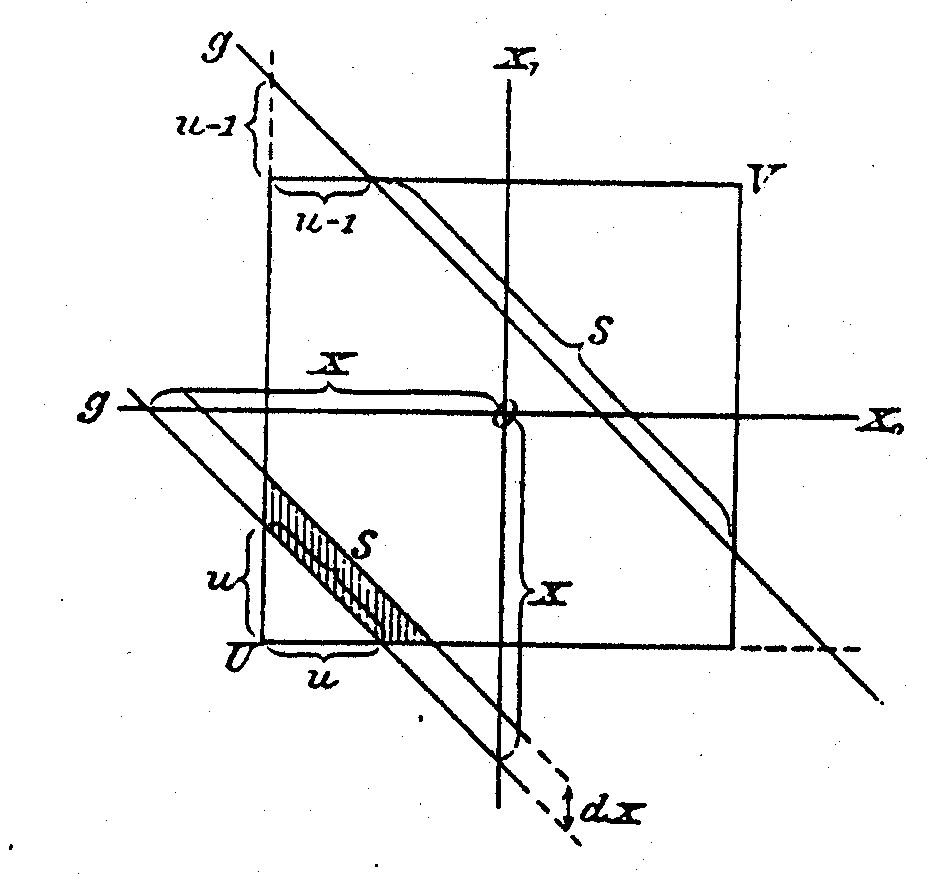

We have already covered \(M_{1}\), so we can continue with \(M_{2}\), i.e., the sum \(X_{1}+X_{2}\). Figure 3 is taken directly from Sommerfeld’s paper. Working in \(\mathbb {R}_{2}\) with axes \(x_{1}\) and \(x_{2}\), all possible outcomes for \(X_{1}\) and \(X_{2}\) are represented by pairs of numbers lying in a unit square centered at the origin, i.e., \(Q_{2}=\left[ -\frac{1}{2},\frac{1}{2}\right] \times \left[ -\frac{1}{2},\frac{1}{2}\right] \). All outcomes \(\left( x_{1},x_{2}\right) \) resulting in the same sum \(x\) lie on the line \(x_{1}+x_{2}=x\) . Anyway, for small \(dx{\gt}0\) (the negative case is handled analogously) we are looking for the area of the region cut out of the square \(Q_{2}\) by the strip \(S\left( x,x+dx\right) \) between the two lines \(x_{1}+x_{2}=x\) and \(x_{1}+x_{2}=x+dx\) in the sense that

The width of the strip \(S\left( x,x+dx\right) \) as the distance between the two lines is \(dx/\sqrt{2}\), so that

where \(S_{2}\left( x\right) \) denotes the length of that part of the line \(x_{1}+x_{2}=x\) which lies within the square \(Q_{2}\), since \(Q_{2}\cap S_{2}\left( x,x+dx\right) \) is a trapezoid.

We obtain therefore that

Thus our task is now reduced to finding a formula for \(S_{2}\left( x\right) \). The line intersects the square iff \(-1\leq x{\lt}1\), so \(S_{2}\left( x\right) =0\) for \(\left\vert x\right\vert \geq 1\): To simplify we introduce \(u=1+x\), so that we still have to investigate the case \(0\leq u{\lt}2\). Note that \(u\) is the distance from the corner of \(\left( -\frac{1}{2},-\frac{1}{2}\right) \) of \(Q_{2}\) to the point \(\left( x+\frac{1}{2},-\frac{1}{2}\right) \), where the line \(x_{1}+x_{2}=x\) intersects the line containing that side of \(Q_{2}\), which originates at \(\left( -\frac{1}{2},\frac{1}{2}\right)\) and is parallel to the \(x_{1}\)-axis (the same for \(\left(-\frac{1}{2} ,x+\frac{1}{2}\right)\) and the side parallel to the \(x_{2}\)-axis).

For \(0\leq u{\lt}1\) the point of intersection lies within \(Q_{2}\) and so the right triangle with the vertices \(\left( -\frac{1}{2},-\frac{1}{2}\right) ,\left( x+\frac{1}{2},-\frac{1}{2}\right) ,\) \(\left( -\frac{1}{2},x+\frac{1}{2}\right) \) has a hypotenuse of length \(S_{2}\left( x\right) \) and the other two sides are both of length \(u\). Thus by Pythagoras, since \(S_{2}\left( x\right) \) is nonnegative:

For \(1\leq u{\lt}2\) the intersection point\(\left( x+\frac{1}{2},-\frac{1}{2}\right) \) lies outside of \(Q_{2}\), so the length of the hypotenuse \(\sqrt{2}u\) of the triangle with vertices \(\left( -\frac{1}{2},-\frac{1}{2}\right) ,\) \(\left( x+\frac{1}{2},-\frac{1}{2}\right) \) and \(\left( -\frac{1}{2},x+\frac{1}{2}\right) \) is in fact too long and we must subtract the length of the hypotenuses of the two small right triangles, one with vertices \(\left( -\frac{1}{2},\frac{1}{2}\right) ,\left( -\frac{1}{2},x+\frac{1}{2}\right) \) and \(\left( x-\frac{1}{2},\frac{1}{2}\right) \), the other with vertices \(\left( \frac{1}{2},-\frac{1}{2}\right) \), \(\left( x+\frac{1}{2},-\frac{1}{2}\right) \) and \(\left( \frac{1}{2},x+\frac{1}{2}\right) \), whose other sides have both length \(u-1\). This yields

so that we obtain, just as we should, \(M_{2}\left( x\right) =\frac{S_{2}\left( x\right) }{\sqrt{2}}=N\left( x\right) \).

We proceed now to \(M_{3}\) and the sum \(X_{1}+X_{2}+X_{3}\). Again Figure 4 is the original one from Sommerfeld’s paper. Now the outcomes lie in the unit cube \(Q_{3}=\left[ -\frac{1}{2},\frac{1}{2}\right) \times \left[ -\frac{1}{2},\frac{1}{2}\right) \times \left[ -\frac{1}{2},\frac{1}{2}\right) \) and all outcomes with the same sum \(x\) on the plane \(x_{1}+x_{2}+x_{3}=x\): Arguing as before for two variables, this time with the strip between the two planes for \(x\) and \(x+dx\), we find that the strip has width \(dx/\sqrt{3}\) and

where \(S_{3}\left( x\right) \) is the area of the piece of the plane \(x_{1}+x_{2}+x_{3}=x\) that lies within the cube \(Q_{3}\). This time we have intersection iff \(-\frac{3}{2}\leq x{\lt}\frac{3}{2}\), so \(S_{3}\left( x\right) =0\) for \(\left\vert x\right\vert \geq \frac{3}{2}\). The substitution \(u=\frac{3}{2}+x\) introduces again the length of the (now three) segments from the corner \(\left( -\frac{1}{2},-\frac{1}{2},-\frac{1}{2}\right) \) to the intersections of the lines containing a side of the cube originating at this corner with the plane \(x_{1}+x_{2}+x_{3}=x\).

![\includegraphics[scale=1.2]{figpage38.jpg}](https://ictp.acad.ro/jnaat/journal/article/download/1099/version/1021/1378/3081/img-0004.jpg)

For \(0\leq u{\lt}1\) these intersection points \(\left( x+1,-\frac{1}{2},-\frac{1}{2}\right) , \left( -\frac{1}{2},x+1,-\frac{1}{2}\right) \) and \(\left( -\frac{1}{2},-\frac{1}{2},x+1\right) \) lie within the cube and \(S_{3}\left( x\right) \) is the area of the equilateral triangle with these three vertices, so that its side length is \(\sqrt{2}u\) and thus

For \(1\leq u{\lt}2\), the intersection is a hexagon, obtained from the triangle with vertices \(\left( x+1,-\frac{1}{2},-\frac{1}{2}\right) ,\left( -\frac{1}{2},x+1,-\frac{1}{2}\right) ,\left( -\frac{1}{2},-\frac{1}{2},x+1\right) \) by removing small equilateral triangles of length \(\sqrt{2}\left( u-1\right) \) at the corner, for example the one with the vertices \(\left( x+1,-\frac{1}{2},-\frac{1}{2}\right) ,\left( -\frac{1}{2},x,-\frac{1}{2}\right) ,\left( -\frac{1}{2},-\frac{1}{2},x\right) \), yielding

For \(2\leq u{\lt}3\), the intersection is again a triangle. Instead of computing its area directly, we observe that by taking away the triangles as we did for \(1\leq u{\lt}2\), we are now removing too much and have to add in again 3 triangles at each corner of side length \(\sqrt{2}\left( u-2\right) \) to obtain

Finally, we consider \(M_{3}\ \)and the sum \(X_{1}+X_{2}+X_{3}+X_{4}\). We find

where \(S_{4}\left( x\right) \) is the (3-dimensional) volume of the piece of the hyperplane \(x_{1}+x_{2}+x_{3}+x_{4}=x\) that lies within the (4-dimensional) cube \(Q_{4}=\left[ -\frac{1}{2},\frac{1}{2}\right) ^{4}\). With the substitution \(u=2+x\), we obtain a regular tetrahedron as the intersection for \(0\leq u{\lt}1\), yielding

For \(1\leq u{\lt}2\), we obtain an octahedron, resulting from cutting four small tetrahedra at the 4 corners of the original tetrahedron, in order to get

For \(2\leq u{\lt}3\), we still obtain an octahedron, but the four tetrahedra added in the previous step are overlapping now. As a compensation we have to add tetrahedra again, one for each edge of the original tetrahedron, i.e. a total of six to obtain

Finally, for \(3\leq u{\lt}4\) the intersection is a tetrahedron again, and we need to take away again a tetrahedron for each face of the original tetrahedron, so that

A quick check shows that the function derived as \(M_{4}\) is indeed the originally introduced \(M_4\) and thus \(B\), establishing representation 2.16.

Sommerfeld was the first one (as far as I know) to present a graph of \(B\) alias \(M_{4}\), together with those for \(M_{1},M_{2}\) and \(M_{3}\) in his original paper from 1904, which is given in Figure 5, resolving item 2.17.

Note also that the formulae given for the pieces of the function \(S_{4}\) are divided differences of the truncated power functions

yielding also

We will not sketch here how Sommerfeld pursued his original goal of investigating the limit behaviour, let it suffice to remark that indeed properly normalized B-splines tend to Gaussian (i.e., exponential) functions, if we let the polynomial degree go to infinity. Since Sommerfeld did not give a closed formula expression for his probability density functions \(M_{n}\), there were further attempts to shed some light on this problem, which did not become so widely known.

![\includegraphics[scale=0.37]{fig_4_1.jpg}](https://ictp.acad.ro/jnaat/journal/article/download/1099/version/1021/1378/3082/img-0005.jpg)

![\includegraphics[scale=0.37]{fig_4_2.jpg}](https://ictp.acad.ro/jnaat/journal/article/download/1099/version/1021/1378/3083/img-0006.jpg)

![\includegraphics[scale=0.37]{fig_4_3.jpg}](https://ictp.acad.ro/jnaat/journal/article/download/1099/version/1021/1378/3084/img-0007.jpg)

![\includegraphics[scale=0.37]{fig_4_4.jpg}](https://ictp.acad.ro/jnaat/journal/article/download/1099/version/1021/1378/3085/img-0008.jpg)

![\includegraphics[scale=0.38]{fig_4_5.jpg}](https://ictp.acad.ro/jnaat/journal/article/download/1099/version/1021/1378/3086/img-0009.jpg)

9 And something from Norway, too !

9.1 Brun 1932 (Item 2.18)

This section is devoted to the efforts of two Norwegian mathematicians, whose contributions and biographies are described in [ 7 ] . The first one is V. Brun, who is also the man who rediscovered Abel’s long lost original manuscript on transcendental functions, which Abel had submitted to the Academy in Paris in 1826. The text was finally printed in 1841, but the original was considered lost until Brun rediscovered it in Firenze in 1952. There were 8 pages missing, however, but that is another story, so back to our subject.

In his paper [ 6 ] in 1932, Brun had another look at the problem of the density functions \(M_{n}\) as considered by Sommerfeld. He had the idea of relating these functions by an integral recursion corresponding to the convolution (6.9). He then found the following representation for \(M_{1}\): introducing the jump function

we have

so that \(\frac{1}{\pi }\Delta Q\) and \(M_{1}\) only differ in the two points \(\pm 1/2\), which is irrelevant for integration, yielding

and thus by iteration

i.e. representation 2.18. Brun then used \(\frac{1}{\pi }\Delta Q\) and the integral recursion to establish the functions \(M_{n}\) in the integral form (6.10), and gave some quadrature formulae for the integration.

9.2 Fjelstaad 1937 (Items 2.19 and 2.20)

In his paper [ 6 ] , Brun stated: Vi ser herav det hpløse i fremstille \(\varphi _{n}\left( x\right) \) efter potenser av \(x\) (We see from here how hopeless it is to represent \(\varphi _{n}\left( x\right) \) [his notation for \(M_{n}\left( 2x\right) \)] in powers of \(x\)). According to the introduction in [ 23 ] , this spurred on another Norwegian, J. E. Fjelstaad, to derive in fact such a representation from the integral form (6.10), bringing us to the end of our cubic B-spline tour.

Replacing \(x\) by \(x/2\) we obtain from (6.10)

Integration by parts yields then formula

so we obtain the double integral formula

We compute that for the integral

we have

One integration by parts yields

and yet another one

Thus we obtain

establishing item 2.19.

From here we want to proceed by using partial fractions. The zeros in the denominator are \(i\left( x+4-2k\right) \), \(k=0,1,2,3,4\) and we have

where the factors \(A_{k}\) can be computed to be

Since

we have

Integration from \(0\) to \(z\) yields

Earlier we have already used limit properties of \(\arctan \) in (9.4), here we see that

so we finally establish

and

or

establishing item 2.20. In the general case - which is of course the one considered in [ 23 ] , the signum function appears for odd polynomial orders (even degrees) in the sense that

10 An Outlook

A good way to wrap up our treatment now is to consider the general definition of a B-spline of arbitrary degree and make some comments in light of the 20 representations we have covered. For a more detailed introduction with examples and references I would like to refer to a recent tutorial [ 33 ] , standard spline monographs such as [ 3 ] , [ 42 ] and for the cardinal setting [ 40 ] , and specifically for CAGD [ 22 ] , [ 28 ] .

Schoenberg wrote his book [ 40 ] mainly about interpolation of infinite data by spline series of the form (4.1) for arbitrary polynomial degree. Still, in practical applications we regularly need to address situations with finitely many data points given over some finite interval, introducing the nontrivial problem of handling boundaries.

At first, let us check that divided differences can be defined recursively not just for simple distinct knots, but also for coalescing knots in the following way.

(Divided differences) Provided that a function \(f\) is smooth enough so that all occurring derivatives are defined, the divided

difference of order \(\ell \) for the function \(f\) in the points \(t_{k}\leq \ldots \leq t_{k+\ell }\) is defined recursively as

and

We now start from a knot sequence \(\mathbf{t}\) of non-decreasing knots \(t_{k}\), which will serve as the breakpoints for the polynomial pieces

To define B(asis)-splines of a given degree \(d\geq 0\), we need at least \(d+1\) knots and \(t_{k}{\lt}t_{k+d+1}\) for all possible \(k\) to avoid functions identically zero.

(B-spline) For a given knot sequence \(\boldsymbol {t}\) the \(k\)-th

B-spline of degree \(d\) is defined as the divided difference with respect to \(y\) of the truncated power function \(\left( y-x\right) _{+}^{d}\) in the knots \(t_{k},\ldots ,t_{k+d+1}\), multiplied by the distance of the last and first knot in this subsequence, i.e.,

At this point we have to say a bit more about knot sequences. There are two main settings. One is a biinfinite sequence of simple knots \(t_{k},k\in \mathbb {Z}\), with the most common example being the integers. For this cardinal setting \(\boldsymbol {t}_{C}:t_{k}=k\), we have of course

The other main setting is a finite knot sequence for a closed and bounded interval \(\left[ a,b\right] \), typically a standard so-called \((d+1)\)-regular knot sequence where, with \(n\geq d+1\),

It must be strongly emphasized – although here we have mostly looked at just one spline function \(B\) – that it is the set of all B-splines over a knot sequence that is needed in applications, such as manipulating a curve or solving a differential equation by collocation.

Based on Leibniz’ formula for divided differences

one can establish a general recursion for B-splines (see item 2.4). With the B-splines of the initial level \(d=0\), i.e., piecewise constants, defined as

we have the following recursion for \(d\geq 1\)

and thus triangular schemes for the evaluation of splines of the form

analogous to the one introduced in subsection 3.6.

The continuity of a function of the form (10.2) is more tricky to describe. If all knots are simple, the polynomial pieces are glued together with continuity \(d-1\). For a multiple knot, i.e., a number which occurs repeatedly in the knot sequence, the continuity in this breakpoint is reduced by the multiplicity of the knot. For a more detailed discussion see [ 33 ] . The flexibility of different orders of smoothness in different breakpoints is especially important in curve design for CAD/CAM.

The divided difference formulation (see item 2.5) shows that each B-spline is a piecewise polynomial as a linear combination of truncated powers. One can establish by induction, using the recursion (10.1), that

Using Peano’s theorem as in item 2.7, we can derive that the integral of a B-spline is given as

Note that the normalization factor in the B-spline definition is chosen to achieve a partition of unity, and we must change it to \(d+1\) to get spline functions that qualify as probability density functions (item 2.15). Only in the cardinal case we get both properties at the same time.

As for item 2.2, we can show the basis property of the set of B-splines, namely that all piecewise polynomials of degree \(d\), with smoothness in the breakpoints prescribed by the knot multiplicities, can be written in the form (10.2). This allows us also to show that the B-splines have smallest support under the given smoothness requirements. Also as in item 2.2, we can derive that the monomials can be represented as sums of the form (10.2), with coefficients given in terms of symmetric functions. Specifically, one gets that the B-splines form a partition of unity. The necessary general tool to show polynomial representation is the so-called Marsden identity [ 31 ] .