Convergence of the θ-Euler-Maruyama method for a class of Stochastic Volterra Integro-Differential Equations

Abstract.

This paper addresses the convergence analysis of the θ-Euler-Maruyama method for a class of stochastic Volterra integro-differential equations (SVIDEs). First, we discuss the existence, uniqueness, boundedness and Hölder continuity of the theoretical solution. We then establish the strong convergence order of the θ-Euler-Maruyama method for SVIDEs. Finally, we provide numerical examples to illustrate the theoretical results.

Key words and phrases:

Stochastic Volterra integro-differential equations, θ-Euler-Maruyama method, strong convergence, Hölder continuity.

2005 Mathematics Subject Classification:

65C30, 60B10, 65L20.

1. Introduction

Stochastic differential equations (SDEs) have attracted significant attention and are currently emerging as a modeling tool in various scientific fields, including but not limited to telecommunications (see [15]), economics, finance (see [5]), biology, chemistry, and quantum field theory.

Volterra integral equations (VIEs) were introduced by Vito Volterra. Traian Lalescu later studied them in his 1908 thesis “Sur les équations de Volterra”, written under the direction of Émile Picard. Volterra integral equations find applications in viscoelastic materials, fluid mechanics, and demography (see, e.g., [10], [3], [17], [8], [7]).

Stochastic Volterra integral equations (SVIEs) extend ordinary Volterra integral equations to include random noise, which makes them suitable for modeling systems with stochastic components. SVIEs are applied in mathematical finance, biology, physics, and engineering. In finance, for example, SVIEs are used to model the evolution of asset prices over time while accounting for the random nature of market movements. In biology, they can describe population dynamics subject to random environmental factors. Consequently, SVIEs have attracted the attention of many researchers in recent years (see, for instance, [10], [17], [8], [7]).

Solving stochastic differential equations (SDEs) exactly remains a challenging problem (cf. [6], [16], [3], [11], [4], [17], [13], [7]). Although many analytical methods are available, the complexity of these equations generally makes exact solutions difficult to obtain, which motivates numerical approximation. Numerical methods include the Milstein method, Runge-Kutta methods (see [1]), the Euler-Maruyama method, the stochastic theta method, and others (see [10], [9], [12], [2], [17]). Zong et al. [18] studied the convergence and stability of two classes of theta-Milstein schemes for stochastic differential equations.

Recently, Deng et al. [4] examined the semi-implicit Euler method for nonlinear time-changed stochastic differential equations. Wang et al. [14] investigated stochastic theta methods (STMs) for SDEs with non-globally Lipschitz drift and diffusion coefficients. Zhang et al. [17] carried out a numerical analysis of the Euler-Maruyama (EM) method for the following generalized SVIDEs:

Lan et al. [7] presented the θ-EM method for the following SVIDEs:

Motivated by the works above [4, 14, 17, 7], in this paper we study the strong convergence of the θ-Euler-Maruyama method for a class of stochastic Volterra integro-differential equations (SVIDEs) of the following form:

| (1.1) |

with initial condition , where and are given functions. The kernels are continuous on with the norm for . Here, is a stochastic process defined on the probability space , and is a standard one-dimensional Brownian motion defined on the same probability space. Moreover, .

The paper is organized as follows. Section 2 introduces fundamental notation and preliminaries. In Section 3, we give the definition of a solution of equation (1.1) and investigate the existence, uniqueness, boundedness and Hölder continuity of the analytical solution. Section 4 presents the θ-EM method for equation (1.1) and establishes its order of strong convergence. Finally, Section 5 provides numerical examples that illustrate the theoretical results.

2. Preliminaries

Let be a complete probability space with a filtration satisfying the usual conditions , and let denote the expectation corresponding to . A one-dimensional Brownian motion defined on this probability space is denoted by . Let be the family of Borel measurable functions such that for every . We denote by the family of -valued -adapted processes such that , and let be the family of adapted processes such that . For . If is a subset of , its indicator function is denoted by . Equation (1.1) can be written in integral form as follows:

| (2.2) |

Before introducing the definition of the solution, we assume that , where

Definition 2.1.

Definition 2.2.

Let . A stochastic process is said to be Hölder continuous with exponent if there exists a constant M such that

Throughout this article, we impose the following hypotheses.

(1) (Lipschitz condition). Assume that there exists a positive constant such that

for .

(2) (Linear growth condition). For

where .

(3) (Mean value theorem). Assume that the coefficients for of (1.1) satisfy

with for all and

where .
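The Lipschitz condition (1) and the linear growth condition (2) are closely related. The sketch below assumes both take the usual squared form for a generic coefficient φ. Here φ, K and the constant written as K-bar are our own notation, since the exact displays of (1) and (2) are not reproduced here. It also assumes that sup over t of |φ(t,0)|^2 is finite. Under these assumptions, a global Lipschitz bound already implies a linear growth bound, using only the triangle inequality and (u+v)^2 ≤ 2u^2 + 2v^2:

```latex
% Assumed forms (generic coefficient \varphi, Lipschitz constant K):
%   |\varphi(t,x)-\varphi(t,y)|^2 \le K\,|x-y|^2, \qquad \sup_{t\in[0,T]}|\varphi(t,0)|^2 < \infty.
\begin{aligned}
|\varphi(t,x)|^2
  &\le \bigl(|\varphi(t,x)-\varphi(t,0)| + |\varphi(t,0)|\bigr)^2 \\
  &\le 2\,|\varphi(t,x)-\varphi(t,0)|^2 + 2\,|\varphi(t,0)|^2 \\
  &\le 2K\,|x|^2 + 2\sup_{s\in[0,T]}|\varphi(s,0)|^2
   \;\le\; \bar K\,\bigl(1+|x|^2\bigr),
\end{aligned}
\qquad
\bar K := \max\Bigl\{2K,\; 2\sup_{s\in[0,T]}|\varphi(s,0)|^2\Bigr\}.
```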

3. Theoretical analysis of the class of SVIDEs

In this section, we present the theoretical results: we establish the existence and uniqueness of the solution to (2.2) and verify the Hölder continuity of the analytical solution.

3.1. The existence and uniqueness of the analytical solution

We now discuss the existence and uniqueness of the solution of equation (2.2), starting with the following lemma.

Lemma 3.1.

Assume that () is satisfied. If is a solution of the SVIDE (1.1), then and such that

| (3.3) |

where depends on and .

Proof.

For every integer , let be a stopping time such that

Evidently, a.s. by letting . Define for . It can be verified that satisfies

By Cauchy’s inequality, the Itô isometry, and the inequality , we obtain that

Using Cauchy’s inequality and the Itô isometry, we show that

| (3.4) |

where

and

First, we estimate . Using Cauchy’s inequality and assumption (), we get

| (3.5) | ||||

Second, we estimate . By () and the Itô isometry, we get

| (3.6) | ||||

By using Gronwall’s inequality, we have

where

and

Since for , letting , we conclude that , that is,

Theorem 3.1.

Proof.

We divide the proof into two steps.

Step I. Uniqueness.

Let and be two solutions of (1.1). From Lemma 3.1, we have . By (), Cauchy’s inequality and the Itô isometry, we show that

Finally, from Gronwall’s inequality we conclude that

which proves that for every .

Step II. Existence.

Let and define the Picard approximations

| (3.7) |

for and . It is evident that , and by induction we also get . Using the proof of Lemma 3.1, we have

where and are the constants from Lemma 3.1. Thus, for each , we have

By Gronwall’s inequality, we obtain

where . Since is arbitrary, we conclude

| (3.8) |

We claim that for ,

| (3.9) |

By induction, it suffices to show that (3.9) still holds for . Note that

| (3.10) |

By Chebyshev’s inequality, we get

Since , the Borel-Cantelli lemma yields

With probability , it follows that

converge uniformly for . Let denote the limit. Clearly, is continuous and -adapted. On the other hand, it can be seen from (3.9) that is a Cauchy sequence in for any t.

Letting , it follows that

is a Cauchy sequence in . Therefore, we also have in

where depends on and , which yields . It remains to show that satisfies (2.2). Note that

∎
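Step II builds the solution as the limit of Picard approximations. The sketch below shows the same mechanism on a deterministic analogue: the Volterra equation y(t) = 1 + ∫_0^t y(s) ds, whose exact solution is e^t. It drops the stochastic integral entirely. The function `picard_volterra`, the trapezoidal quadrature and the grid size are our own illustrative choices. The sup-distance between successive iterates shrinks roughly like 1/k!, which is the kind of factorial decay that induction claims such as (3.9) typically exploit.

```python
import math


def picard_volterra(kernel, g, T=1.0, n=200, iterations=30):
    """Picard iterates Y_{k+1}(t) = g(t) + int_0^t kernel(t, s) Y_k(s) ds on a
    uniform grid, with the integral approximated by the trapezoidal rule.
    Returns the grid, the last iterate and sup_t |Y_{k+1}(t) - Y_k(t)| per step."""
    h = T / n
    grid = [i * h for i in range(n + 1)]
    y = [g(ti) for ti in grid]          # Y_0(t) := g(t)
    diffs = []
    for _ in range(iterations):
        new = []
        for i, ti in enumerate(grid):
            vals = [kernel(ti, grid[j]) * y[j] for j in range(i + 1)]
            # Trapezoidal rule on [0, t_i]; the integral is 0 at t_0 = 0.
            integral = h * (sum(vals) - 0.5 * (vals[0] + vals[-1])) if i > 0 else 0.0
            new.append(g(ti) + integral)
        diffs.append(max(abs(u - v) for u, v in zip(new, y)))
        y = new
    return grid, y, diffs


# Usage: y(t) = 1 + int_0^t y(s) ds has the exact solution e^t.
grid, y, diffs = picard_volterra(lambda t, s: 1.0, lambda t: 1.0)
print(f"Y(1) after 30 iterations: {y[-1]:.6f}   exact e = {math.e:.6f}")
print("sup-differences of the first iterates:", [f"{d:.2e}" for d in diffs[:6]])
```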

3.2. Hölder continuity of the analytic solutions

We now show that the analytical solution of the SVIDE (2.2) is Hölder continuous.

Theorem 3.2.

Assume that () holds. Then, the solution Y(t) is Hölder continuous with exponent
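Brownian motion is the simplest example of the behavior described in Definition 2.2. For a standard Brownian motion W we have E|W(t)-W(s)|^2 = |t-s|, so (E|W(t)-W(s)|^2)^{1/2} = |t-s|^{1/2}. In other words, W is mean-square Hölder continuous with exponent 1/2 in that convention. The Monte Carlo sketch below checks that the ratio E|W(t)-W(s)|^2 / |t-s| stays near 1 as |t-s| shrinks. The helper `mean_square_increment`, the seed and the sample size are illustrative assumptions. The sketch does not simulate the solution of (1.1), whose exponent is the subject of Theorem 3.2.

```python
import math
import random


def mean_square_increment(delta, n_samples, rng):
    """Monte Carlo estimate of E|W(t + delta) - W(t)|^2 for a standard
    one-dimensional Brownian motion W; the increment is N(0, delta)."""
    total = 0.0
    for _ in range(n_samples):
        dw = rng.gauss(0.0, math.sqrt(delta))
        total += dw * dw
    return total / n_samples


rng = random.Random(2024)
deltas = [0.1, 0.01, 0.001]
# The exact value is delta, so each ratio should be close to 1 (up to sampling error):
# E|W(t) - W(s)|^2 <= M |t - s| holds with M = 1.
ratios = [mean_square_increment(d, 20000, rng) / d for d in deltas]
for d, r in zip(deltas, ratios):
    print(f"|t - s| = {d:<6}  E|W(t) - W(s)|^2 / |t - s| = {r:.3f}")
```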

4. Numerical analysis of the class of SVIDEs

Let . For , we define the numerical approximation of the SVIDE.

4.1. θ-Euler-Maruyama method

We apply the θ-EM method to the SVIDE (1.1) (see [1]–[3] and the references therein):

| (4.1) | ||||

where with initial data , where , . By induction, we rewrite (4.1) in the following form:

| (4.2) | ||||
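To make the structure of a θ-EM step concrete, here is a minimal sketch for a hypothetical scalar linear test SVIDE:

dY(t) = (a Y(t) + ∫_0^t k(t,s) Y(s) ds) dt + b Y(t) dW(t).

This equation is our own example and is not necessarily of the form (1.1). We also assume a left-rectangle rule for the memory integral, so the quadrature at t_{n+1} uses only Y_0, ..., Y_n. The implicit part of each step then reduces to a division by 1 - θha. The function `theta_em_svide` and all parameter values are illustrative.

```python
import math
import random


def theta_em_svide(a, b, kernel, y0, T, N, theta, rng):
    """One path of a theta-EM scheme for the hypothetical linear test SVIDE
        dY(t) = (a*Y(t) + int_0^t kernel(t, s) Y(s) ds) dt + b*Y(t) dW(t).
    Memory term at t_n (left-rectangle rule): I_n = h * sum_{j<n} kernel(t_n, t_j) Y_j.
    Step: Y_{n+1} = Y_n + h*[theta*(a*Y_{n+1} + I_{n+1}) + (1-theta)*(a*Y_n + I_n)]
                    + b*Y_n*dW_n,
    which is linear in Y_{n+1} because I_{n+1} only uses Y_0, ..., Y_n."""
    h = T / N
    denom = 1.0 - theta * h * a
    if abs(denom) < 1e-14:
        raise ValueError("step size h must satisfy 1 - theta*h*a != 0")
    t = [n * h for n in range(N + 1)]
    y = [y0]

    def memory(n):
        # Left-rectangle approximation of int_0^{t_n} kernel(t_n, s) Y(s) ds.
        return h * sum(kernel(t[n], t[j]) * y[j] for j in range(n))

    i_n = 0.0  # I_0 = 0: the memory integral vanishes at t = 0
    for n in range(N):
        dw = rng.gauss(0.0, math.sqrt(h))   # Brownian increment W(t_{n+1}) - W(t_n)
        i_next = memory(n + 1)              # needs y[0..n] only
        rhs = y[n] + h * (theta * i_next + (1.0 - theta) * (a * y[n] + i_n)) + b * y[n] * dw
        y.append(rhs / denom)               # solve for Y_{n+1} in the implicit drift part
        i_n = i_next
    return t, y


# Usage: one sample path with an exponentially fading memory kernel (illustrative values).
rng = random.Random(42)
t, y = theta_em_svide(a=-1.0, b=0.5, kernel=lambda t, s: math.exp(-(t - s)),
                      y0=1.0, T=1.0, N=200, theta=0.5, rng=rng)
print(f"Y_N at T = {t[-1]:.2f}: {y[-1]:.4f}")
```

With θ = 0 the step is fully explicit (Euler-Maruyama). With θ = 1/2 the drift is treated by the trapezoidal rule.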

Remark 4.1.

We now examine the stability of the θ-EM scheme (4.1) in the following result.

Theorem 4.1.

Assume that () holds. Let be the numerical solution of the θ-EM method (4.1). Then there exists a positive constant , which depends on and , but not on , such that

Proof.

For all , we have

| (4.4) |

where

and

Using Cauchy’s inequality, Minkowski’s inequality and (), we show that

As in [4, 3, 1, 2], the convergence order of the θ-EM method can be improved by including more terms in the numerical approximation.
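For the hypothetical scalar test equation dY = aY dt + bY dW (with no memory term), the second moments of the θ-EM scheme can be computed exactly. This illustrates the kind of h-independent bound asserted in Theorem 4.1. Because Y_n is independent of ΔW_n ~ N(0, h), we have E|Y_{n+1}|^2 = R(h) E|Y_n|^2, where

R(h) = ((1 + (1-θ)ha)^2 + b^2 h) / (1 - θha)^2.

Writing R(h) = 1 + c_h h, the discrete Gronwall-type bound E|Y_n|^2 ≤ |Y_0|^2 e^{c_h t_n} follows from 1 + x ≤ e^x. The helper name and the parameter values below are assumptions for illustration.

```python
import math


def theta_em_second_moments(a, b, theta, T, N, y0=1.0):
    """Exact E|Y_n|^2, n = 0..N, for the theta-EM scheme
        Y_{n+1} = Y_n * (1 + (1 - theta)*h*a + b*dW_n) / (1 - theta*h*a)
    applied to the hypothetical test SDE dY = a*Y dt + b*Y dW,
    together with the Gronwall-type bounds |y0|^2 * exp(c_h * t_n)."""
    h = T / N
    # Amplification factor of the second moment per step.
    r = ((1.0 + (1.0 - theta) * h * a) ** 2 + b * b * h) / (1.0 - theta * h * a) ** 2
    moments = [y0 * y0]
    for _ in range(N):
        moments.append(moments[-1] * r)
    # r = 1 + c_h*h and (1 + x)^n <= exp(n*x) give the bound below.
    c_h = (r - 1.0) / h
    bounds = [y0 * y0 * math.exp(c_h * n * h) for n in range(N + 1)]
    return moments, bounds


a, b, theta, T = 0.5, 1.0, 0.5, 1.0
limit = math.exp((2.0 * a + b * b) * T)  # exact E|Y(T)|^2 of the test SDE for y0 = 1
sups, within_bound = [], []
for N in (10, 100, 1000):
    moments, bounds = theta_em_second_moments(a, b, theta, T, N)
    within_bound.append(all(m <= g * (1.0 + 1e-12) for m, g in zip(moments, bounds)))
    sups.append(max(moments))
    print(f"h = {T / N:<6} sup_n E|Y_n|^2 = {sups[-1]:.4f}   (exact E|Y(T)|^2 = {limit:.4f})")
```

The supremum stays bounded as h decreases, which is the discrete analogue of the boundedness in Theorem 4.1 for this toy problem.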

4.2. Strong convergence of the θ-Euler-Maruyama method

To establish the convergence of the θ-Euler-Maruyama method (4.1), we now introduce continuous-time interpolations of the discrete numerical approximations.

Define and , for with .

Let with , and let X(t) be the continuous-time form of with ; we then obtain

| (4.7) | ||||
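Continuous-time interpolations of this kind rely on step functions that freeze time at the last grid point. The definitions in the text are not reproduced here, so the sketch assumes the common choices:

- η(t) = t_n for t in [t_n, t_{n+1}), and
- a piecewise-constant process equal to Y_n on the same interval.

The names `step_index`, `eta` and `piecewise_constant` are hypothetical. The small `eps` guards against floating-point round-off when t lies exactly on a grid point.

```python
import math


def step_index(t, h, eps=1e-12):
    """Index n with t in [t_n, t_{n+1}), t_n = n*h. The eps term guards against
    round-off at grid points (in floating point, 0.3 / 0.1 is slightly below 3)."""
    return math.floor(t / h + eps)


def eta(t, h):
    """Step function eta(t) = t_n for t in [t_n, t_{n+1})."""
    return step_index(t, h) * h


def piecewise_constant(values, h):
    """Piecewise-constant process Z(t) = Y_n for t in [t_n, t_{n+1});
    the last value is held at the final time."""
    def z(t):
        return values[min(step_index(t, h), len(values) - 1)]
    return z


# Usage: values Y_0, Y_1, Y_2 on the grid 0, 0.5, 1.0.
z = piecewise_constant([1.0, 2.0, 3.0], 0.5)
print(eta(0.25, 0.1), z(0.49), z(0.5), z(1.0))
```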

The following theorem establishes the convergence order of (4.1); its proof proceeds similarly to that in [3] for the case when

Lemma 4.1.

Assume that () holds. Let be the numerical solution of the θ-Euler-Maruyama method (4.1). Then there exists a positive constant , which depends on , and T, but not on h, such that

Proof.

Theorem 4.2.

Suppose that () holds and that , for , satisfy (). Let X(t) and Y(t) be the numerical solution of the θ-Euler-Maruyama method and the analytical solution of (1.1), respectively. Then there exists a positive constant , which depends on and T, but not on h, such that

Proof.

By (), Cauchy’s inequality and the Itô isometry, we have

| (4.10) |

where

and

By Cauchy’s inequality and the Itô isometry, we obtain

Next, using () and , one has

By Hölder’s inequality, the Itô isometry, Theorem 4.1 and Lemma 4.1, we have

and

By (), we show that

Thus

| (4.11) |

where

and

Using Theorem 4.1, we get

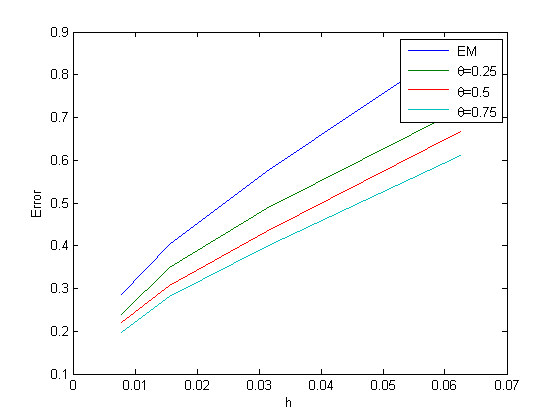

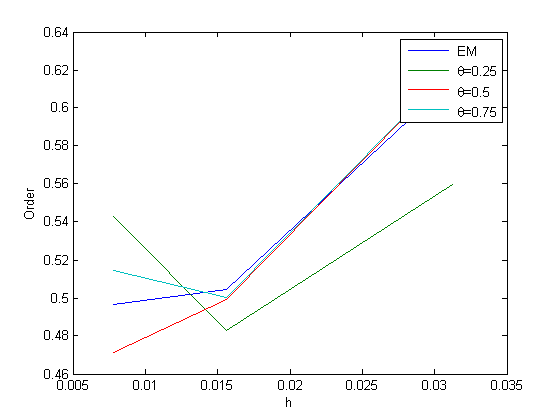

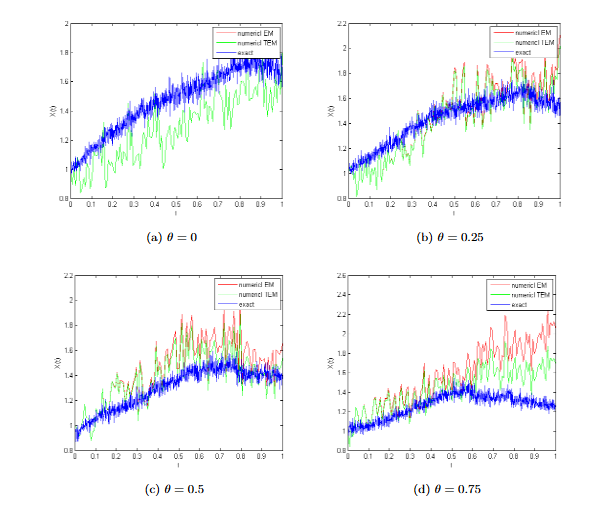

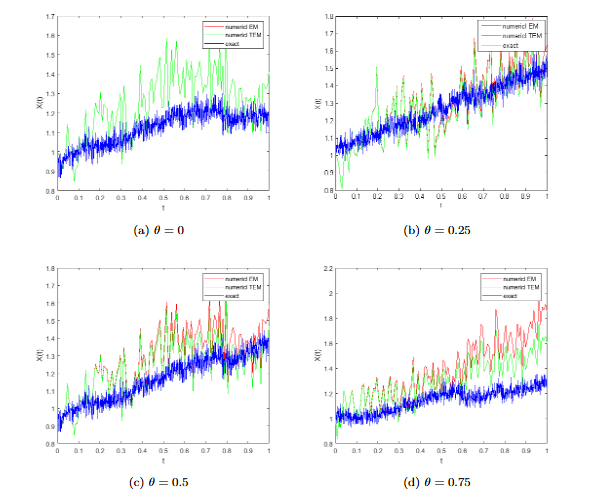

We now present numerical experiments that support the theoretical prediction of the earlier sections that the θ-EM method for this class of SVIDEs converges with order .
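In such experiments, the empirical strong order is usually read off as the slope of log(RMS error) against log(h). The sketch below shows this procedure on a hypothetical test SDE with a known solution, dY = aY dt + bY dW. Its solution is geometric Brownian motion, Y(T) = Y_0 exp((a - b^2/2)T + bW(T)). This equation has no memory term and is not the paper's test problem. The functions `strong_errors` and `fitted_order`, the parameter values and the number of paths are our own choices. All step sizes reuse the same Brownian paths, which reduces the noise in the fitted slope. For Euler-type schemes with multiplicative noise, the fitted slope is typically close to 1/2.

```python
import math
import random


def strong_errors(a, b, theta, T, y0, levels, n_paths, seed=1):
    """Monte Carlo RMS errors (E|Y(T) - Y_N|^2)^(1/2) of the theta-EM scheme for the
    hypothetical test SDE dY = a*Y dt + b*Y dW, with exact solution
    Y(T) = y0 * exp((a - b^2/2) T + b W(T)). Step sizes h = T / 2^k for k in levels
    share the same Brownian paths, sampled on the finest grid."""
    rng = random.Random(seed)
    n_fine = 2 ** max(levels)
    h_fine = T / n_fine
    sq_err = {k: 0.0 for k in levels}
    for _ in range(n_paths):
        dw_fine = [rng.gauss(0.0, math.sqrt(h_fine)) for _ in range(n_fine)]
        exact = y0 * math.exp((a - 0.5 * b * b) * T + b * sum(dw_fine))
        for k in levels:
            n_steps = 2 ** k
            stride = n_fine // n_steps
            h = T / n_steps
            y = y0
            for n in range(n_steps):
                dw = sum(dw_fine[n * stride:(n + 1) * stride])  # coarse Brownian increment
                y = y * (1.0 + (1.0 - theta) * h * a + b * dw) / (1.0 - theta * h * a)
            sq_err[k] += (exact - y) ** 2
    return {T / 2 ** k: math.sqrt(sq_err[k] / n_paths) for k in levels}


def fitted_order(errors):
    """Least-squares slope of log(error) versus log(h)."""
    hs = sorted(errors)
    xs = [math.log(h) for h in hs]
    ys = [math.log(errors[h]) for h in hs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


# Usage (illustrative parameters): step sizes 2^-4, ..., 2^-8.
errors = strong_errors(a=0.5, b=0.5, theta=0.5, T=1.0, y0=1.0,
                       levels=range(4, 9), n_paths=1000)
for h, e in sorted(errors.items(), reverse=True):
    print(f"h = {h:.5f}   RMS error = {e:.5f}")
print(f"fitted strong order: {fitted_order(errors):.2f}")
```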