ATM Transaction Simulation: Combination of ACDs and Cox Process

Abstract.

Two main approaches to analyzing ultra-high-frequency data, such as ATM (automated teller machine) transactions, are the Cox process and autoregressive conditional duration (ACD) models. This paper combines both models and discusses the advantages of the combination. Functional data analysis is proposed as a useful method for modeling the intensity of the counting process. Results for two simulated cases are verified, real data are analyzed, and conclusions are given.

Key words and phrases:

ATM transaction, ACD model, Cox process, functional data analysis, intensity function.

2005 Mathematics Subject Classification: 62M10.

1. Introduction

Irregularly spaced financial time series have received considerable attention in the high-frequency data literature; see [6]. High-frequency time series forecasting is a crucial field concerned with the analysis of data recorded at very short intervals, from seconds down to fractions of a second. It is fundamental in many sectors, from meteorology to finance, energy management, and quality control in manufacturing. Intraday ATM and POS transactions [8], stock market trading [11], and volatility patterns in high-frequency trading [9] are well-known examples of such time series. In the current paper, ATM transactions are studied. To this end, suppose that $N(t)$ counts the number of intraday transactions of an ATM of a specific bank recorded up to time $t$. Then $\{N(t), t\ge 0\}$ is a counting process with intensity function $\lambda(t)$.

There are two main, independent approaches to analyzing such time series: the Cox (doubly stochastic Poisson) process of [11], referred to in this paper as approach (a), and the ACD models of [4], approach (b). In the current paper, a combined approach, called approach (c), is proposed, in which the data are assumed to be governed simultaneously by both a Cox process and an ACD model. To the best of the author's knowledge, this model has not been applied before in the literature. It has several advantages, which are listed below and developed in Section 2. Approaches (a) and (b) are also adapted to the problem at hand.

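Approach (a): Cox process. Following [11], assume that $N(t)$ is modeled as a Cox process, that is, a Poisson process with a random intensity $\lambda(t)$. Conditionally on the path of $\lambda$,
$$P\big(N(t)-N(s)=k \mid \lambda\big)=\frac{\Lambda(s,t)^k}{k!}\,e^{-\Lambda(s,t)},\qquad k=0,1,2,\ldots,$$
where $\Lambda(s,t)=\int_s^t \lambda(u)\,du$ and $\Lambda(t)=\Lambda(0,t)$.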

The Cox process is a type of point process. For a comprehensive review of Cox processes, and of point processes in general, see [3].
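The following Python sketch illustrates the two-step Cox construction on one trading day, rescaled to $[0,1]$. It first draws a random level $Z$ of the intensity. Then, conditionally on $Z$, it generates an inhomogeneous Poisson process by Lewis-Shedler thinning. The gamma-distributed level and the sinusoidal intraday shape are hypothetical choices made only for this example. They are not taken from [11].

```python
import numpy as np

def intensity_shape(t):
    # Hypothetical deterministic intraday shape on [0, 1]; it integrates to 1.
    return 1.0 + 0.5 * np.sin(2.0 * np.pi * t)

def simulate_cox_path(rng, gamma_shape=4.0, gamma_scale=5.0):
    """One path of a Cox process on [0, 1] with intensity lambda(t) = Z * shape(t)."""
    # Step 1: draw the random part of the intensity (hypothetical gamma level Z).
    z = rng.gamma(gamma_shape, gamma_scale)
    # Step 2: given Z, simulate an inhomogeneous Poisson process by thinning.
    lam_max = 1.5 * z                        # upper bound of z * shape(t) on [0, 1]
    n_candidates = rng.poisson(lam_max)      # homogeneous Poisson(lam_max) points
    candidates = np.sort(rng.uniform(0.0, 1.0, n_candidates))
    accept_prob = z * intensity_shape(candidates) / lam_max
    accept = rng.uniform(0.0, 1.0, n_candidates) < accept_prob
    return candidates[accept]                # sorted event times

rng = np.random.default_rng(0)
counts = np.array([simulate_cox_path(rng).size for _ in range(4000)])
print(counts.mean(), counts.var())
```

Because the intensity is random, the counts are overdispersed. For these settings $E[N(1)]=20$ while $\mathrm{Var}[N(1)]=E[Z]+\mathrm{Var}(Z)=120$. This gives a quick check that separates a Cox process from a Poisson process with deterministic intensity.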

To find the functional forms of $\lambda(t)$ and of the cumulative intensity $\Lambda(t)=\int_0^t\lambda(u)\,du$, the functional data analysis (FDA) method is often used. It gives an approximation of $\lambda(t)$.

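Approach (b): ACD models. The ACD model relies mainly on the stopping times of $N(t)$ and on the properties of the stopped process. Unlike the Cox process, it does not assume that $N(t)$ is a Poisson process. To be more specific, let $t_i$ be the time of the $i$-th transaction in a day, with $t_0=0$ the start of the day.

The corresponding duration is defined by
$$x_i=t_i-t_{i-1}.$$

Let $x_i$ be modeled by the ACD model (with intercept $\omega$) of [4], i.e.,
$$x_i=\psi_i\,\varepsilon_i,$$

where the errors $\varepsilon_i$ are independent, positive random variables with $E(\varepsilon_i)=1$, and
$$\psi_i=\omega+\sum_{j=1}^{p}\alpha_j\,x_{i-j}+\sum_{j=1}^{q}\beta_j\,\psi_{i-j},$$

so that $\psi_i$ is the conditional expected duration. Here $p$ and $q$ are the unknown orders of the model, which are optimized when solving the case-study problem. The parameters $\alpha_j$ and $\beta_j$ (together with $\omega$) are unknown and must be estimated. Engle and Russell [4] pointed out the close relationship between ACD and GARCH models. The R package ACDm estimates these parameters accurately and quickly; see [1].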
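The ACD recursion can be sketched for $p=q=1$ with unit-mean exponential errors. The function below is only an illustrative simulator, and its names are hypothetical. For estimation, the text relies on the R package ACDm [1], which this sketch does not reproduce. The sketch also shows how $N(t)$ is recovered from the durations through the partial sums $t_i=x_1+\cdots+x_i$.

```python
import numpy as np

def simulate_acd11(n, omega, alpha, beta, rng):
    """Durations x_i = psi_i * eps_i, psi_i = omega + alpha*x_{i-1} + beta*psi_{i-1}."""
    if not (omega > 0 and alpha >= 0 and beta >= 0 and alpha + beta < 1):
        raise ValueError("need omega > 0, alpha, beta >= 0 and alpha + beta < 1")
    x = np.empty(n)
    psi = omega / (1.0 - alpha - beta)    # start at the unconditional mean duration
    x_prev = psi
    for i in range(n):
        psi = omega + alpha * x_prev + beta * psi
        x[i] = psi * rng.exponential(1.0)  # unit-mean exponential error (EACD)
        x_prev = x[i]
    return x

def count_events(durations, t):
    """N(t): number of transaction times t_i = x_1 + ... + x_i with t_i <= t."""
    times = np.cumsum(durations)
    return np.searchsorted(times, t, side="right")
```

With $\alpha+\beta<1$, the unconditional mean duration is $\omega/(1-\alpha-\beta)$, which serves as a simple sanity check on simulated output.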

Approach (c): Combined model. Here, it is assumed that both the Cox and the ACD models hold simultaneously. Some advantages of this approach are:

1. Under this setting, an exact Monte Carlo simulation of the dynamics of $\lambda(t)$ is derived.
2. It avoids relying on FDA alone. FDA is often time-consuming, because the number of terms in the linear combination of orthogonal basis functions must be chosen.
3. It reduces the dependence on the choice of basis functions and their number. This choice is somewhat subjective, yet critical in applied problems.
4. Under the combined model, $N(t)$ can also be simulated directly from a binomial distribution that approximates the Poisson distribution; see Section 2.3.

2. Dynamics of $\lambda(t)$

Here, the dynamics of $\lambda(t)$ and of its integral $\Lambda(t)$ are derived. First, the FDA method is presented, which gives an approximation of $\lambda(t)$. Then, under the combined model, $\Lambda(t)$ is derived by Monte Carlo simulation, using the exact distribution of the partial sums of the durations $x_i$.

2.1. FDA

In practice, FDA is used to model the intensity function of a Poisson process as a random element of a Hilbert space; see [7]. To this end, consider $n$ days, and let $N_k(t)$ be the number of transactions during the $k$-th day, with intensity function $\lambda_k(t)$. Following [8], the periodic effects across days are removed first. Let $X_k$ denote the intensity function of the $k$-th day after its periodic component has been removed. Consider the following functional autoregressive model for $X_k$:
$$X_{k+1}(t)=\int \psi(t,s)\,X_k(s)\,ds+\varepsilon_{k+1}(t).$$
The kernel $\psi$ is estimated using functional principal components. The error terms $\varepsilon_k$ are assumed to be independent functions such that $E[\varepsilon_k(t)]=0$ for each $k$ and $t$.

For a comprehensive review of functional data analysis, see [10]. The R package fda.usc is a useful tool for studying functional time series data; see [5]. Next, use a basis representation of $X_k$ such as
$$X_k(t)=\sum_{j=1}^{K}c_{kj}\,\phi_j(t)$$
(see [10]) for basis functions $\phi_j$, $j=1,\ldots,K$, say Fourier basis functions.

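Widely used choices of basis in the literature include the Fourier basis mentioned above. In practice, however, the expansion holds only up to some error terms. The functional autoregression therefore induces an approximately linear relation between the basis coefficients of consecutive days. Following [2], this is a type of functional regression analysis: the basis coefficients are computed for each day, and the parameters of a multiple regression model are estimated.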
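The following is a minimal sketch of this functional regression. It assumes a Fourier basis and a first-order autoregression acting on the basis coefficients. Each daily curve is projected onto the basis by least squares. The coefficient vector of day $k+1$ is then regressed on that of day $k$. The function names are hypothetical. In practice, the R package fda.usc [5] offers far more complete tools.

```python
import numpy as np

def fourier_basis(t, n_harmonics):
    """Columns 1, sin(2*pi*j*t), cos(2*pi*j*t) for j = 1..n_harmonics, on [0, 1)."""
    cols = [np.ones_like(t)]
    for j in range(1, n_harmonics + 1):
        cols.append(np.sin(2.0 * np.pi * j * t))
        cols.append(np.cos(2.0 * np.pi * j * t))
    return np.column_stack(cols)

def basis_coefficients(curves, t, n_harmonics):
    """Least-squares basis coefficients of each daily curve (one row per day)."""
    B = fourier_basis(t, n_harmonics)                   # (n_points, n_basis)
    coef, *_ = np.linalg.lstsq(B, curves.T, rcond=None)
    return coef.T                                       # (n_days, n_basis)

def fit_coefficient_var1(coef):
    """Multiple regression c_{k+1} = A c_k + e_k; returns the estimate of A."""
    X, Y = coef[:-1], coef[1:]
    A_t, *_ = np.linalg.lstsq(X, Y, rcond=None)         # solves X @ A_t ~= Y
    return A_t.T
```

The estimated matrix $A$ plays the role of the kernel $\psi$ restricted to the span of the chosen basis. Its quality therefore depends on the number of basis functions, the subjective choice mentioned in Section 1.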

2.2. $\lambda(t)$ in the combined model

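Here, the exact functional form of $\lambda(t)$ is derived. To this end, let $t_k$ be the time of the $k$-th transaction, i.e., the $k$-th partial sum of the durations $x_i$.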

Notice that

Hence,

Let . One can see that

It is concluded that

This relation leads to the computation of the exact probabilities. Notice that

Therefore, the Monte Carlo estimate of $P(N(t)=k)$ is the proportion of the $M$ Monte Carlo repetitions in which the random interval $[t_k, t_{k+1})$ contains the constant $t$. In practice, the orders and parameters of the ACD model are first fitted to the durations $x_i$. The Monte Carlo simulation then approximates the exact distribution of $t_k$ and $t_{k+1}$, and hence $P(N(t)=k)$ is computed. Notice that

Therefore,

where

This is a non-linear equation, to which root-finding methods such as Newton-Raphson are applicable, as follows. At the $m$-th iteration, let

First, $\Lambda(t)$ is found for discrete values of $t$. Numerical differentiation is then applied to obtain discrete values of $\lambda(t)$. Finally, a smoothing method such as splines or smoothing polynomials is used to derive the functional form of $\lambda(t)$.
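The steps of this subsection can be strung together in a short Monte Carlo sketch. Two assumptions are made explicit here:

- The durations follow an ACD(1,1) model with exponential errors.
- The non-linear equation is taken to be $P(\mathrm{Poisson}(\Lambda(t))\le k)=\hat F_k(t)$ for a fixed $k$. Here $\hat F_k(t)$ is the Monte Carlo proportion of repetitions with $t_{k+1}>t$, which is equivalent to $N(t)\le k$.

This cumulative form is our choice, made because it is monotone in $\Lambda$. It is not necessarily the exact equation used above. Newton-Raphson uses the identity $\frac{d}{d\Lambda}P(\mathrm{Poisson}(\Lambda)\le k)=-P(\mathrm{Poisson}(\Lambda)=k)$. The derivative of $\Lambda(t)$ is then taken numerically and smoothed by a polynomial. All function names are hypothetical.

```python
import math
import numpy as np

def simulate_arrival_k1(k, omega, alpha, beta, n_rep, rng):
    """Monte Carlo draws of t_{k+1} = x_1 + ... + x_{k+1} under an ACD(1,1) model."""
    mean_dur = omega / (1.0 - alpha - beta)
    psi = np.full(n_rep, mean_dur)
    x_prev = np.full(n_rep, mean_dur)
    arrival = np.zeros(n_rep)
    for _ in range(k + 1):
        psi = omega + alpha * x_prev + beta * psi
        x_prev = psi * rng.exponential(1.0, n_rep)
        arrival += x_prev
    return arrival

def poisson_cdf(k, lam):
    """P(Poisson(lam) <= k), summing the pmf terms recursively."""
    term, total = math.exp(-lam), 0.0
    for j in range(k + 1):
        if j > 0:
            term *= lam / j
        total += term
    return total

def solve_cumulative_intensity(k, target, tol=1e-10, max_iter=100):
    """Newton-Raphson root L of P(Poisson(L) <= k) = target."""
    if not 0.0 < target < 1.0:
        raise ValueError("target probability must lie strictly between 0 and 1")
    lam = float(k + 1)
    for _ in range(max_iter):
        f = poisson_cdf(k, lam) - target
        fprime = -math.exp(-lam) * lam**k / math.factorial(k)  # = -P(Poisson(L) = k)
        if fprime == 0.0:
            raise RuntimeError("zero derivative in Newton-Raphson")
        step = f / fprime
        lam = max(lam - step, 1e-12)  # keep the iterate positive
        if abs(step) < tol:
            return lam
    raise RuntimeError("Newton-Raphson did not converge")

def estimate_intensity(t_grid, k, omega, alpha, beta, n_rep=200_000, seed=0, degree=3):
    """Lambda(t) on a grid, its numerical derivative, and a polynomial-smoothed lambda(t)."""
    rng = np.random.default_rng(seed)
    t_k1 = simulate_arrival_k1(k, omega, alpha, beta, n_rep, rng)
    # {N(t) <= k} = {t_{k+1} > t}: Monte Carlo proportion over the repetitions.
    cdf_hat = np.array([np.mean(t_k1 > t) for t in t_grid])
    Lam = np.array([solve_cumulative_intensity(k, F) for F in cdf_hat])
    lam_raw = np.gradient(Lam, t_grid, edge_order=2)   # numerical differentiation
    coeffs = np.polyfit(t_grid, lam_raw, degree)       # smoothing polynomial
    return Lam, lam_raw, np.polyval(coeffs, t_grid)
```

With $\alpha=\beta=0$, the durations are i.i.d. exponential with mean $\omega$. In that case $N(t)$ is exactly Poisson with $\Lambda(t)=t/\omega$, which gives a convenient check of the whole pipeline.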

2.3. Another combined approach

In Sections 2.1 and 2.2, we proposed methods for obtaining the functional form of $\lambda(t)$ based on the Cox process and ACD models. In practice, however, what is recorded is the series of numbers of events, each occurring in a small fraction of time. It is therefore necessary to simulate $N(t)$ itself directly.

The idea behind this method is that the binomial distribution approximates the Poisson distribution. To present the method, consider a busy business day. Suppose that the number $n$ of possible time points in $[0,t]$ at which a transaction may occur, i.e., the number of Bernoulli trials, is expected to be large. However, because of some political or social event that happened the day before, the probability $p$ of a transaction at each point is very low. It is low enough that $np$ remains moderate, so the binomial distribution with parameters $n$ and $p$ is close to the Poisson distribution with mean $np$.

Following [8], this approximation makes $np$ a good estimate of the expected number of transactions up to time $t$. Therefore, $N(t)$ is simulated directly by sampling from a binomial distribution with parameters $n$ and $p$.

Then, the numbers of transactions are collected and an ACD model is fitted. This yields empirical estimates of the cumulative intensity and, consequently, of the intensity $\lambda(t)$.
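The binomial idea can be sketched by splitting $[0,1]$ into many short slots. One Bernoulli trial is run per slot, with a small success probability $\lambda(s)\,\Delta s$. When the intensity is constant, the count at time 1 is exactly binomial with parameters $n$ and $p$. With a time-varying intensity, it is a sum of independent Bernoulli variables whose distribution is close to Poisson with mean $\sum_j p_j$. The sinusoidal intensity and the function names below are hypothetical.

```python
import numpy as np

def intensity(t):
    # Hypothetical intraday intensity on [0, 1] (expected events per unit time).
    return 5.0 + 3.0 * np.sin(2.0 * np.pi * t)

def simulate_counts_bernoulli(n_slots, n_rep, seed=0):
    """Approximate Poisson paths N(t) by one Bernoulli trial in each of n_slots slots."""
    rng = np.random.default_rng(seed)
    dt = 1.0 / n_slots
    mid = (np.arange(n_slots) + 0.5) * dt
    p = intensity(mid) * dt                    # small success probability per slot
    if p.max() >= 1.0:
        raise ValueError("slots too coarse: per-slot probability must be < 1")
    hits = rng.random((n_rep, n_slots)) < p    # Bernoulli trials, one per slot
    return np.cumsum(hits, axis=1)             # N at the right end of each slot
```

For a constant probability $p$, NumPy's `rng.binomial(n, p)` draws the count in a single call. The slot-by-slot version is kept here because it also yields the whole path of $N(t)$.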

Suppose now that a dynamic for $\lambda(t)$ is to be proposed. For example, consider dynamics of $\lambda(t)$ given by an Itô stochastic differential equation, as follows:

where $W(t)$ is a standard Brownian motion on $(0,1)$. The parameters of this equation are a mean and a standard deviation, and they are estimated by the corresponding sample values. This gives the proposed dynamics of $\lambda(t)$.
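As an illustration, the sketch below assumes that the SDE is the arithmetic Brownian motion $dX(t)=\mu\,dt+\sigma\,dW(t)$ on $[0,1]$. This is one simple Itô equation whose two parameters are a mean and a standard deviation. The choice is an assumption of the example, not necessarily the model intended above. The parameters $\mu$ and $\sigma$ are estimated from the sample mean and standard deviation of the increments.

```python
import numpy as np

def simulate_abm(x0, mu, sigma, n_steps, seed=0):
    """Path of dX = mu dt + sigma dW on [0, 1] over a uniform grid of n_steps steps."""
    rng = np.random.default_rng(seed)
    dt = 1.0 / n_steps
    dW = rng.normal(0.0, np.sqrt(dt), n_steps)   # Brownian increments
    increments = mu * dt + sigma * dW             # Euler-Maruyama step (exact here)
    return np.concatenate([[x0], x0 + np.cumsum(increments)])

def estimate_mu_sigma(path, dt):
    """Moment estimates from the sample mean and SD of the increments."""
    inc = np.diff(path)
    return inc.mean() / dt, inc.std(ddof=1) / np.sqrt(dt)
```

On a unit horizon, the drift estimate equals $X(1)-X(0)$ and therefore has standard deviation $\sigma$. By contrast, $\hat\sigma$ is very precise on a fine grid. Only the volatility can be pinned down reliably from a single day.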

3. Simulations

In this section, some simulated cases are analyzed. Banks often refuse to provide tick-by-tick ATM transaction data. Because of network security concerns and customer confidentiality, they do not make such data available to the public, or make them available only with difficulty. Usually, only a small part of the database from the core banking system is given to researchers. This is why, in the current paper, we only study simulated situations that correspond to real data. To use simulated data instead of real data, we must be sure that data simulated from the combined model are good approximations of the real data. Since both cases are based on the dataset of [11], these requirements are met. Case 1 studies the Cox process with a known dynamic for $\lambda(t)$. Case 2 gives simulation results under the combined model.

Case 1: $\lambda(t)$ as an OU process. In the Cox process, motivated by [11], suppose that $\lambda(t)$ is an Ornstein-Uhlenbeck (OU) process defined by

where $dW(t)$ is the increment of a Brownian motion. Here, the parameter values are chosen to correspond to the empirical results of [11]. Consider a grid of time points $0=s_0<s_1<\cdots<s_m=1$. To simulate $N(t)$ at the grid points, the increments $N(s_j)-N(s_{j-1})$ are sampled from a Poisson distribution with rate $\int_{s_{j-1}}^{s_j}\lambda(u)\,du$. In this way, the partial sums of the increments generate paths of a Poisson process, from which the durations $x_i$ are computed. It is easy to see that $x_i$ follows an ACD process with intercept, as follows:

where $\varepsilon_i$ has an exponential distribution with mean 1.
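The simulation scheme of Case 1 can be sketched in four steps:

1. An Euler discretization of a mean-reverting OU intensity.
2. Poisson increments on the grid with rate $\lambda(s_j)\,\Delta s$.
3. Partial sums of the increments, giving $N(s_j)$.
4. Event times, placed uniformly within each grid cell, giving the durations.

The mean-reverting parameterization and all parameter values are hypothetical and are not the values of [11]. A Gaussian OU path can become negative, so the rate is truncated at zero.

```python
import numpy as np

def simulate_case1(n_steps=2000, lam0=200.0, kappa=5.0, theta=200.0, sigma=20.0, seed=0):
    """Hypothetical Case 1 sketch: OU intensity -> Poisson increments -> durations on [0, 1]."""
    rng = np.random.default_rng(seed)
    dt = 1.0 / n_steps
    # Euler scheme for d lam = -kappa * (lam - theta) dt + sigma dW.
    lam = np.empty(n_steps)
    lam[0] = lam0
    for j in range(1, n_steps):
        lam[j] = (lam[j - 1] - kappa * (lam[j - 1] - theta) * dt
                  + sigma * np.sqrt(dt) * rng.normal())
    # Poisson increments with rate lambda(s_j) * dt, truncated at zero.
    increments = rng.poisson(np.clip(lam, 0.0, None) * dt)
    counts = np.cumsum(increments)                # N(s_j) on the grid
    # Place each event uniformly inside its grid cell to obtain event times.
    left = np.repeat(np.arange(n_steps) * dt, increments)
    times = np.sort(left + rng.uniform(0.0, dt, left.size))
    durations = np.diff(np.concatenate([[0.0], times]))
    return lam, counts, times, durations
```

The returned `durations` are the input to which an ACD model would then be fitted. Refining the grid (`n_steps`) reduces the discretization error in both the intensity and the event times.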

Case 2: Combined model. Here, in addition to the Cox process assumption, assume that the durations $x_i$ come from an ACD process. For the weekly data of [11], the ACD model is defined by

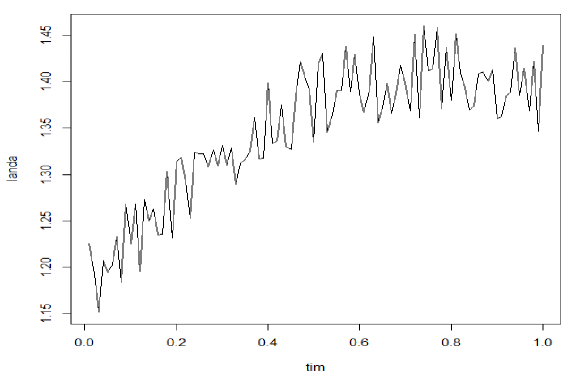

with $\varepsilon_i$ an exponentially distributed random variable with rate 1. Hence, the empirical distributions of the partial sums of the durations are computed. Next, using the Monte Carlo method proposed in Subsection 2.2, the probabilities $P(N(t)=k)$ are computed for various values of $t$. Then, using the Newton-Raphson method and numerical differentiation, the values of $\Lambda(t)$ and $\lambda(t)$ are computed, respectively. The following figure gives the plot of $\lambda(t)$. Smoothing with a Fourier basis, it is seen that

Although real tick-by-tick data are difficult to obtain in practice, Case 3 provides an alternative method of reconstruction. For the weekly data of [11], the following smoothing results are obtained:

where the component functions are periodic with period 7 and are given in the following table:

| $t$ (day) | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
|  | 0.2 | 0.2 | 0.5 | 0.5 | 0.1 | 0.25 | 0.25 |
|  | 0.1 | 0.1 | 0.5 | 0.25 | 0.5 | 0.2 | 0.5 |
|  | 0.2 | 0.2 | 0.1 | 0.1 | 0.1 | 0.25 | 0.25 |
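A least-squares Fourier smoother with period 7, as used for the weekly data, can be sketched as below. The number of harmonics and the function names are illustrative assumptions. The sketch is not the exact smoother that produced the values in the table.

```python
import numpy as np

def fourier_design(t, n_harmonics, period):
    """Design matrix [1, sin(j*w*t), cos(j*w*t)], w = 2*pi/period, j = 1..n_harmonics."""
    w = 2.0 * np.pi / period
    cols = [np.ones_like(t)]
    for j in range(1, n_harmonics + 1):
        cols.append(np.sin(j * w * t))
        cols.append(np.cos(j * w * t))
    return np.column_stack(cols)

def fourier_smooth(t, y, n_harmonics, period=7.0):
    """Least-squares Fourier smoothing; returns (coefficients, smoothed values)."""
    X = fourier_design(t, n_harmonics, period)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, X @ coef
```

Increasing `n_harmonics` makes the fit more flexible. This parameter plays the same role as the number of basis functions in Section 2.1.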

4. Real data sets

Here, the method of Section 2.3 is applied to three real time series.

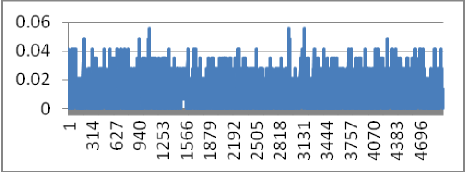

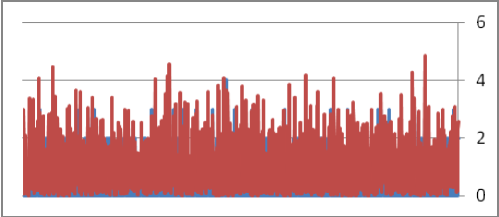

Data set 1. The dataset includes 11520 observations of ATM transactions, recorded in 15-minute intervals, at a selected branch of an Iranian bank. The bank is called ABC here and is not named for security reasons. The data run from March 11, 2024 to October 11, 2024 (30 days), between 7 AM and 7 PM. Each day, from 7 AM to 7 PM, therefore contains 48 fifteen-minute intervals. The time series plot of the first 5000 observations is given as follows:

The model is fitted to each real high-frequency data set in four steps:

1. The model is fitted to the data.
2. The optimal parameters are obtained.
3. The real data and the fitted model are plotted on the same figure.
4. The corresponding residuals are analyzed.

The following plot shows simultaneously the actual time series (blue line) and its estimate (red line).

The following table gives the maximum, minimum, mean and standard deviation (SD) of the 5000 residuals.

The table shows that the residuals are small; the largest is 0.0632.

| Max | Min | Mean | SD |
|---|---|---|---|
| 0.0632 | 0.0024 | 0.0414 | 0.019 |

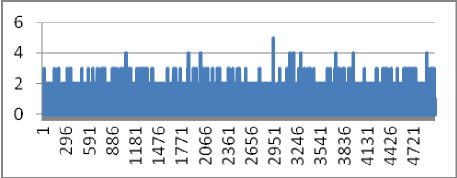

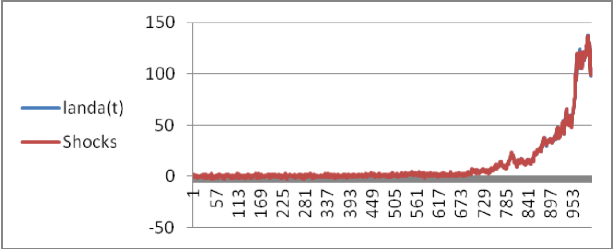

Data set 2. In the second data set, the daily transactions of an ATM are recorded for 6336 days. First, the following ACD model is fitted to the durations of the transactions:

The following plot shows the real series against its estimate. The estimate is derived from the above ACD model by simulating a Poisson process from the partial sums of the simulated durations $x_i$. For clarity, only the first 3000 observations are shown: the actual series (blue line) and its estimate (red line). The figure shows that the two series are very close.

Again, summary statistics of the errors are given in the following table.

| Max | Min | Mean | SD |
|---|---|---|---|
| 0.0475 | 0.0064 | 0.0325 | 0.032 |

A Poisson process is also fitted to the data, based on the functional estimate of $\lambda(t)$ given by

The following table gives the related errors.

| Max | Min | Mean | SD |
|---|---|---|---|
| 0.0734 | 0.0055 | 0.0455 | 0.043 |

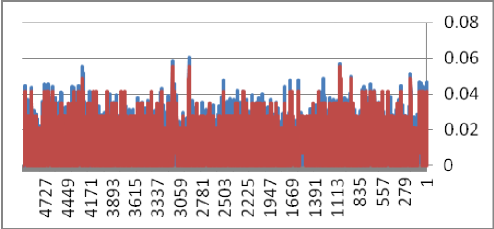

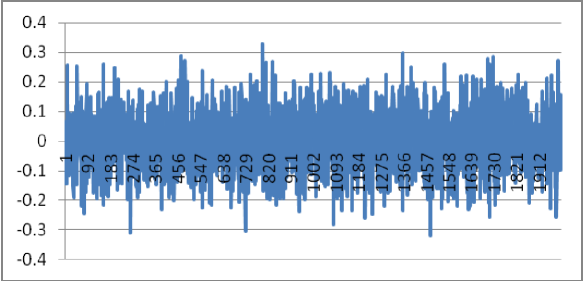

Data set 3. Here, the functional form of the intensity of the previous data set is compared with its actual values. First, the following table shows that the errors are negligible. Then, different scenarios are studied, using shocks to the intensity of one standard deviation of the errors. The following figure shows the errors between the actual intensity and its functional estimates. The shocks are simulated from normal distributions with zero mean and a standard deviation equal to one standard deviation of the errors.

As seen, the shocks grow as the length of the time series increases. However, their effect on the estimate is negligible.
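The shock scenarios of Data set 3 can be sketched as follows. Independent normal shocks are added to the estimated intensity. They have mean zero and a standard deviation equal to one standard deviation of the fitting errors (more generally, `n_sd` standard deviations). The function name is hypothetical. Any fitted curve and residuals passed to it in a trial run should be synthetic placeholders, not the bank data.

```python
import numpy as np

def shock_scenarios(lam_hat, residuals, n_scenarios, n_sd=1.0, seed=0):
    """Add N(0, (n_sd * sd)^2) shocks to a fitted intensity; one row per scenario."""
    rng = np.random.default_rng(seed)
    sd = n_sd * np.std(residuals, ddof=1)        # SD of the fitting errors
    shocks = rng.normal(0.0, sd, size=(n_scenarios, lam_hat.size))
    return lam_hat[None, :] + shocks
```

Each row is one shocked intensity curve. Passing each row through the estimation step and comparing the results shows how sensitive the final estimate is to errors of this size.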

5. Concluding Remarks

The main contributions of this manuscript are as follows:

1. The compatibility of combining the two common models used in the analysis of high-frequency data was examined. The simulation section showed that each of the two models can be recovered from the other.
2. Functional data analysis was used as a practical solution for modeling the intensity function of the Poisson process. Its performance was examined alongside the two previous methods.
3. Mathematical models were developed that are useful for simulation analysis.

Acknowledgements.

The author thanks the referees and the editor for their useful remarks, which contributed to improving the quality of this article.

References

- [1] M. Belfrage, Package ACDm: tools for autoregressive conditional duration models, 2022. https://cran.r-project.org/web/packages/ACDm/index.html


- [2] T.T. Cai and P. Hall, Prediction in functional linear regression, Ann. Statist., 34(2006), pp. 2159–2179. http://dx.doi.org/10.1214/009053606000000830


- [3] D.J. Daley and D. Vere-Jones, An Introduction to the Theory of Point Processes, Springer, 2012.

- [4] R.F. Engle and J.R. Russell, Forecasting transaction rates: the autoregressive conditional duration model, UCSD Discussion Paper, 1995.

- [5] M. Febrero-Bande and O.M. de la Fuente, Statistical computing in functional data analysis: the R package fda.usc, J. Stat. Softw., 51(2012), pp. 1–28. https://doi.org/10.18637/jss.v051.i04


- [6] N. Hautsch, Modeling Irregularly Spaced Financial Data, Springer, 2004.

- [7] L. Horváth and P. Kokoszka, Inference for Functional Data with Applications, Springer, 2012.

- [8] A. Laukaitis and A. Rackauskas, Functional data analysis of payment systems, Nonlinear Analysis: Modelling and Control, 7(2002), pp. 53–68.

- [9] U.A. Müller, Volatility computed by time series operators at high frequency, Working Paper, Nuffield College, Oxford, 2000. https://doi.org/10.1142/9781848160156_0007


- [10] J.O. Ramsay and B.W. Silverman, Functional Data Analysis, Springer, 2006.

- [11] T.H. Rydberg and N. Shephard, A modeling framework for the prices and times of trades at the NYSE, Working Paper, Nuffield College, Oxford, 1999. https://dx.doi.org/10.2139/ssrn.164170
