A comparative study of Filon-type rules

for oscillatory integrals

Abstract.

Our aim is to answer the following question: “Among the Filon-type methods for computing oscillatory integrals, which one is the most efficient in practice?”. We first discuss why we should seek the answer among the family of Filon-Clenshaw-Curtis rules. A theoretical analysis accompanied by a set of numerical experiments reveals that the plain Filon-Clenshaw-Curtis rules reach a given accuracy faster than the (adaptive) extended Filon-Clenshaw-Curtis rules. The comparison is based on the CPU run-time for certain wave numbers (medium and large).

Key words and phrases:

oscillatory integral, Filon-Clenshaw-Curtis rule, extended FCC rule, adaptive FCC+ rule.2005 Mathematics Subject Classification:

65D32, 65Y201. Introduction

Consider the oscillatory integral

| (1) |

where is a given smooth function on , and the wave number is rather large. Filon-type methods, in brief, reads as , where is a polynomial approximation of in . In details, the interpolation polynomial is generally expanded as

| (2) |

where is a polynomial of order for each . The scalars , for all , are computed in the construction phase of . Thus, the Filon-type method is expanded as

| (3) |

where are the moments to be evaluated.

Certainly, accuracy of a Filon-type method is proportional to the accuracy of the corresponding polynomial approximation because

| (4) |

In practice, what is the best choice of of a given degree? Even if we restrict our investigation to interpolation, there are a tremendous number of interpolation polynomials approximating a smooth function satisfactory, e.g. splines, Hermite-Birkhoff interpolation, and those based on orthogonal polynomials and their roots or extrema. The main question motivating this research is, which interpolation is the best choice for Filon-type methods? Beside the accuracy of the interpolation polynomial on , there are mainly three other aspects that affect the efficiency of the Filon-type method in practice: (i) the cost of construction of , (ii) the cost of computing the moments, (iii) and the order of interpolation at the endpoints .

In general, Lagrange interpolation at arbitrary points is rather costly. However, in [6], a rapid and stable construction method is proposed based on the fast multipole method (FMM) of [8]. Instead of an arbitrary set of interpolation points, however, one prefers roots or extrema of orthogonal polynomials and utilize FFT for better performance. Among several kinds of orthogonal polynomials, Chebyshev polynomials are often used mainly because their roots and extrema are in the most simple and explicit forms.

For fast computing the moments, one prefers polynomials which satisfy a recurrence relation. Then the moments can be computed recursively with few computational cost. Orthogonal polynomials not only can be constructed efficiently by FFT, but also they satisfy three-term recurrence relations. Assume that in (2) satisfies the three-term recurrence relation

| (5) |

where and are known scalars with for all . The first two moments can easily be computed analytically. Then, the other ones are computed by (5), recursively:

| (6) |

On the other hand, the asymptotic expansion of the oscillatory integral turns out that the value of is mostly determined by its amplitude, , and their derivatives up to some order at the endpoints . More precisely, if is smooth enough in , and

| (7) |

for some integer , then

| (8) |

(see, e.g., [11]). Therefore, not only the accuracy of the interpolation, i.e. , is important for the total accuracy of a Filon-type rule , but also its order at the endpoints.

The conditions (7) implies an example of Hermite-Birkhoff interpolation, which construction rarely utilizes fast algorithms like FFT or FMM. Interpolation at the Clenshaw-Curtis points , , have all the above advantages. Because of the distribution of the Clenshaw-Curtis points in , interpolation at them is most accurate and numerically stable. Moreover, thanks to the three-term recurrence relation for the Chebyshev polynomials, it can stably be constructed by FFT at the cost of (see [5]). In addition, the computational cost for computing , , is negligible. Finally, having the Lagrange interpolant at the Clenshaw-Curtis, one can efficiently construct the corresponding Hermite-Birkhoff interpolant that satisfies (7), too (see [15]).

Therefore, without doubt, Filon-Clenshaw-Curtis (FCC) rules and their extensions are the most efficient Filon-type rules for computing the oscillatory integral (1). Extended FCC (FCC+) rules and their adaptive versions can be constructed efficiently by the algorithms of [15] and [14], respectively. To the best of our knowledge, they are the most efficient algorithms for constructing the FCC+ and adaptive FCC+ rules so far.

In comparison with the algorithm of [5] for constructing the FCC rules, the algorithms of [15] and [14] are more complex. This difference in the computational costs may be negligible when the maximum order of derivatives in the FCC+ rules is rather small. On the other hand, the asymptotic order of an (adaptive) FCC+ rule is better than that of the corresponding FCC rule. Actually, one cannot readily answer which algorithm is more efficient, and this question naturally triggers a comparative study. Indeed, this paper fills a gap in the literature and help in the comparison between Filon-type methods.

In this paper, we carry out a set of numerical experiments to compare efficiency of FCC, FFC+, and adaptive FCC+. Actually, we are interested in the CPU run-time to achieve a specific accuracy. We apply these three classes of rules to a set of oscillatory integrals with different regularity characteristics. Since FCC is less complex than the other rules, one can use more interpolation points for the same computational effort as (adaptive) FCC+.

The rest of the paper is organized as follows. In Section 2, we carry out a set of numerical experiments in order to compare the efficiency of the FCC and the FCC+ rules. In Section 3, the same is done for the FCC and the adaptive FCC+ rules. We also modify Theorem 2.2 of [14] and give a more practical error bound for the adaptive FCC+ rules that is explicit in and the rule parameters. In Section 4, we account several other advantages of the FCC rules over the (adaptive) FCC+ ones. Finally, we bring some conclusions in Section 5.

2. FCC vs FCC+

In this section, we compare the efficiency of the FCC and FCC+ rules. In contrast to the common ideas in the literature, we see that the FCC+ rules have no advantages over the plain FCC rules in practice. At first, we give a brief review of the FCC+ rules (see [7, 15]).

2.1. A review of the FCC+ rules

The ()-point FCC+ rule of order is defined by

| (9) |

where is the degree Hermite interpolation polynomial satisfying the following interpolation conditions (see [15]):

| (10a) | ||||

| (10b) | ||||

Then, the asymptotic error of the rule will be

| (11) |

see, e.g., [11].

An important advantage of the FCC rules over the FCC+ ones is that there are error estimates for the FCC rules, which are applicable even if is of confined regularity. Furthermore, they are explicit in both and (see [4, 5, 20, 21]). Such error bounds do not exist for the FCC+ rules so far (see [15] for details).

In [7], an efficient algorithm is proposed for constructing the FCC+ rule (9) that can be implemented by flops. A more efficient algorithm was later proposed in [15]. One advantage of the latter is that its computational cost can be determined precisely. By the algorithm of [15], one needs flops to extend the ()-point FCC rule to the corresponding FCC+ rule of order in a natural way. Thus, beside its simplicity and naturality, the algorithm of [15] enables one to compare computational costs of the two rules easily. The algorithm for constructing the ()-point FCC+ rule of order leads to two linear systems of order . However, the FCC+ rules of order 1 can be stated explicitly, and one only needs about extra flops to build it from the corresponding ()-point FCC rule.

2.2. The race for convergence speed

Error estimate (11) turns out that for a fixed , the asymptotic decaying rate of the error of is higher than that of , and it grows by . On the other hand for rather large , the complexity of the FCC rule, , is comparable to that of the FCC+ rule, because is usually taken rather small in practice. Thus, some authors conclude that the FCC+ rules are always the winner in the competition with the FCC rules. According to [3],“the generalization of FCC … enjoys all the advantages of ‘plain’ FCC … in cost and simplicity, while arbitrarily increasing the asymptotic rate of decay of the error” (p. 66).

In this section, we show that such claims are in doubt in practice. Although, it may be required in some applications to compute for several and , but each time, we face the problem of computing one integral with a fixed parameter and a fixed amplitude function . The FCC+ rule approximates the integral to a specific accuracy for some rather large integers and . As mentioned above, the FCC rule with the same generally achieves less accuracy. However, we may choose larger integer and achieve the same accuracy or even better while the computational cost does not exceed that of . Recall from [15] that the FCC+ rule is more complex than its corresponding FCC rule by at least flops. Now assume that is the exact number of flops for constructing by the algorithm proposed in [5]. Thus, in order to compare the simplicity of the two rules and , we should compare the two numbers and

Even if we compute the number of flops for construction of the two rules exactly, error estimates (specially for the FCC+ rules) are not so sharp that the accuracy of the rules can be estimated a priori for a given and . For practical examining the efficiency of an approximation method (or rule), one can consider the run-time that the method consumes in a specific machine to reach a given accuracy.

In the following, we apply FCC and FCC+ to a set of oscillatory integrals (1), covering wave numbers from moderate to quite large values. We try to have at least one sample of the amplitude function in each class of , for some integer , elementary functions, and non-elementary functions. In each method, we choose as the minimum number of interpolation points to achieve a given accuracy. As discussed above, this number for FCC is generally larger than that of FCC+.

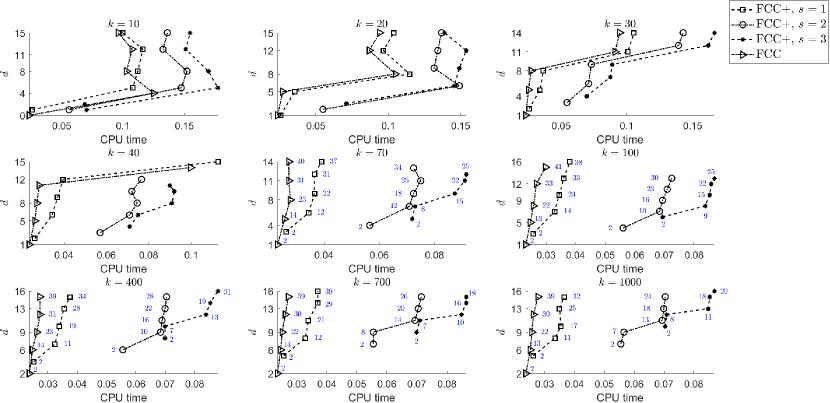

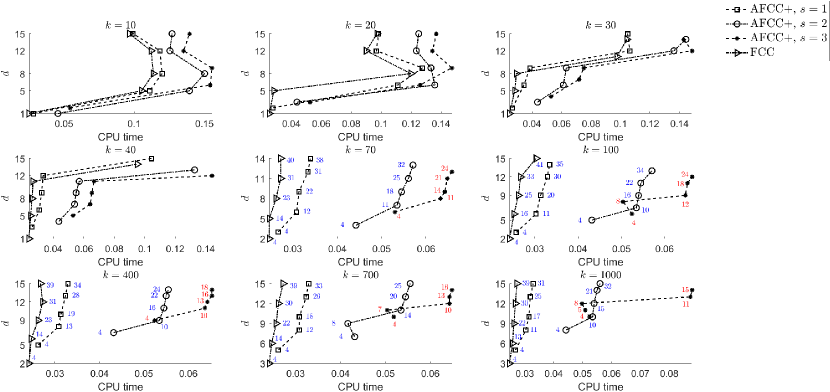

Experiment 1.

Let , where is the relative error of an approximation. Indeed, is roughly the number of correct significant digits in the approximation. In Fig. 1, we plotted versus the machine run-time (in milliseconds) for the FCC and FCC+ rules (9) of orders when applied to with and some wave numbers ranging from small to quite large values. Note that each time an algorithm is run by a specific machine, the run-time may vary a little mainly because of invisible services running in the background on the operating system (OS). For that reason, in all the numerical experiments in the sequel concerning run-times, we run the corresponding Matlab algorithm ten times and consider the minimum of the run-times. For , for which the plots have space enough, we label each marker by the corresponding . All the Matlab codes have been optimized in order to use less memory and take less time to execute.

Although, the derivatives of have been precalculated manually to the advantage of FCC+, our numerical experiments turn out that FCC are still more efficient than FCC+, especially for moderate and larger . Also, among the FCC+ rules, those with smaller are more efficient. This observation is against some authors’ claims that the FCC+ rules are ‘the winner’ just because the asymptotic decaying rates of their error are higher than those of the FCC rules. As it is seen, for a specific , accuracy of the FCC+ rules is higher than that of the FCC rule, and this accuracy enhances as grows. For example, in the last plot corresponding to , the 31-point FCC rule, the 26-point FCC+ rule of order 1, the 19-point FCC+ rule of order 2, and the 12-point FCC+ rule of order 3 reach approximately 13 correct significant digits. However, the FCC rules ultimately reach a given accuracy faster than all the FCC+ rules.

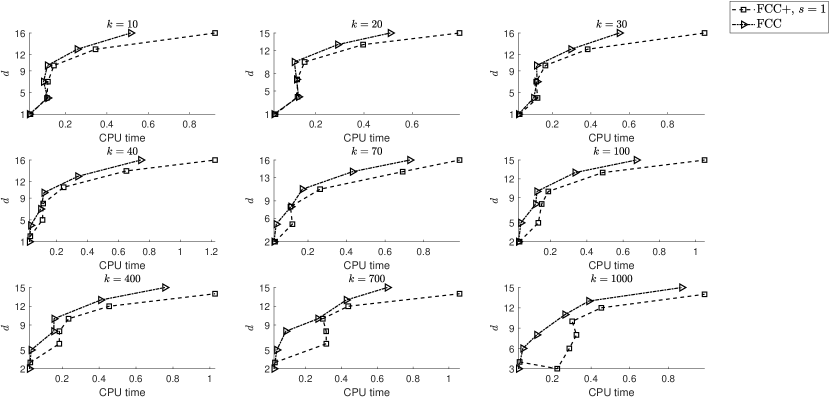

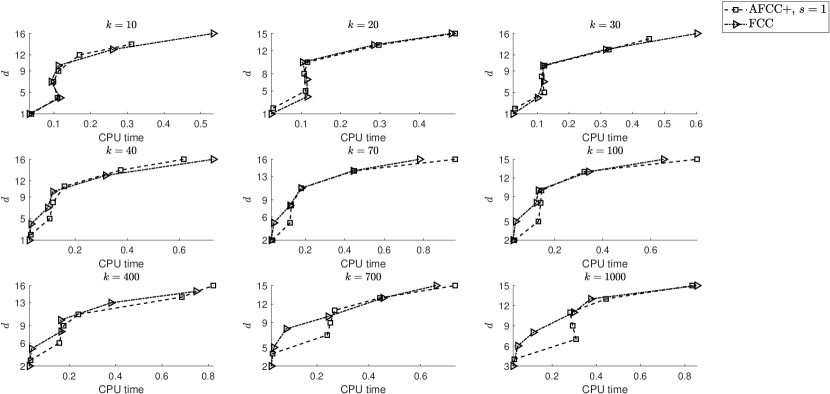

Experiment 2.

The amplitude function in the previous experiment is infinitely differentiable. Here, we consider , , for which only the first derivative exists on . Thus, the parameter in the FCC+ rules cannot exceed 1. In Fig. 2, we have plotted the accuracy versus the machine run-time (in milliseconds) for the FCC rules and the FCC+ rules of order 1. Because in this example the degree of regularity is low, the rules reach a given accuracy only for larger .

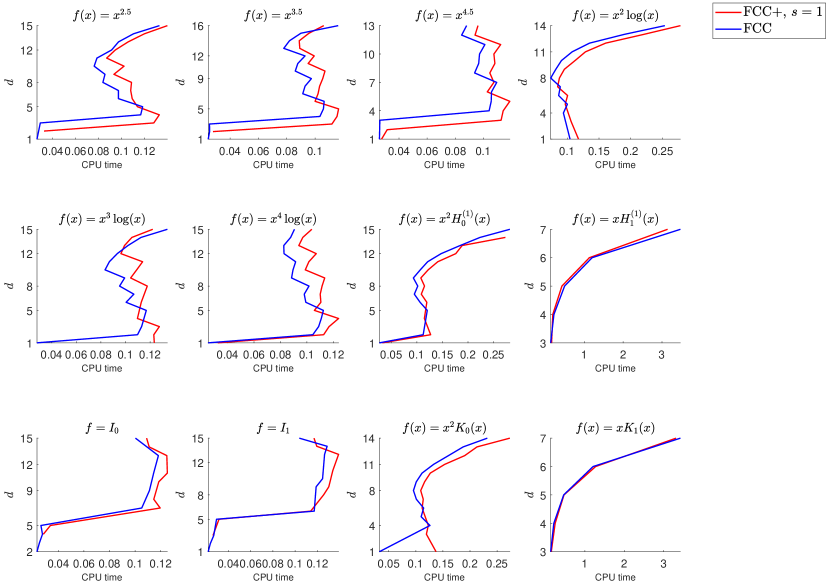

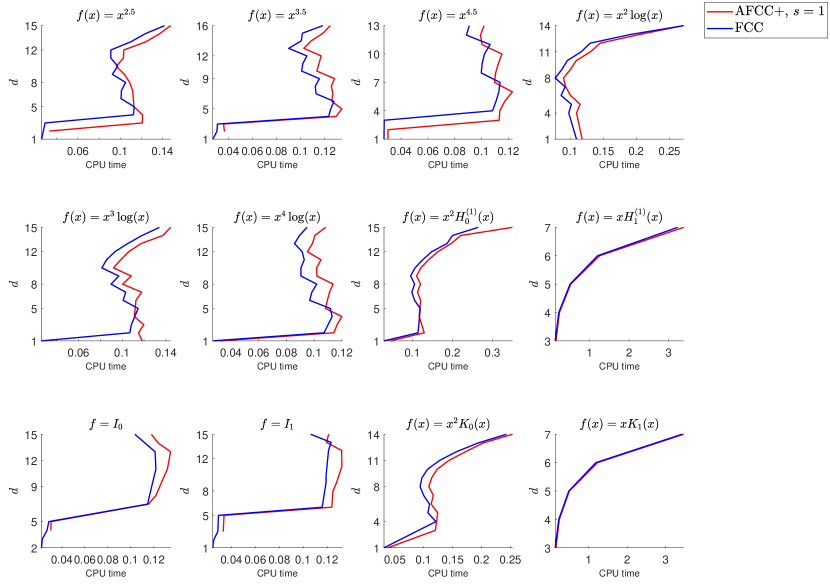

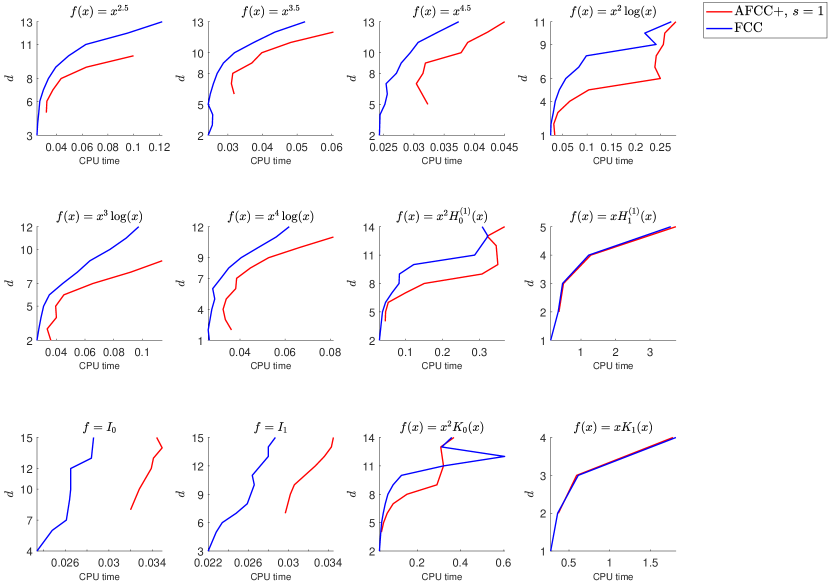

Experiment 3.

In order to see if the above experimental results hold for other amplitude functions , here we choose a set of elementary and non-elementary functions of various types on :

Here, , , and are the Hankel function of the first kind, the modified Bessel function of the first kind, and the modified Bessel function of the second kind of order , respectively. is smooth on while each of the other functions has one and only one singularity at zero. The singularity is bounded and of algebraic or logarithmic type.

3. FCC vs Adaptive FCC+

In this section, we compare the efficiency in practice of the FCC and the adaptive FCC+ rules of [14]. An adaptive FCC+ rule and its corresponding FCC+ rule always have the same asymptotic order while the former is derivative-free and less complex. We will see, however, that in the race to achieve a given accuracy, the adaptive FCC+ rules are still overtaken by the corresponding FCC rules. At first, we give a brief review of [14], where the concept of adaptive FCC+ rules has been introduced for the first time.

3.1. A review of the adaptive FCC+ rules

The ()-point adaptive FCC+ rule of order is defined by

| (12) |

where is the Lagrange interpolant of at , for , and extra points , , , that should be near the endpoints and are defined as follows:

| (13) |

As mentioned above, an adaptive FCC+ rule preserves the asymptotic order of the corresponding FCC+ rule. More precisely, we have the following result proved in [14]:

Theorem 1.

Assume that . Then,

| (14) |

as .

In contrast to the FCC+ rules, there are error bounds for the adaptive FCC+ rules that are explicit in all the three parameters , , and .

Corollary 2.

Assume that , and . Then,

| (15) |

for all .

Proof.

In [14], it was shown numerically that, adaptive FCC+ is always more efficient than FCC+. However, our numerical experiments in the next section show that it is still overtaken by FCC is the race to achieve a given accuracy.

3.2. The race for convergence speed

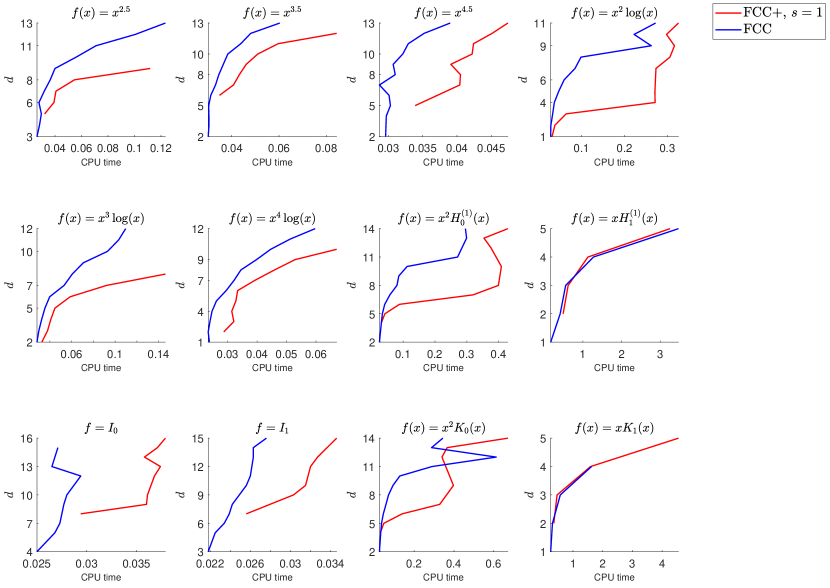

In this section, we repeat the numerical experiments of Section 2.2, now by the adaptive FCC+ instead of the FCC+ rules. The same discussion is applicable here, too. In Fig. 5–Fig. 8, we compare convergence rates of the adaptive FCC+ rules and the corresponding FCC rules. The results are the same as in Fig. 1–Fig. 4, and FCC is always the winner.

4. Other advantages of FCC rules

The FCC rules have further advantages over the FCC+ and adaptive FCC+ rules. In this section, we account some of them.

-

(1)

Composite FCC rules can readily be developed [4] while the composite versions of the (adaptive) FCC+ rules require many data within the integration interval. In the case of FCC+ rules, one should compute derivatives of the amplitude function at some (or many) internal points in addition to the endpoints. This can increase the computational cost most largely.

-

(2)

One can apply composite FCC rules with suitably graded meshes and treat oscillatory integrals with algebraic or logarithmic endpoint singularities [4]. Trying to apply this idea to the FCC+ rules, one should compute derivatives of the amplitude function at some (or many) points clustered near the singular endpoint. In the case of adaptive FCC+ rules, we will have a bunch of points clustered near the endpoints of each subinterval. Thus, in the vicinity of the singular endpoint, the density of the points may become so high that rounding error pollutes the total accuracy.

-

(3)

When estimating the computational cost of the FCC+ rules in [15], it is assumed that the derivatives of the amplitude function have been precalculated. This is also the case in the numerical experiments of Section 2.2. However, in many situations it is impossible or awkward to differentiate manually [10]. It is well-known that computing derivatives (especially of high-order) by finite differences is subject to cancellation. For that reason, many special numerical differentiation methods has been developed, e.g. automatic differentiation [9, 18, 19], complex and multicomplex step differentiation [12, 16, 17], differentiation rules base on Cauchy integrals [2, 1], etc. Thus, computing the derivatives is not necessarily a trivial task and imposes an extra computational cost.

-

(4)

One can study the stability of the FCC rules [13], but such study can be sophisticated for (adaptive) FCC+ rules.

5. Conclusions

In this work, we carried out a benchmark of numerical experiments and observed that the (adaptive) FCC+ rules are most often overtaken by the plain FCC rules in the speed race to achieve a given accuracy. Availability of error estimates when the amplitude function is of confined regularity, capability to develop efficient composite rules, availability of a stability analysis, and being derivative-free are other features that make the FCC rules the most-efficient decisive-choice ones among other Filon-type methods for oscillatory integrals (1).

References

- [1] (2013) Optimal contours for high-order derivatives. IMA J. Numer. Anal. 33, pp. 403–412.

- [2] (2011) Accuracy and stability of computing high-order derivatives of analytic functions by Cauchy integrals. Found. Comput. Math. 11, pp. 1–63.

- [3] (2017) Computing highly oscillatory integrals. SIAM.

- [4] (2013) Filon-Clenshaw-Curtis rules for highly oscillatory integrals with algebraic singularities and stationary points. SIAM J. Numer. Anal. 51, pp. 1542–1566.

- [5] (2011) Stability and error estimates for Filon-Clenshaw-Curtis rules for highly oscillatory integrals. IMA J. Numer. Anal. 31, pp. 1253–1280.

- [6] (1996) Fast algorithms for polynomial interpolation, integration, and differentiation. SIAM J. Numer. Anal. 33, pp. 1689–1711.

- [7] (2017) A generalization of Filon-Clenshaw-Curtis quadrature for highly oscillatory integrals. BIT Numer. Math. 57, pp. 943–961.

- [8] (1987) A fast algorithm for particle simulations. J. Comput. Phys. 73, pp. 325–348.

- [9] (2008) Evaluating derivatives: principles and techniques of algorithmic differentiation. SIAM.

- [10] (2004) On quadrature methods for highly oscillatory integrals and their implementation. BIT Numer. Math. 44, pp. 755–772.

- [11] (2005) Efficient quadrature of highly oscillatory integrals using derivatives. Proc. R. Soc. Lond. A 461, pp. 1383–1399.

- [12] (2012) Using multicomplex variables for automatic computation of high-order derivatives. ACM Trans. Math. Softw. 38, pp. 1–21.

- [13] (2022) On the stability of Filon-Clenshaw-Curtis rules. Bull. Iran. Math. Soc. 48, pp. 2943–2964.

- [14] (2023) Adaptive FCC+ rules for oscillatory integrals. J. Comput. Appl. Math. 424, pp. 115012.

- [15] (2023) Efficient construction of FCC+ rules. J. Comput. Appl. Math. 417, pp. 114592.

- [16] (2003) The complex-step derivative approximation. ACM Trans. Math. Softw. 29, pp. 245–262.

- [17] (2014) Multicomplex Taylor series expansion for computing high order derivatives. Int. J. Appl. Math. 27, pp. 311–334.

- [18] (2010) Introduction to automatic differentiation and MATLAB object-oriented programming. SIAM Rev. 52, pp. 545–563.

- [19] (2013) An efficient overloaded method for computing derivatives of mathematical functions in MATLAB. ACM Trans. Math. Softw. 39, pp. 1–36.

- [20] (2010) Error bounds for approximation in Chebyshev points. Numer. Math. 116 (3), pp. 463–491.

- [21] (2015) On error bounds of Filon-Clenshaw-Curtis quadrature for highly oscillatory integrals. Adv. Comput. Math. 41, pp. 573–597.