Abstract

We obtain some new Shepard type operators based on the classical and the modified Shepard methods and on the least squares thin plate spline function. Given some sets of points, we compute representative subsets of knot points following an algorithm described by J. R. McMahon in 1986.

Authors

Babes-Bolyai University, Faculty of Mathematics and Computer Sciences

Keywords

Scattered data; Shepard operator; least squares approximation; thin plate spline; knot points.

Paper coordinates

Malina Andra, Catinas Teodora, Shepard operator of least squares thin-plate spline type, Stud. Univ. Babes-Bolyai Math. 66(2021), No. 2, 257–265

http://doi.org/10.24193/subbmath.2021.2.02

About this paper

Print ISSN

0252-1938

Online ISSN

2065-961X


Paper (preprint) in HTML form

Shepard operator of least squares thin-plate spline type

Key words and phrases:

Scattered data, Shepard operator, least squares approximation, thin plate spline, knot points.

1991 Mathematics Subject Classification:

41A05, 41A25, 41A80.

1. Preliminaries

One of the best suited methods for approximating large sets of data is the Shepard method, introduced in 1968 in [16]. It has the advantages of a small storage requirement and an easy generalization to additional independent variables, but it suffers from poor reproduction quality, low accuracy and a high computational cost relative to some alternative methods [15]. These drawbacks have motivated the search for new methods that improve it (see, e.g., [1]-[8], [17], [18]). In this paper we obtain some new operators based on the classical and the modified Shepard methods and on the least squares thin plate spline.

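Let $f$ be a real-valued function defined on $X \subset \mathbb{R}^2$ and $(x_i, y_i) \in X$, $i = 1, \dots, N$, some distinct points. Denote by $r_i(x, y)$ the distances between a given point $(x, y) \in X$ and the points $(x_i, y_i)$, $i = 1, \dots, N$. The bivariate Shepard operator is defined by

$$(S_\mu f)(x, y) = \sum_{i=1}^{N} A_{i,\mu}(x, y)\, f(x_i, y_i), \tag{1.1}$$

where

$$A_{i,\mu}(x, y) = \frac{\prod_{j=1,\, j \neq i}^{N} r_j^{\mu}(x, y)}{\sum_{k=1}^{N} \prod_{j=1,\, j \neq k}^{N} r_j^{\mu}(x, y)}, \tag{1.2}$$

with the parameter $\mu > 0$.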

It is known that the bivariate Shepard operator reproduces only the constants and that the resulting approximant has flat spots in the neighborhood of all data points.
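As an illustration only, the following Python sketch evaluates the classical Shepard operator using the equivalent inverse-distance form of the weights, $r_i^{-\mu} / \sum_k r_k^{-\mu}$, which coincides with (1.2) away from the nodes. The function name `shepard`, the sample nodes and the default value `mu=2.0` are assumptions made for this example and are not taken from the paper.

```python
import math

def shepard(x, y, nodes, values, mu=2.0):
    """Classical Shepard approximant at (x, y), weights r_i**(-mu) (normalized)."""
    num = 0.0
    den = 0.0
    for (xi, yi), fi in zip(nodes, values):
        r = math.hypot(x - xi, y - yi)
        if r == 0.0:
            # At a node the operator interpolates: return the data value.
            return fi
        w = r ** (-mu)
        num += w * fi
        den += w
    return num / den

# Hypothetical data: the four corners of the unit square.
nodes = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
values = [1.0, 2.0, 3.0, 4.0]

print(shepard(0.0, 1.0, nodes, values))            # value at a node
print(shepard(0.5, 0.5, nodes, values))            # point equidistant from all nodes
print(shepard(0.3, 0.7, nodes, [5.0] * 4))         # constant data
```

The last call illustrates the reproduction of constants: since the weights sum to one, constant data give back the same constant at every evaluation point.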

Franke and Nielson introduced in [10] a method for improving the accuracy in reproducing a surface with the bivariate Shepard approximation. This method has been further improved in [9], [14], [15], and it is given by

$$(Sf)(x, y) = \frac{\sum_{i=1}^{N} W_i(x, y)\, f(x_i, y_i)}{\sum_{i=1}^{N} W_i(x, y)}, \tag{1.3}$$

with

$$W_i(x, y) = \left[ \frac{(R_w - r_i)_+}{R_w\, r_i} \right]^2, \tag{1.4}$$

where $r_i$ is the distance from $(x, y)$ to the node $(x_i, y_i)$, $(\cdot)_+$ denotes the positive part, and $R_w$ is a radius of influence about the node $(x_i, y_i)$ that varies with $i$. $R_w$ is taken as the distance from node $i$ to the $j$th closest node to $(x_i, y_i)$, for $j > N_w$ ($N_w$ is a fixed value) and $j$ as small as possible within the constraint that the $j$th closest node is significantly more distant than the $(j-1)$st closest node (see, e.g., [15]). As mentioned in [11], this modified Shepard method is one of the most powerful software tools for the multivariate approximation of large scattered data sets.
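A minimal sketch of the modified Shepard operator with compactly supported weights of the form $[(R - r)_+ / (R\, r)]^2$ is given below. For simplicity the radii of influence are passed in by the caller instead of being selected by the nearest-node rule described above; this simplification, the function name `modified_shepard` and the sample data are assumptions of the example.

```python
import math

def modified_shepard(x, y, nodes, values, radii):
    """Modified Shepard approximant at (x, y) with weights ((R - r)_+ / (R r))**2."""
    num = 0.0
    den = 0.0
    for (xi, yi), fi, R in zip(nodes, values, radii):
        r = math.hypot(x - xi, y - yi)
        if r == 0.0:
            # The weight is singular at the node, where the data value is returned.
            return fi
        # Positive part: nodes farther away than R do not contribute.
        w = (max(R - r, 0.0) / (R * r)) ** 2
        num += w * fi
        den += w
    if den == 0.0:
        raise ValueError("point lies outside every radius of influence")
    return num / den

# Hypothetical data: the four corners of the unit square.
nodes = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
values = [1.0, 2.0, 3.0, 4.0]

print(modified_shepard(0.5, 0.5, nodes, values, [2.0] * 4))  # all nodes contribute
print(modified_shepard(0.1, 0.0, nodes, values, [0.6] * 4))  # only the nearest node contributes
```

With small radii only nearby nodes enter the sums, which is what makes the method local and keeps the cost per evaluation low for large data sets.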

2. The Shepard operators of least squares thin-plate spline type

Consider $f$ a real-valued function defined on $X \subset \mathbb{R}^2$ and $(x_i, y_i) \in X$, $i = 1, \dots, N$, some distinct points. We introduce the Shepard operator based on the least squares thin-plate spline in four ways.

Method 1.

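We consider

$$(S_1 L)(x, y) = \sum_{i=1}^{N} A_{i,\mu}(x, y)\, L_i(x, y), \tag{2.1}$$

where $A_{i,\mu}$, $i = 1, \dots, N$, are defined by (1.2), for a given parameter $\mu > 0$, and the least squares thin-plate splines are given by

$$L_i(x, y) = \sum_{j=1}^{i} C_j\, d_j^2 \log(d_j) + a x + b y + c, \quad i = 1, \dots, N, \tag{2.2}$$

with $d_j = \sqrt{(x - x_j)^2 + (y - y_j)^2}$.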

For the second way, we consider a smaller set of knot points that will be representative for the original set. This set is obtained by the following steps (see, e.g., [12] and [13]):

Algorithm 2.1.

1. Generate $k$ random knot points, with $k < N$;

2. Assign to each point the closest knot point;

3. If there exist knot points to which no point is assigned, move each such knot to the closest point;

4. Compute the next set of knot points as the arithmetic mean of all corresponding points;

5. Repeat steps 2-4 until the knot points do not change for two successive iterations.
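The steps above can be sketched in Python as follows. This is only an illustrative reading of the algorithm: drawing the initial knots uniformly in the bounding box of the data, the iteration cap `max_iter`, the `seed` argument and the name `select_knots` are assumptions of the example, and an empty knot is moved to its closest data point so that the move takes effect at the next assignment step.

```python
import math
import random

def select_knots(points, k, seed=0, max_iter=1000):
    """Return k representative knot points for a list of (x, y) data points."""
    rng = random.Random(seed)
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    # Step 1: k random knots inside the bounding box of the data (assumption).
    knots = [(rng.uniform(min(xs), max(xs)), rng.uniform(min(ys), max(ys)))
             for _ in range(k)]
    for _ in range(max_iter):
        # Step 2: assign each data point to its closest knot.
        groups = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda j: math.dist(p, knots[j]))
            groups[j].append(p)
        new_knots = []
        for j in range(k):
            if groups[j]:
                # Step 4: the new knot is the arithmetic mean of its points.
                n = len(groups[j])
                new_knots.append((sum(p[0] for p in groups[j]) / n,
                                  sum(p[1] for p in groups[j]) / n))
            else:
                # Step 3: a knot with no assigned point moves to the closest data point.
                new_knots.append(min(points, key=lambda p: math.dist(p, knots[j])))
        # Step 5: stop when the knots are unchanged between two successive iterations.
        if new_knots == knots:
            break
        knots = new_knots
    return knots

# Hypothetical data: two small groups of points.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
print(select_knots(points, 2))
```

The iteration has the same structure as Lloyd's k-means procedure, so the result depends on the random initial knots and may correspond to a local rather than a global optimum.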

Method 2.

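For a given $k \in \mathbb{N}^*$, we consider the representative set of knot points $(\hat{x}_j, \hat{y}_j)$, $j = 1, \dots, k$. The Shepard operator of least squares thin-plate spline is given by

$$(S_2 L)(x, y) = \sum_{i=1}^{k} A'_{i,\mu}(x, y)\, L'_i(x, y), \tag{2.3}$$

where $A'_{i,\mu}$, $i = 1, \dots, k$, are defined by

$$A'_{i,\mu}(x, y) = \frac{\prod_{j=1,\, j \neq i}^{k} {r'_j}^{\mu}(x, y)}{\sum_{l=1}^{k} \prod_{j=1,\, j \neq l}^{k} {r'_j}^{\mu}(x, y)},$$

for a given parameter $\mu > 0$, with $r'_j(x, y)$ denoting the distance between $(x, y)$ and the knot point $(\hat{x}_j, \hat{y}_j)$.

The least squares thin-plate splines are given by

$$L'_i(x, y) = \sum_{j=1}^{i} C'_j\, {d'_j}^2 \log(d'_j) + a' x + b' y + c', \quad i = 1, \dots, k, \tag{2.4}$$

with $d'_j = \sqrt{(x - \hat{x}_j)^2 + (y - \hat{y}_j)^2}$.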

For Methods 1 and 2, the coefficients of the splines are found so as to minimize the expressions

$$E = \sum_{i=1}^{N'} \left[ L_i(x_i, y_i) - f(x_i, y_i) \right]^2,$$

considering $N' = N$ for the first case and $N' = k$ for the second one. This leads to linear systems for the coefficients of the form given in, e.g., [12].
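To make the least squares step concrete, the sketch below fits a function of the form $\sum_j C_j d_j^2 \log d_j + a x + b y + c$ to scattered data, with the radial terms centered at a small set of knots, by solving the normal equations. This is a generic unconstrained least squares formulation chosen for illustration and is not claimed to be the exact system used in [12] or in this paper; the helper names (`tps`, `solve`, `basis_row`, `fit_tps`, `eval_tps`) and the sample data are hypothetical.

```python
import math

def tps(d):
    """Thin-plate radial term d^2 log d, with the limit value 0 at d = 0."""
    return 0.0 if d == 0.0 else d * d * math.log(d)

def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-14:
            raise ValueError("singular system")
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            t = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= t * M[c][j]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][j] * z[j] for j in range(r + 1, n))
        z[r] = (M[r][n] - s) / M[r][r]
    return z

def basis_row(x, y, knots):
    """Basis values [1, x, y, phi_1, ..., phi_k] at the point (x, y)."""
    return [1.0, x, y] + [tps(math.hypot(x - kx, y - ky)) for kx, ky in knots]

def fit_tps(points, values, knots):
    """Least squares coefficients [c, a, b, C_1, ..., C_k] via the normal equations."""
    B = [basis_row(x, y, knots) for x, y in points]
    m = len(B[0])
    BtB = [[sum(row[i] * row[j] for row in B) for j in range(m)] for i in range(m)]
    Btf = [sum(row[i] * f for row, f in zip(B, values)) for i in range(m)]
    return solve(BtB, Btf)

def eval_tps(coef, x, y, knots):
    """Evaluate the fitted spline at (x, y)."""
    return sum(c * b for c, b in zip(coef, basis_row(x, y, knots)))

# Hypothetical data: a linear function sampled on a 4 x 4 grid, with three knots.
points = [(i / 3, j / 3) for i in range(4) for j in range(4)]
values = [2 * x + 3 * y + 1 for x, y in points]
knots = [(0.25, 0.25), (0.75, 0.25), (0.5, 0.75)]
coef = fit_tps(points, values, knots)
print(eval_tps(coef, 0.3, 0.6, knots))
```

Because the basis contains $1$, $x$ and $y$, linear data are fitted with zero residual, which gives a simple sanity check. For larger or ill-conditioned problems a QR-based least squares solver would be preferable to the normal equations.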

Next we consider the improved form of the Shepard operator given in (1.3).

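Method 3.

We consider

$$(S_3 L)(x, y) = \frac{\sum_{i=1}^{N} W_i(x, y)\, L_i(x, y)}{\sum_{i=1}^{N} W_i(x, y)}, \tag{2.5}$$

with $W_i$ given by (1.4) and $L_i$ given by (2.2). The coefficients of $L_i$ are determined in order to minimize the expression

$$E = \sum_{i=1}^{N} \left[ L_i(x_i, y_i) - f(x_i, y_i) \right]^2.$$

Method 4.

For the representative set of knot points $(\hat{x}_j, \hat{y}_j)$, $j = 1, \dots, k$, we consider

$$(S_4 L)(x, y) = \frac{\sum_{i=1}^{k} W'_i(x, y)\, L'_i(x, y)}{\sum_{i=1}^{k} W'_i(x, y)}, \tag{2.6}$$

with $W'_i$ defined as in (1.4) with respect to the knot points and $L'_i$ given by (2.4). The coefficients of $L'_i$, $i = 1, \dots, k$, are determined in order to minimize the expression

$$E = \sum_{i=1}^{k} \left[ L'_i(\hat{x}_i, \hat{y}_i) - f(\hat{x}_i, \hat{y}_i) \right]^2.$$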

3. Numerical examples

Table 1 contains the maximum errors for approximating the functions (3.1) by the classical and the modified Shepard operators given, respectively, by (1.1) and (1.3), and the errors of approximating by the operators introduced in (2.1), (2.3), (2.5) and (2.6). We consider three sets of random points for each function in , knots, and .

Remark 3.1.

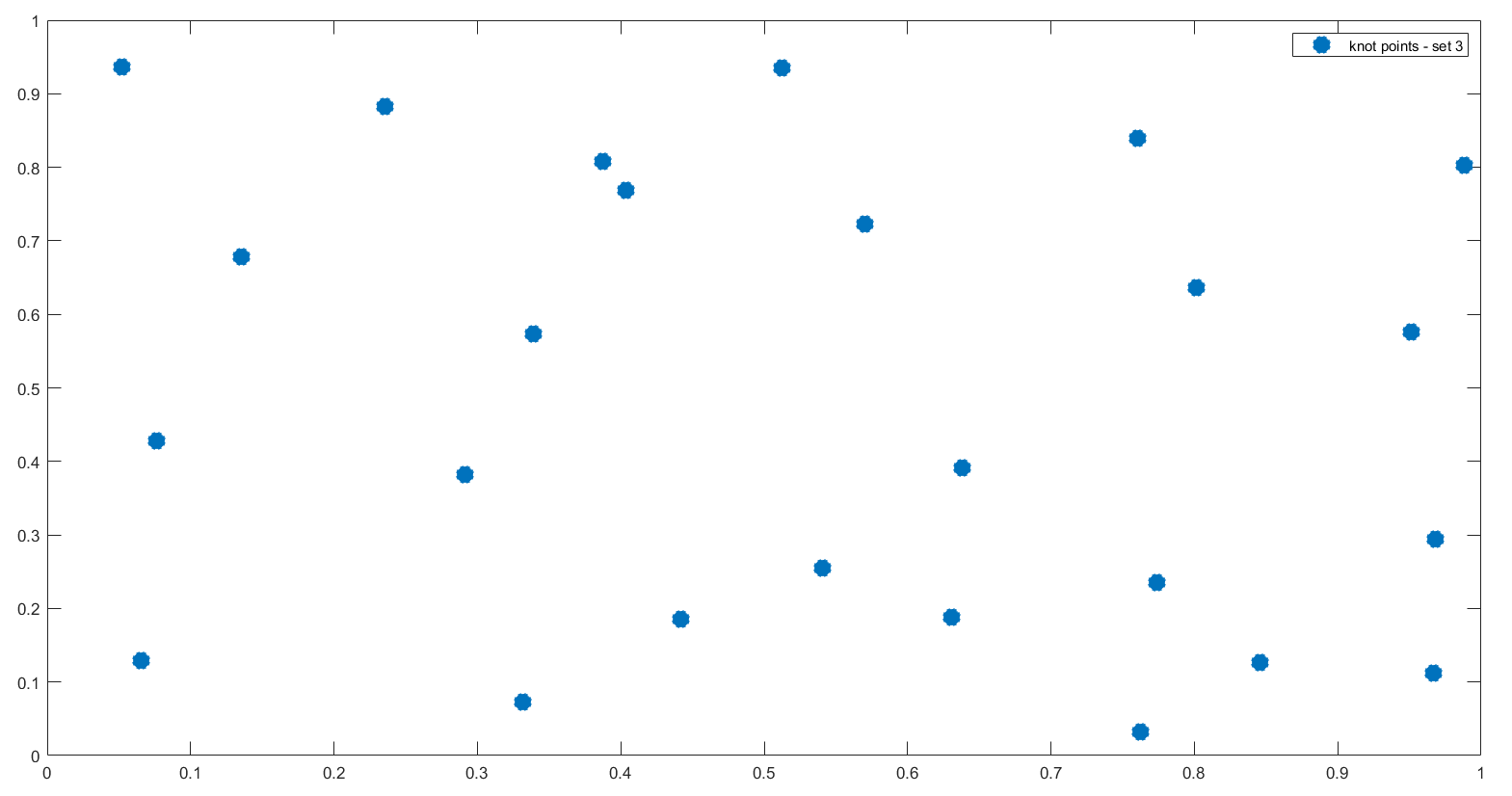

The approximants obtained by Methods 2 and 4 have better approximation properties than those obtained by Methods 1 and 3, although they use a smaller number of knot points. This illustrates the benefit of the algorithm for choosing the representative set of points.

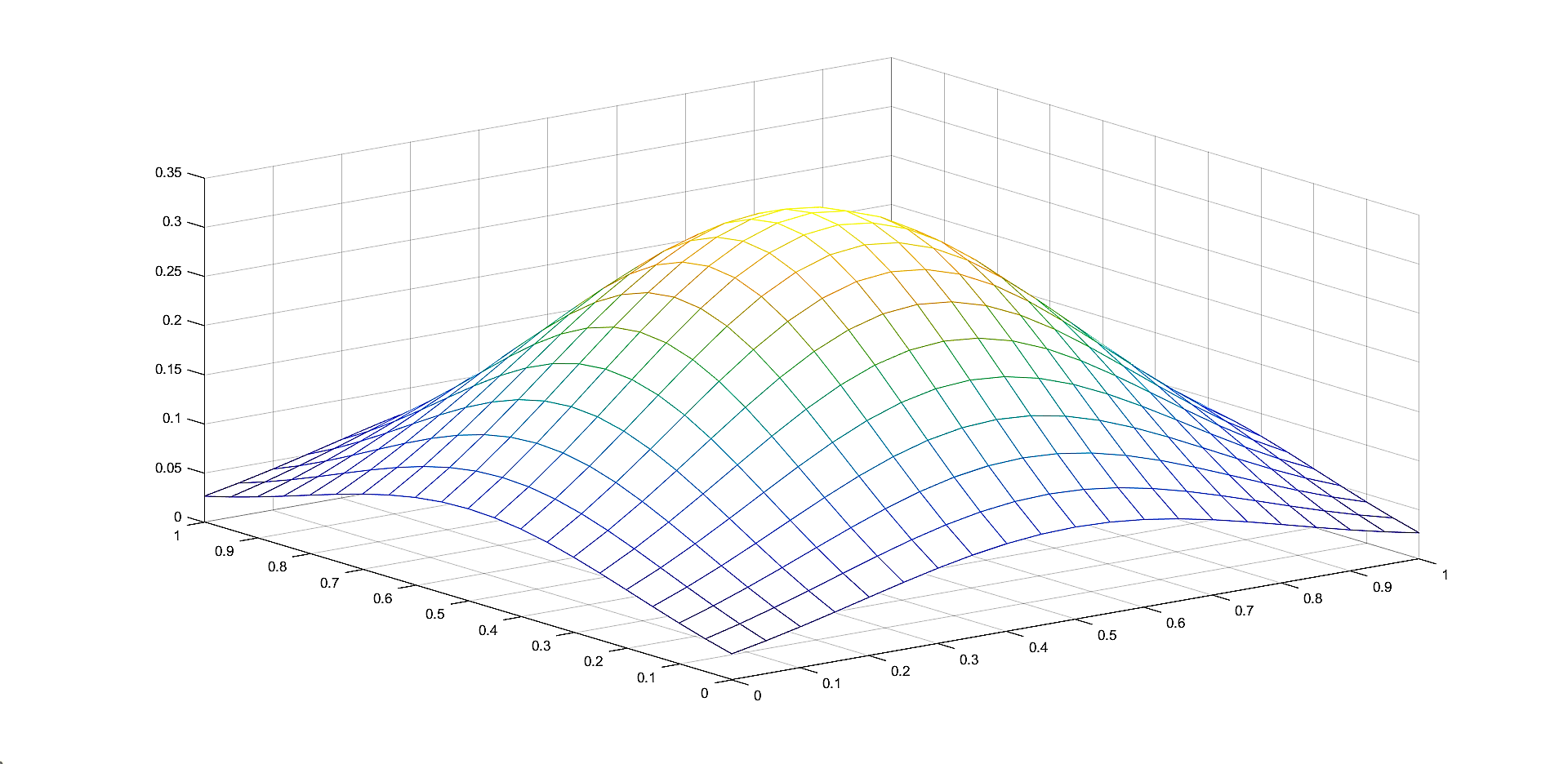

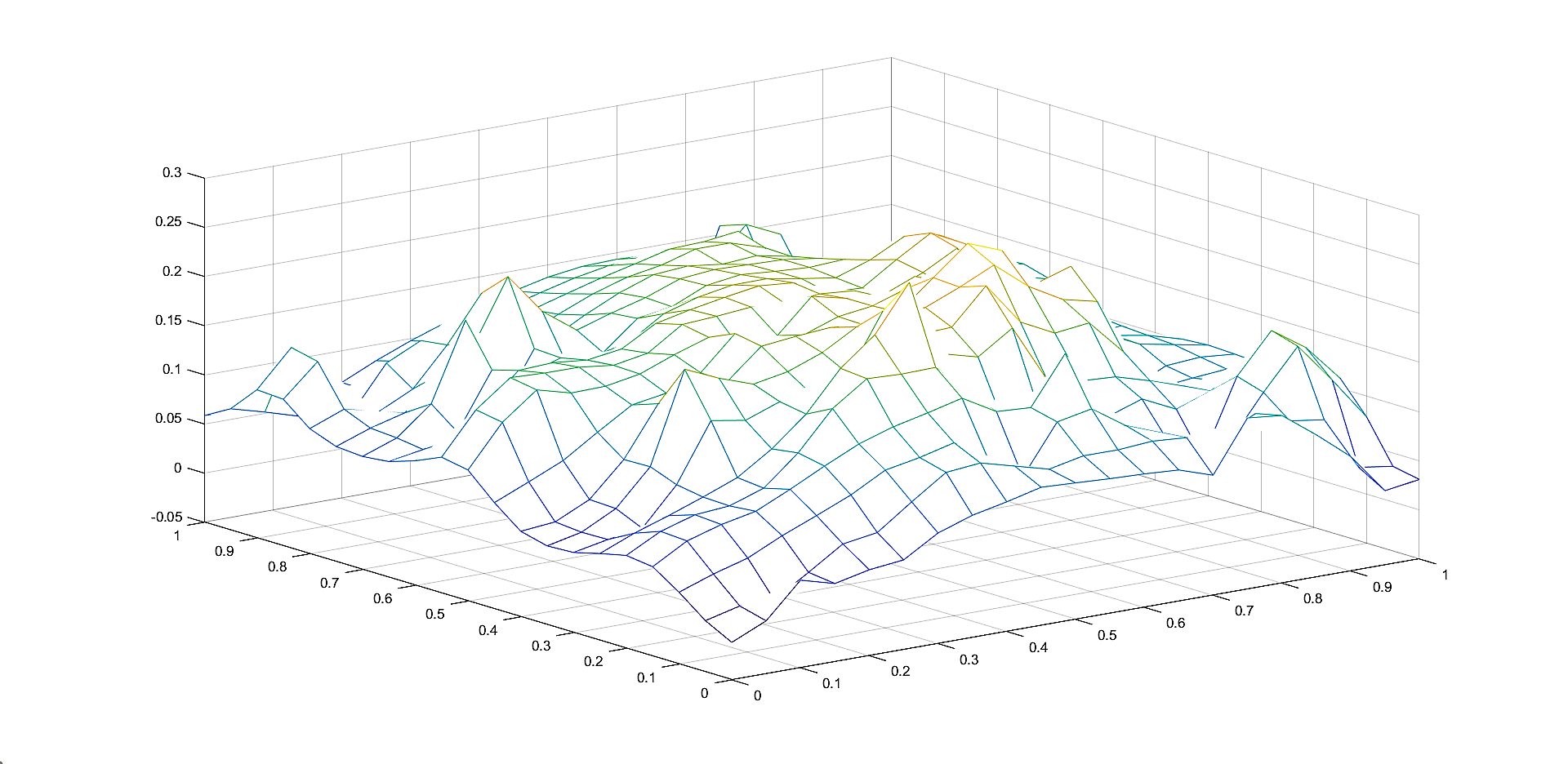

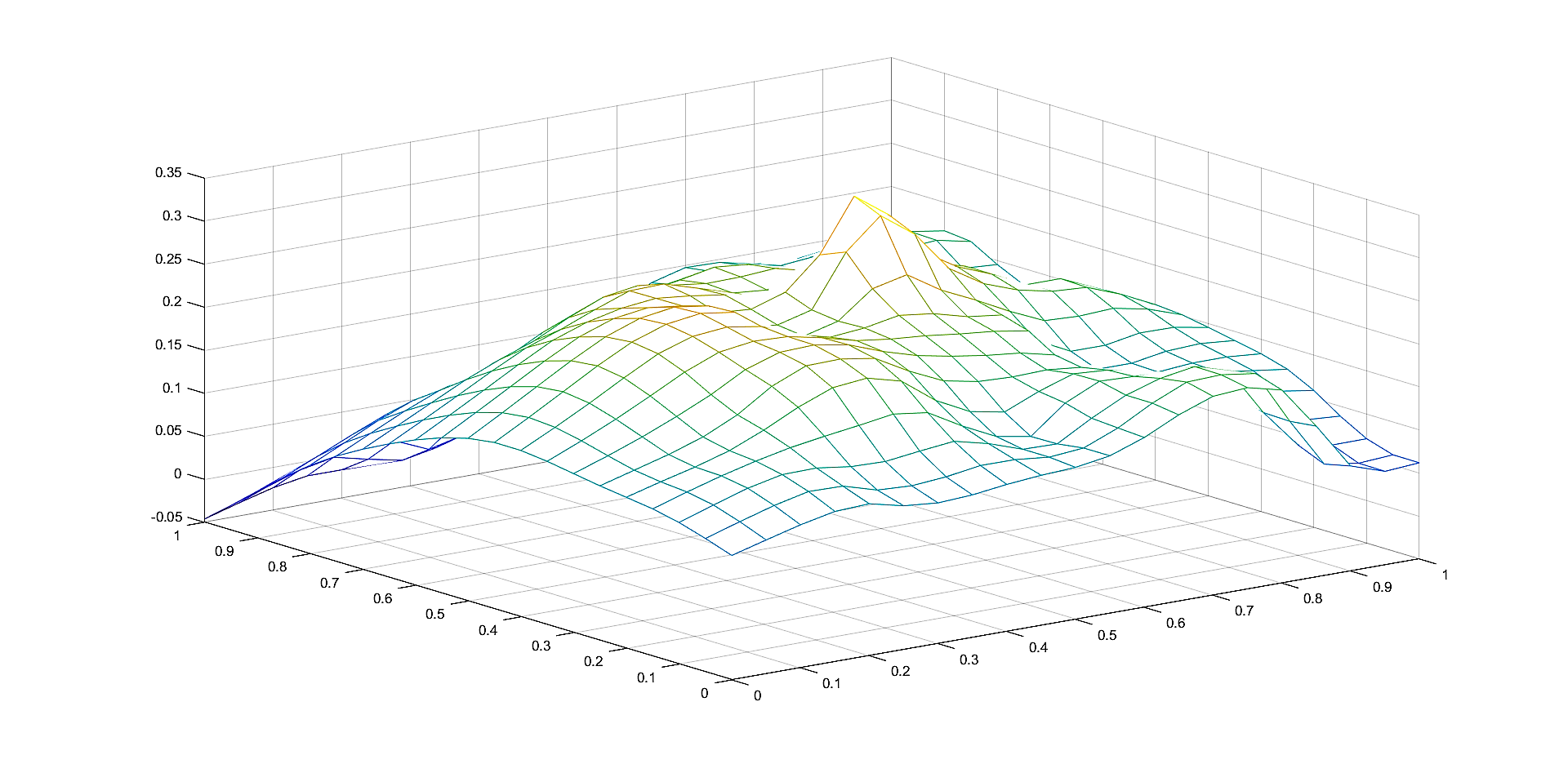

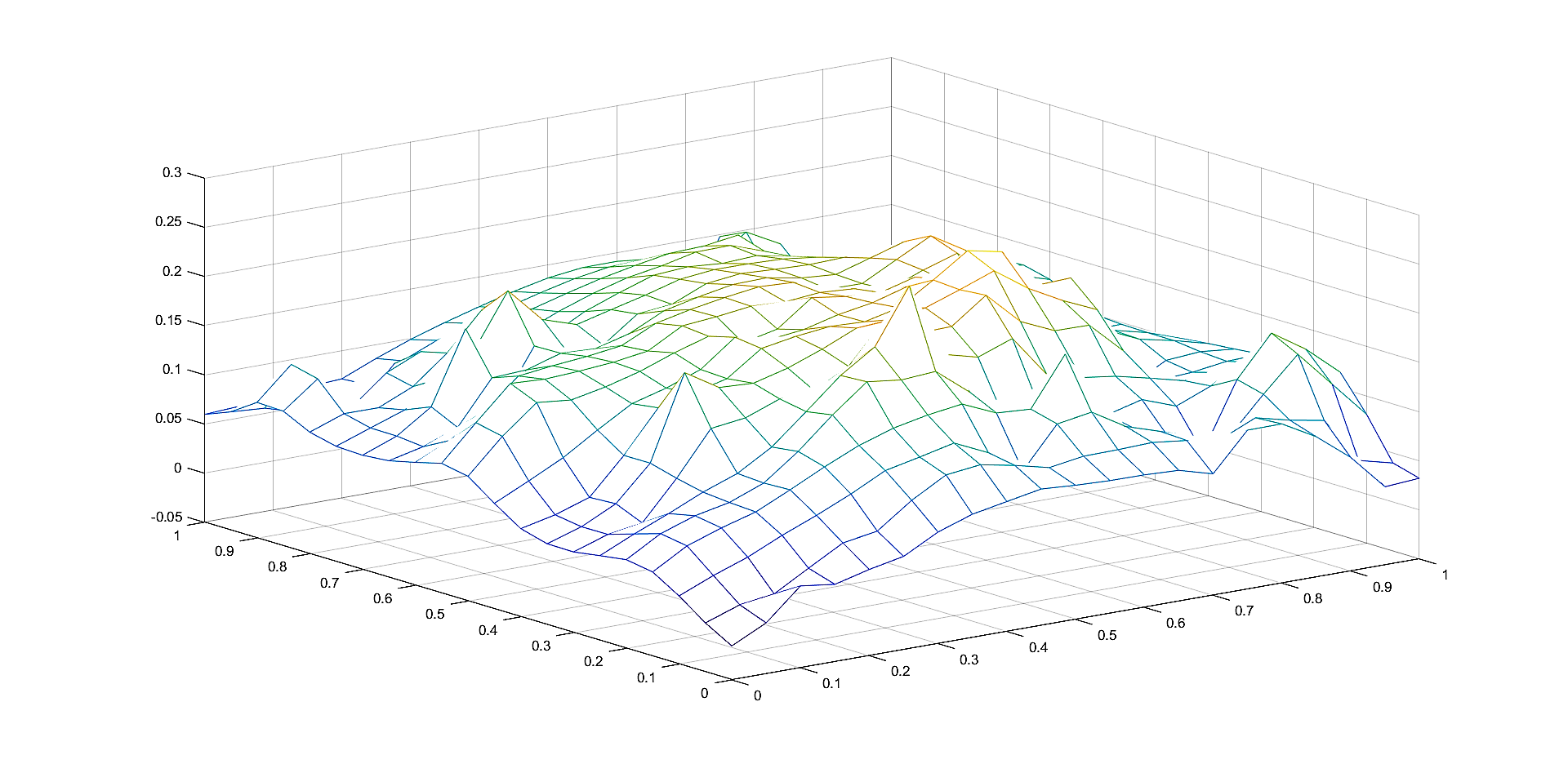

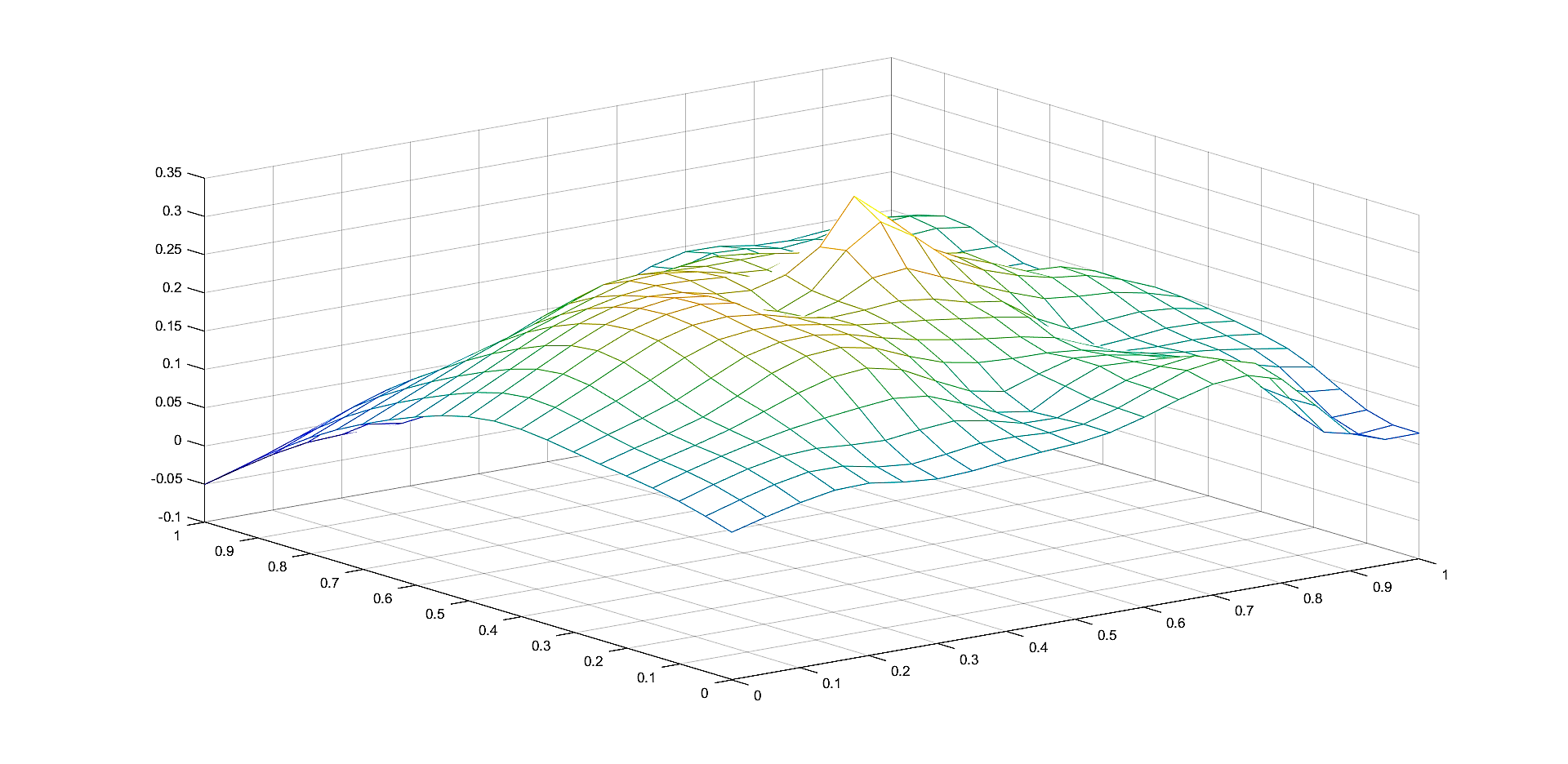

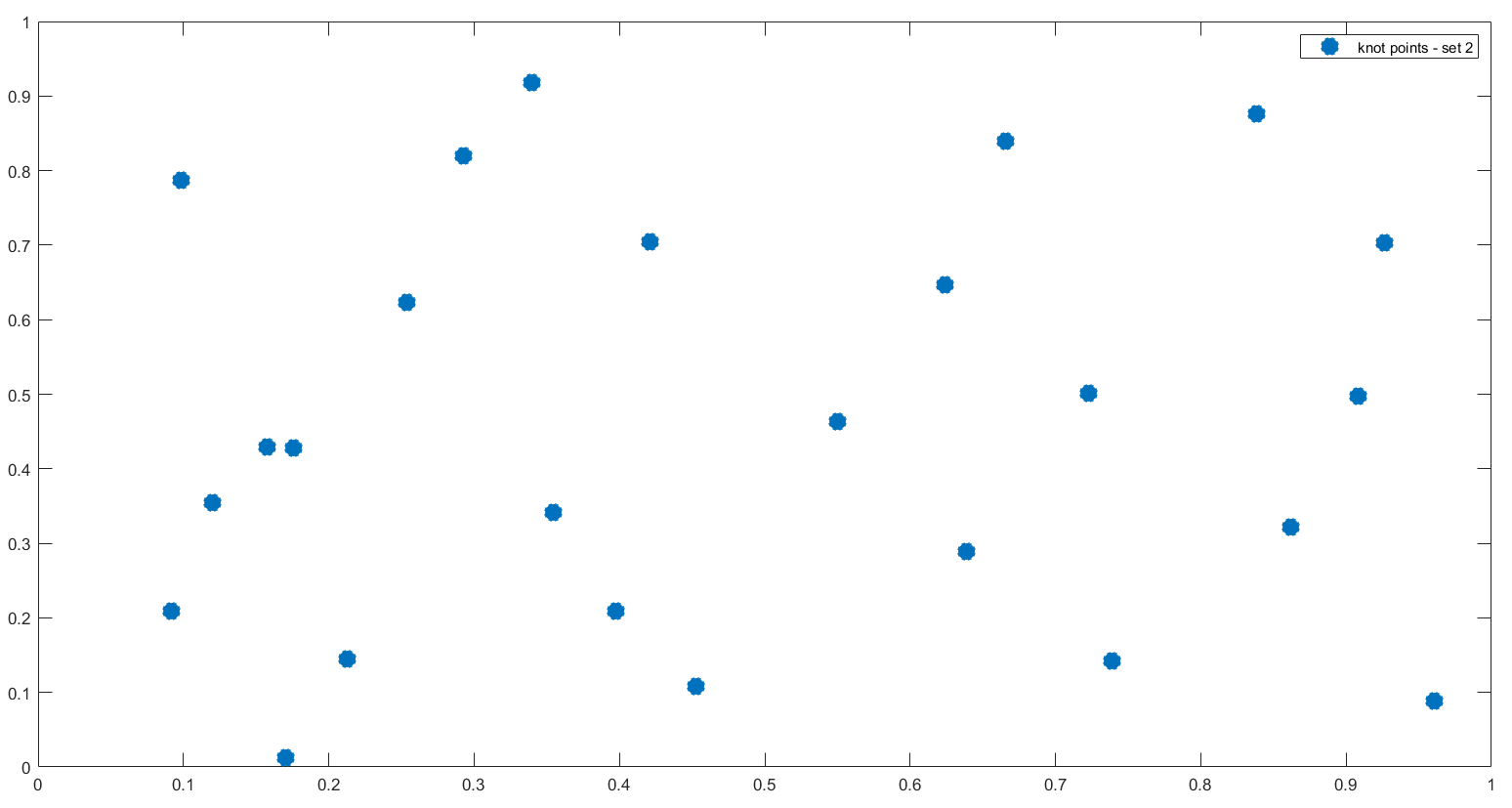

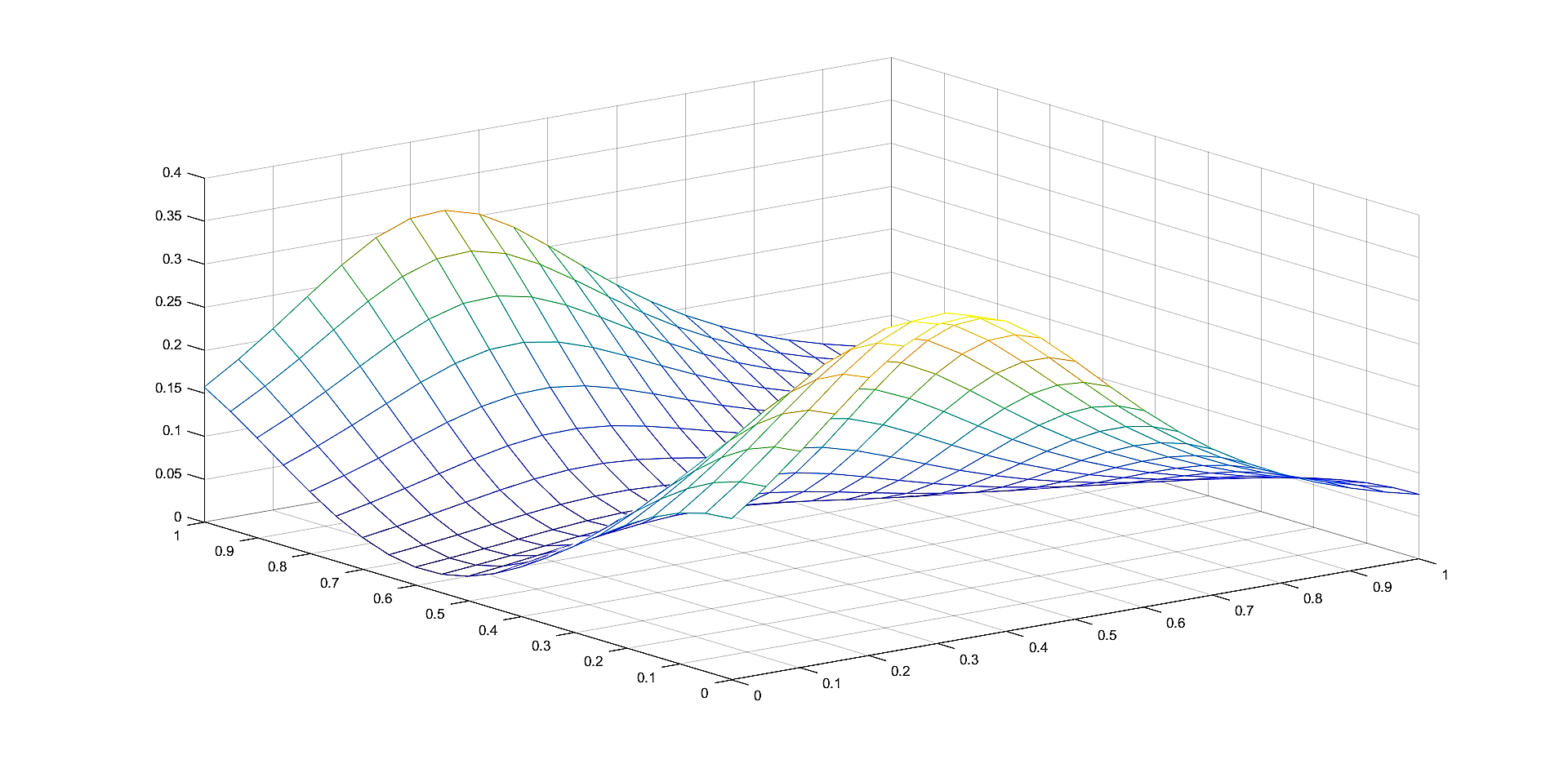

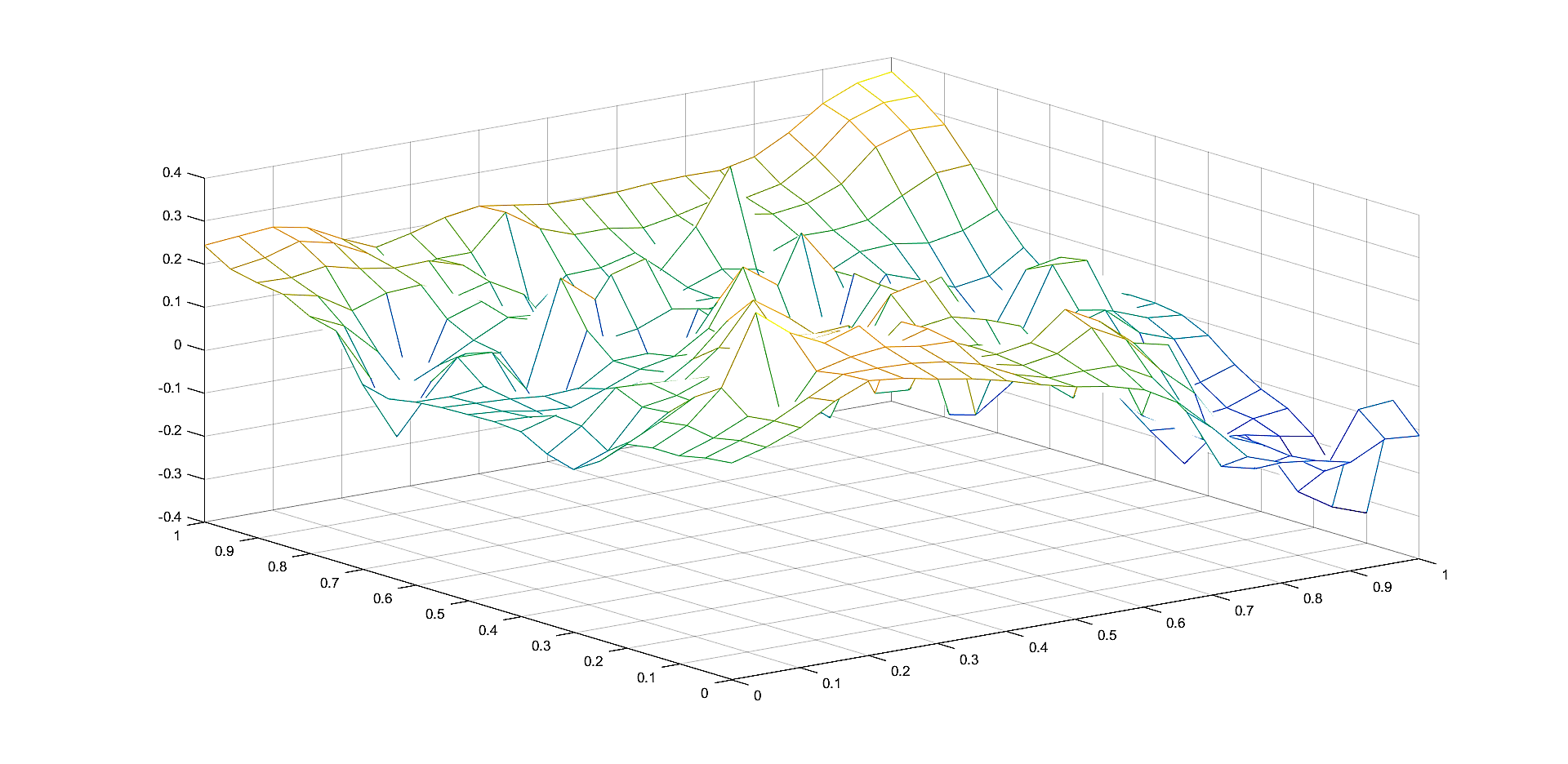

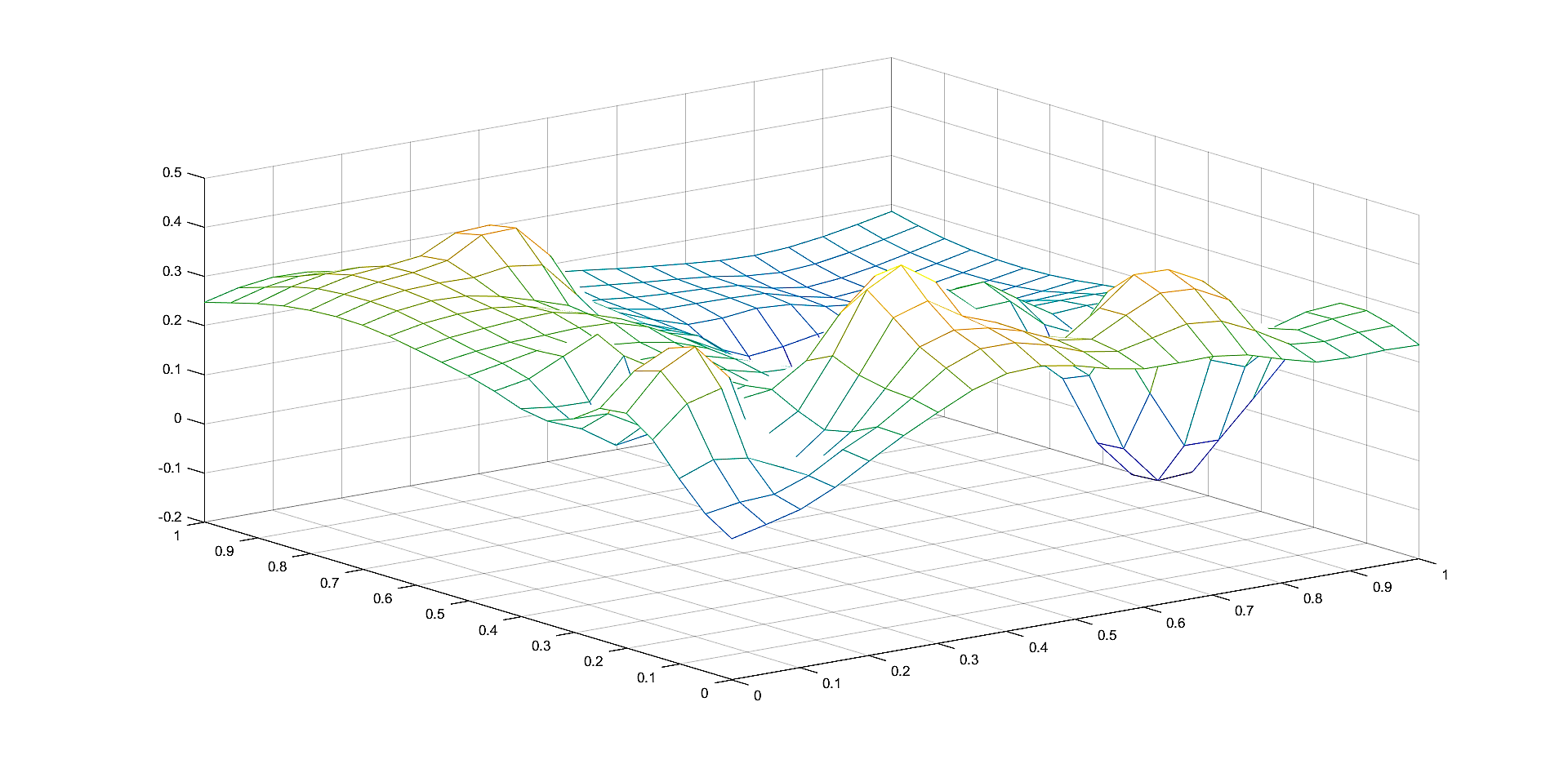

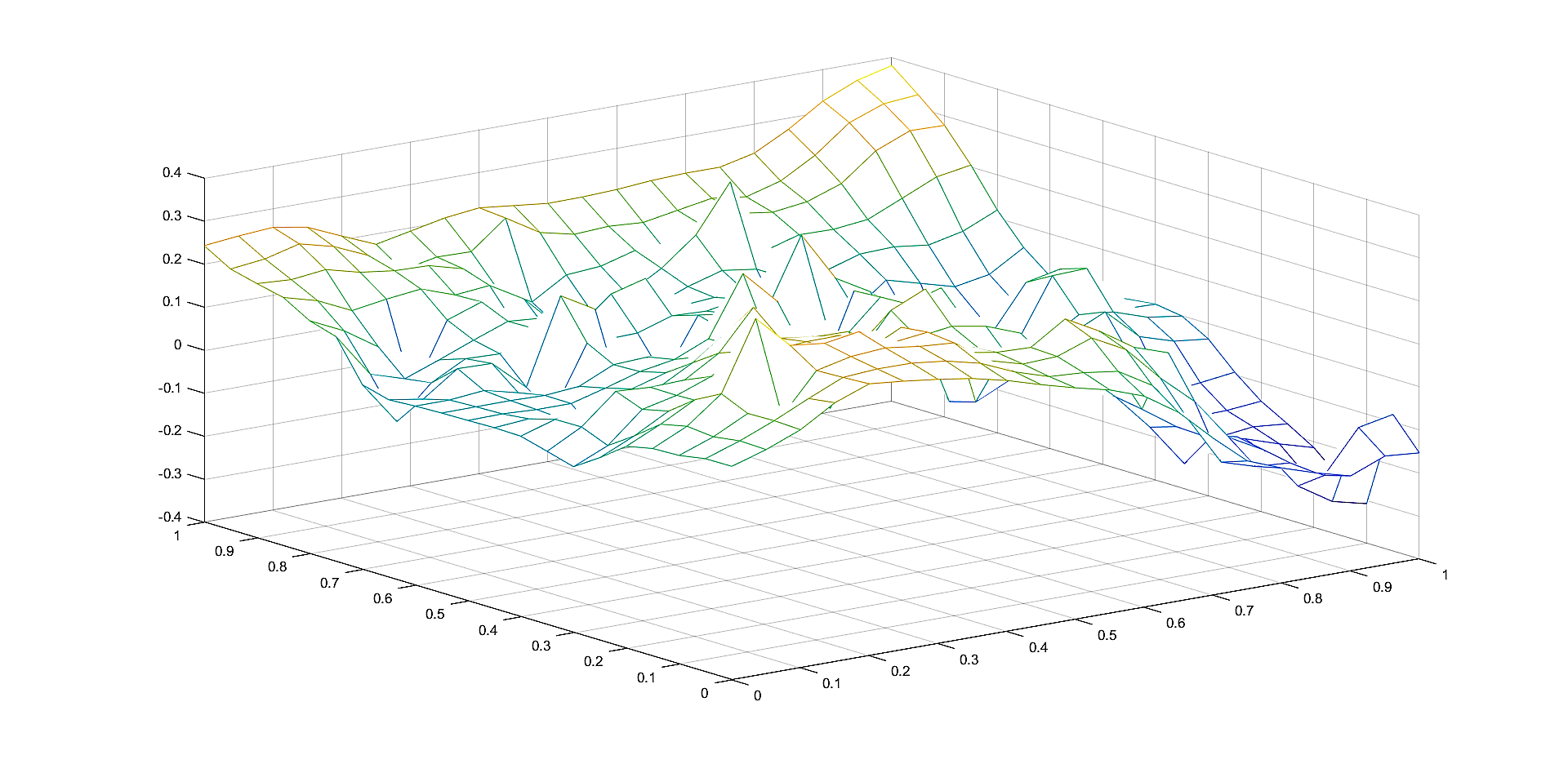

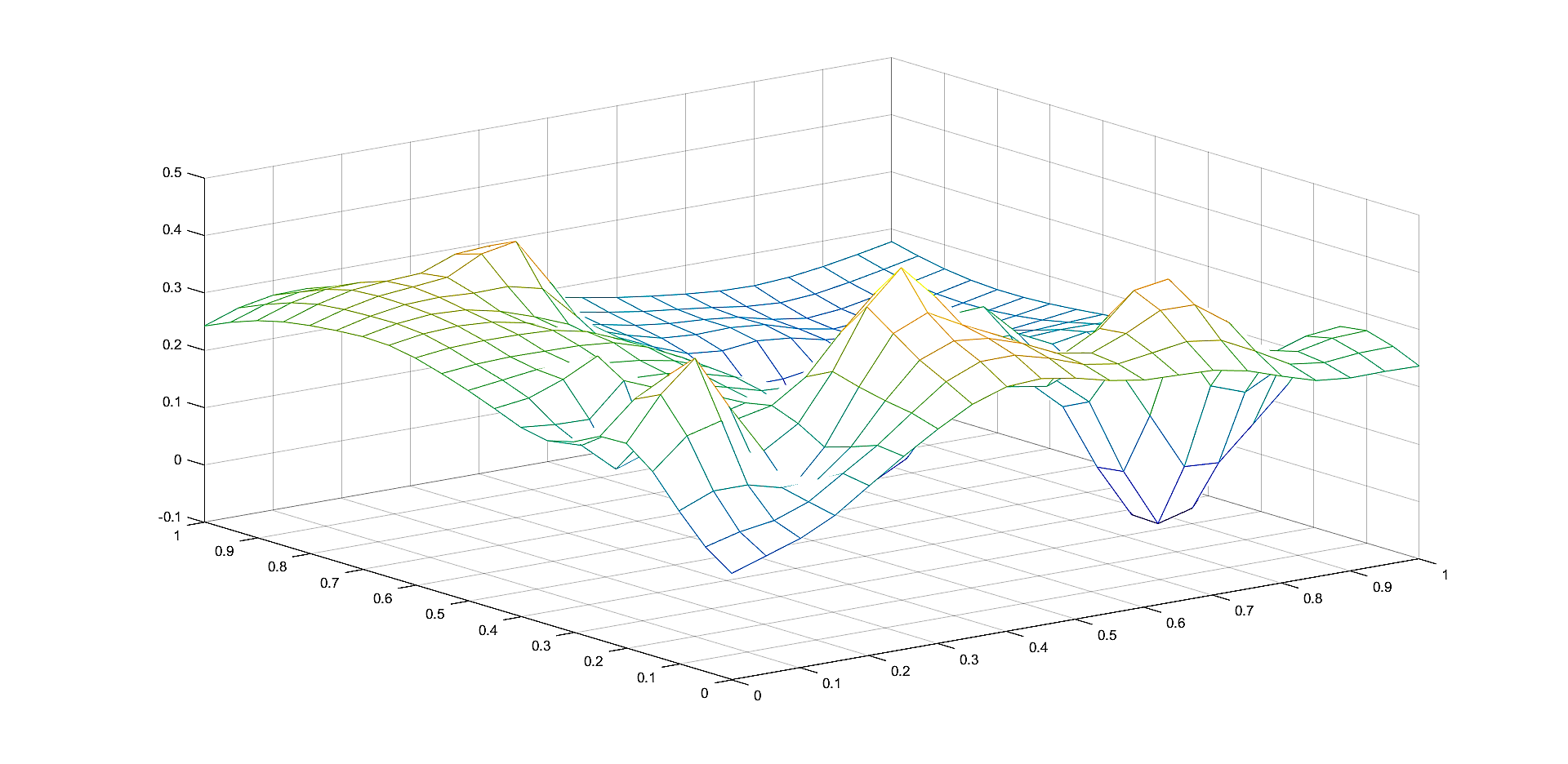

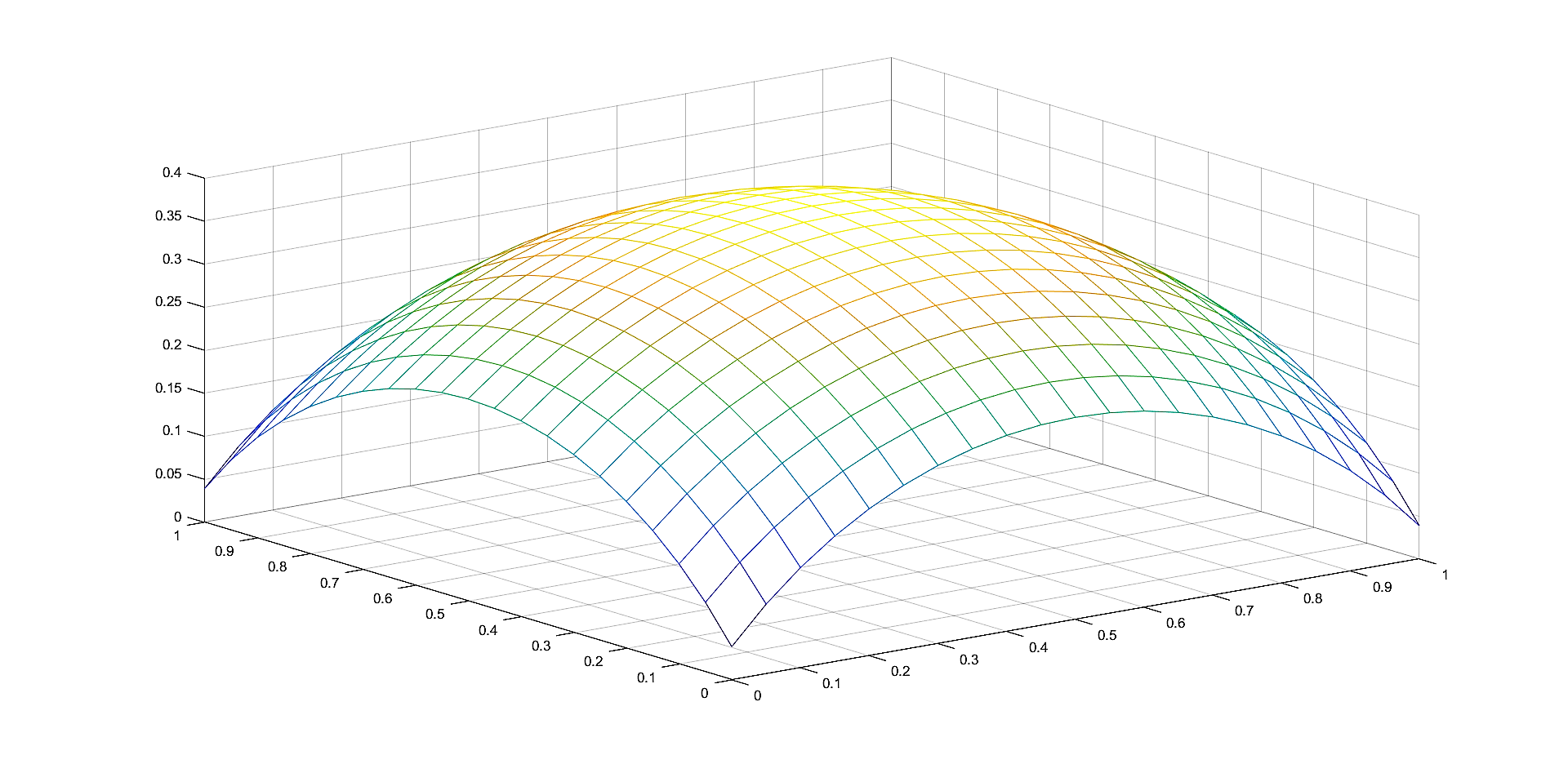

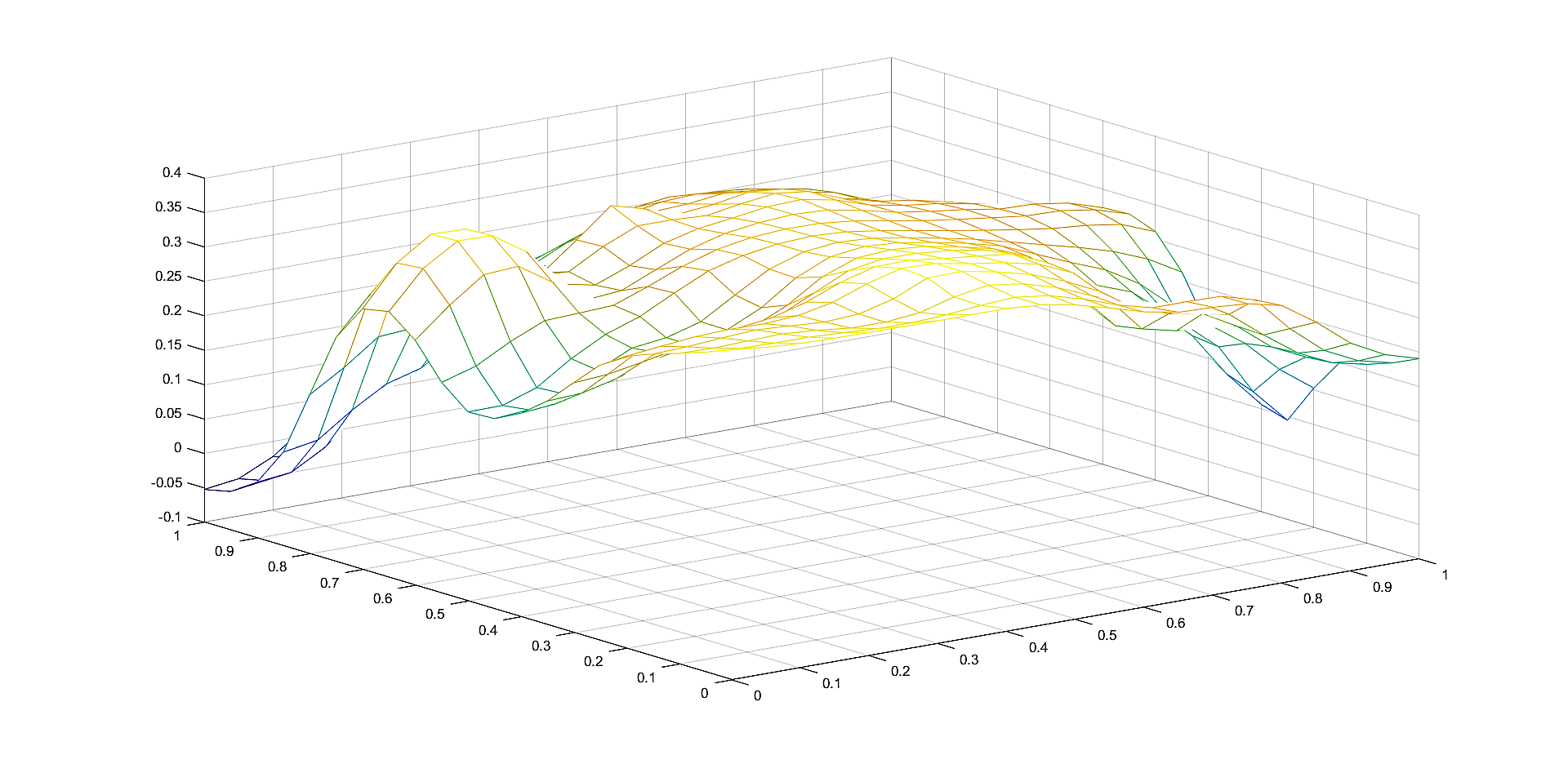

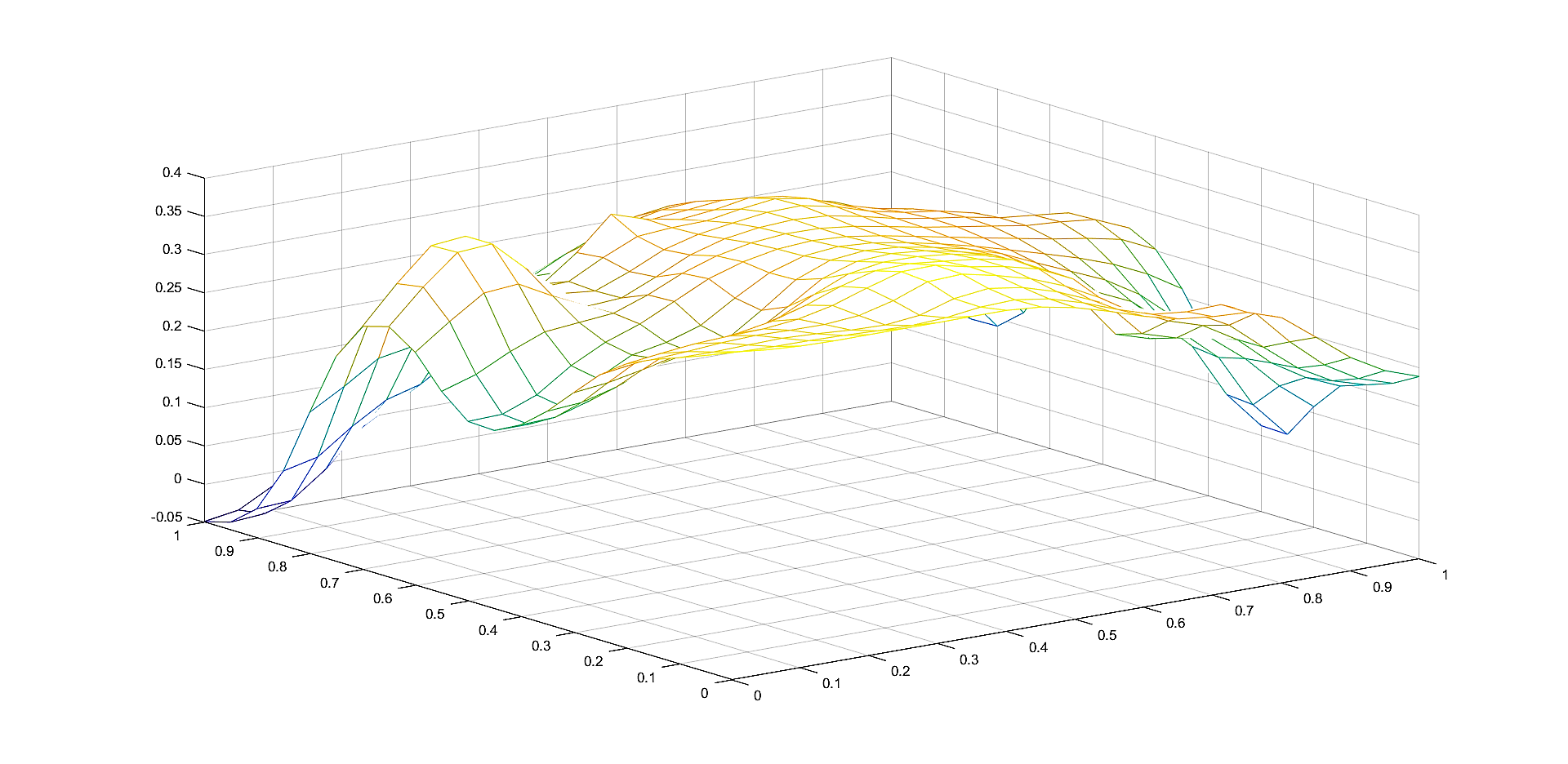

In Figures 2, 4, 6 we plot the graphs of the functions (3.1) and of the corresponding Shepard operators.

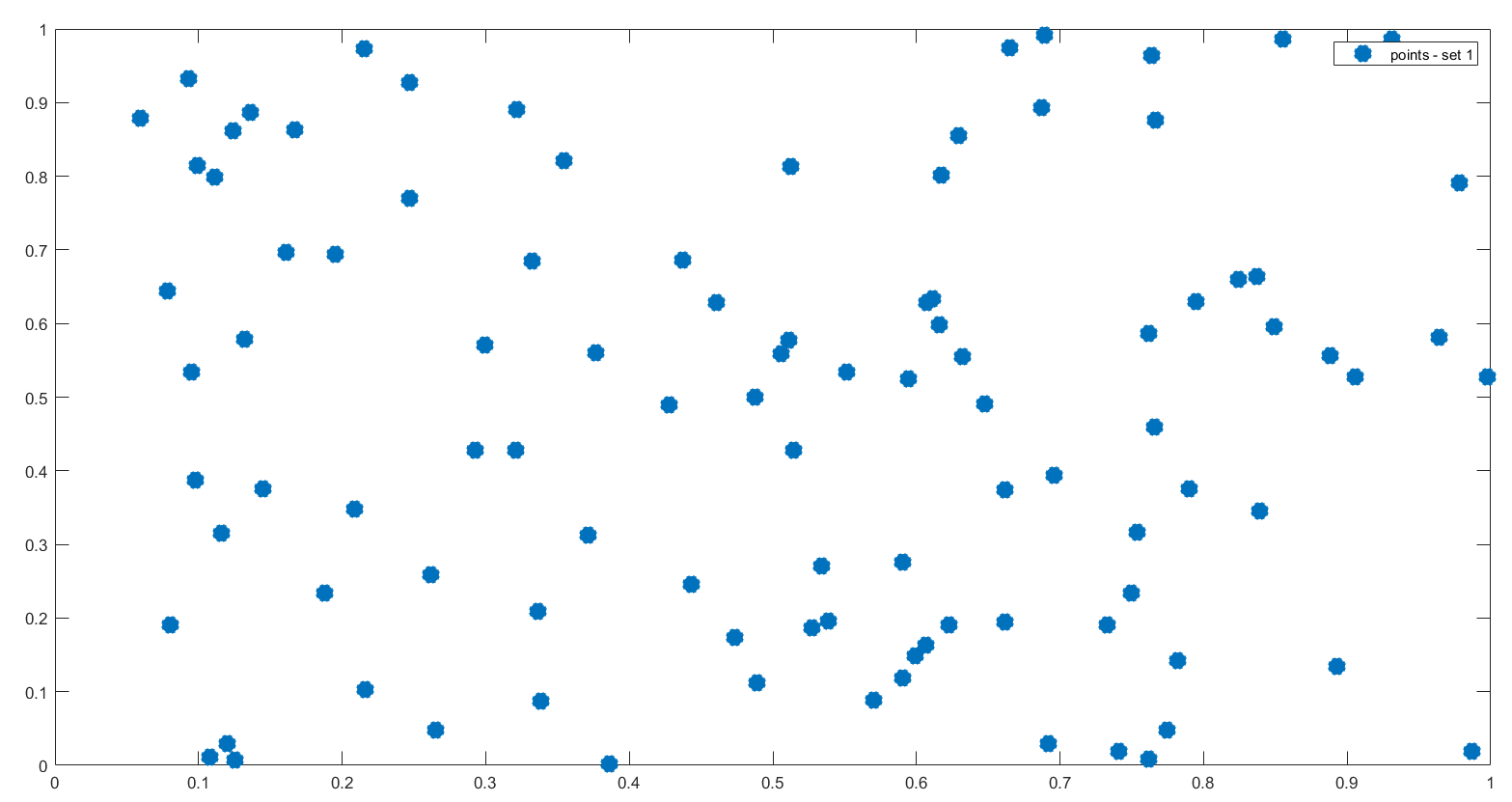

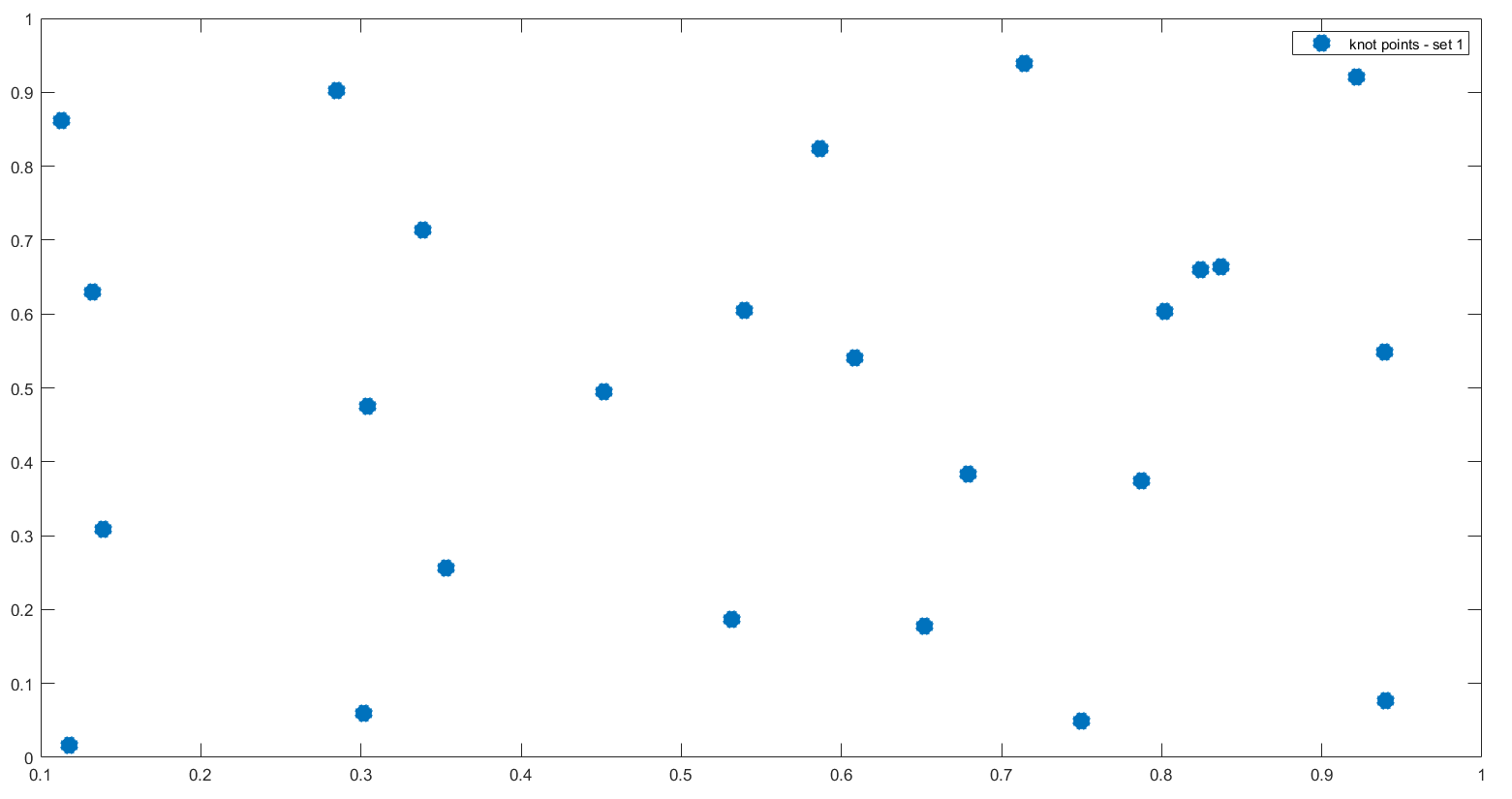

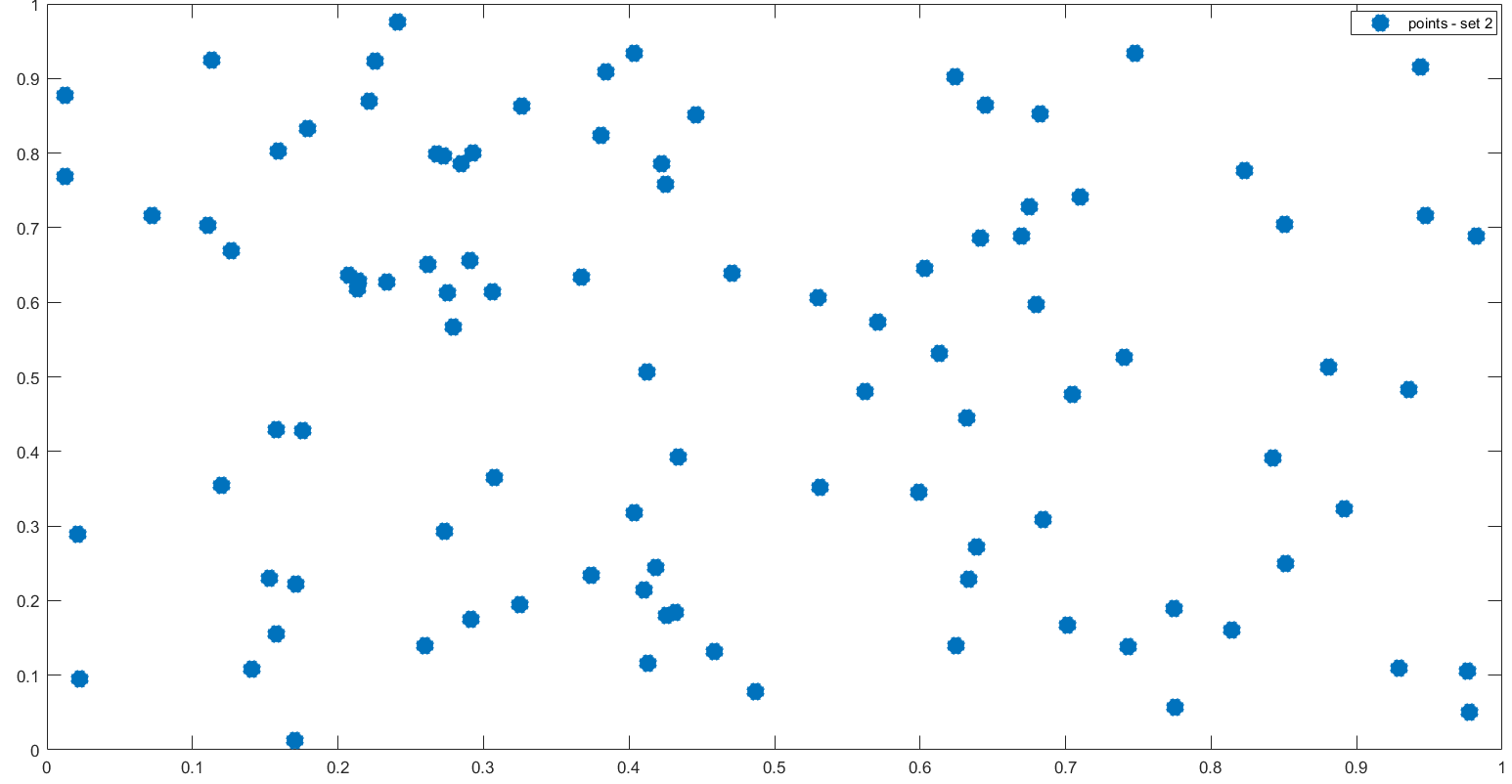

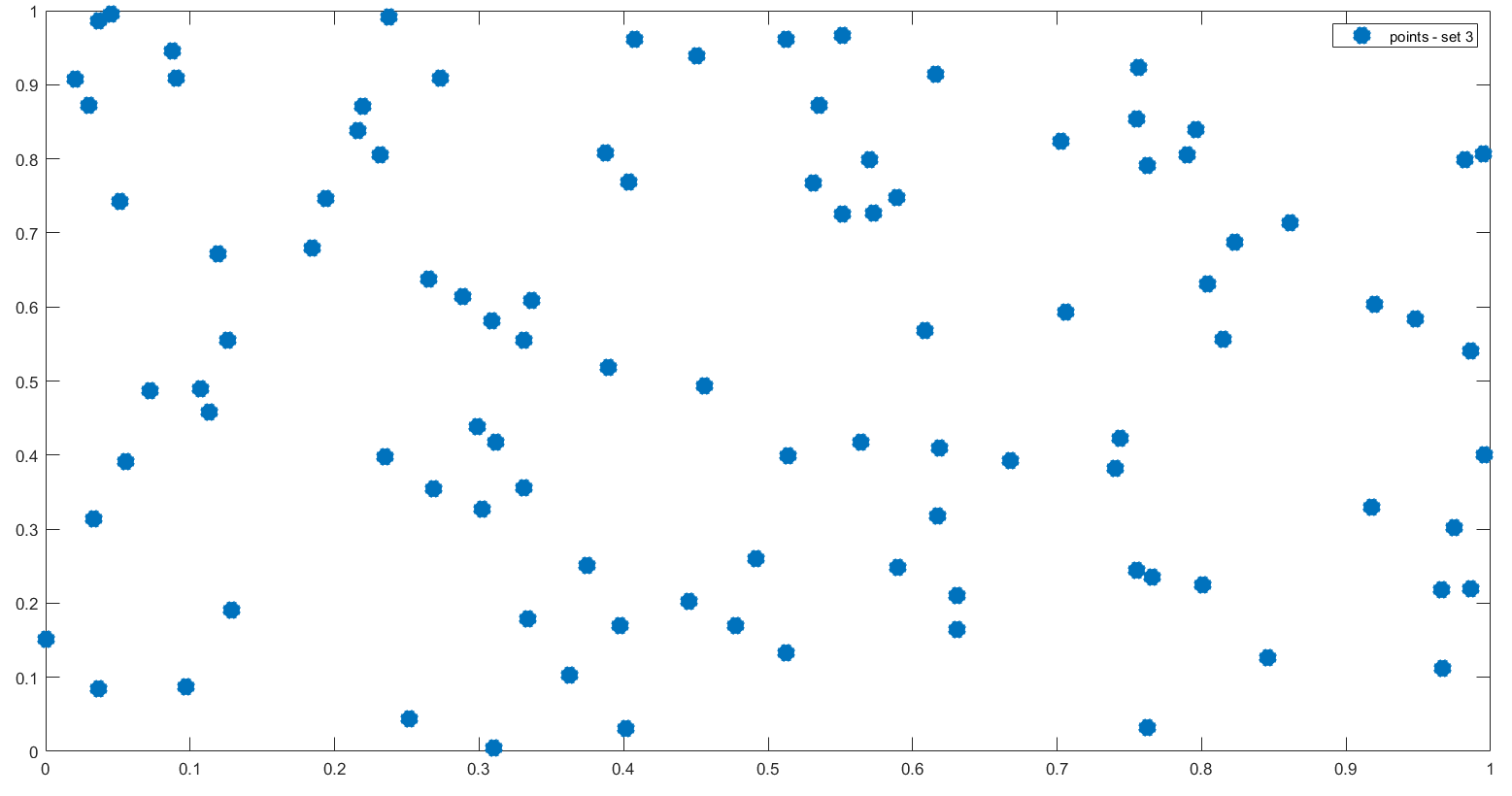

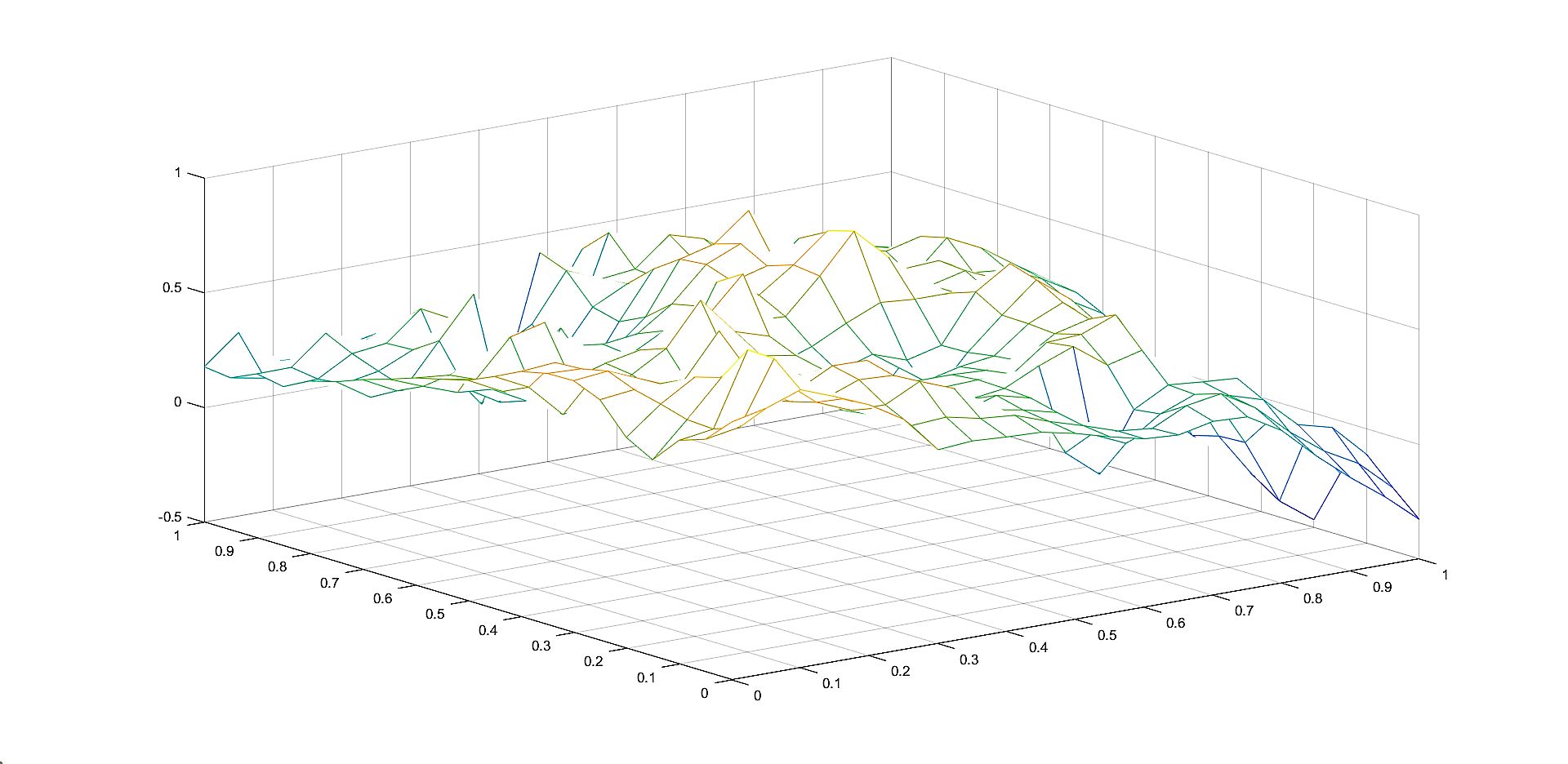

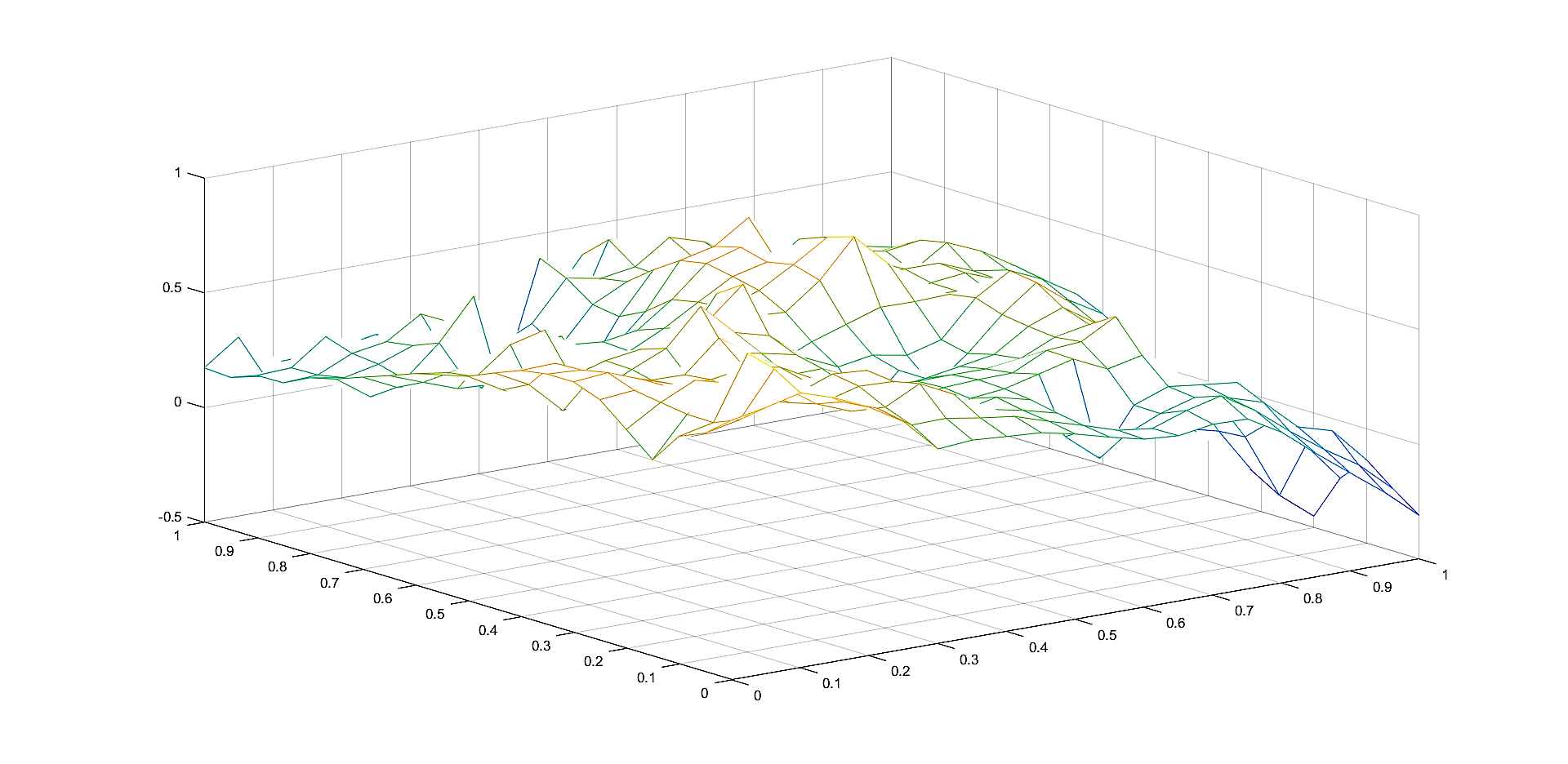

In Figures 1, 3, 5 we plot the sets of the given points and the corresponding sets of the representative knot points.

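Table 1. Maximum approximation errors.

| Operator | $f_1$ | $f_2$ | $f_3$ |
|---|---|---|---|
| Classical Shepard (1.1) | 0.0864 | 0.1095 | 0.1936 |
| Modified Shepard (1.3) | 0.0724 | 0.0970 | 0.1770 |
| Method 1 (2.1) | 0.1644 | 0.4001 | 0.6595 |
| Method 2 (2.3) | 0.1246 | 0.2858 | 0.3410 |
| Method 3 (2.5) | 0.1578 | 0.3783 | 0.6217 |
| Method 4 (2.6) | 0.1212 | 0.2834 | 0.3399 |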

References

- [1] Cătinaş, T., The combined Shepard-Abel-Goncharov univariate operator, Rev. Anal. Numér. Théor. Approx., 32(2003), pp. 11–20.

- [2] Cătinaş, T., The combined Shepard-Lidstone bivariate operator, In: de Bruin, M.G. et al. (eds.): Trends and Applications in Constructive Approximation. International Series of Numerical Mathematics, Springer Group-Birkhäuser Verlag, 151(2005), pp. 77–89.

- [3] Cătinaş, T., Bivariate interpolation by combined Shepard operators, Proceedings of 17th IMACS World Congress, Scientific Computation, Applied Mathematics and Simulation, ISBN 2-915913-02-1, 2005, 7 pp.

- [4] Cătinaş, T., The bivariate Shepard operator of Bernoulli type, Calcolo, 44(2007), no. 4, pp. 189–202.

- [5] Coman, Gh., The remainder of certain Shepard type interpolation formulas, Stud. Univ. Babeş-Bolyai Math., 32(1987), no. 4, pp. 24–32.

- [6] Coman, Gh., Hermite-type Shepard operators, Rev. Anal. Numér. Théor. Approx., 26(1997), pp. 33–38.

- [7] Coman, Gh., Shepard operators of Birkhoff type, Calcolo, 35(1998), pp. 197–203.

- [8] Farwig, R., Rate of convergence of Shepard’s global interpolation formula, Math. Comp., 46(1986), pp. 577–590.

- [9] Franke, R., Scattered data interpolation: tests of some methods, Math. Comp., 38(1982), pp. 181–200.

- [10] Franke, R., Nielson, G., Smooth interpolation of large sets of scattered data, Int. J. Numer. Meths. Engrg., 15(1980), pp. 1691–1704.

- [11] Lazzaro, D., Montefusco, L.B., Radial basis functions for multivariate interpolation of large scattered data sets, J. Comput. Appl. Math., 140(2002), pp. 521–536.

- [12] McMahon, J.R., Knot selection for least squares approximation using thin plate splines, M.S. Thesis, Naval Postgraduate School, 1986.

- [13] McMahon, J.R., Franke, R., Knot selection for least squares thin plate splines, Technical Report, Naval Postgraduate School, Monterey, 1987.

- [14] Renka, R.J., Cline, A.K., A triangle-based $C^1$ interpolation method, Rocky Mountain J. Math., 14(1984), pp. 223–237.

- [15] Renka, R.J., Multivariate interpolation of large sets of scattered data, ACM Trans. Math. Software, 14(1988), pp. 139–148.

- [16] Shepard, D., A two dimensional interpolation function for irregularly spaced data, Proc. 23rd Nat. Conf. ACM, 1968, pp. 517–523.

- [17] Trîmbiţaş, G., Combined Shepard-least squares operators - computing them using spatial data structures, Stud. Univ. Babeş-Bolyai Math., 47(2002), pp. 119–128.

- [18] Zuppa, C., Error estimates for moving least square approximations, Bull. Braz. Math. Soc., New Series, 34(2003), no. 2, pp. 231–249.
